Introduction

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What will I learn during this workshop?

What are the tools that I will be using?

What are the tidy data principles?

What is working in a more open way beneficial?

Objectives

Discover a complete data analysis process revolving around the tidy principles.

Learn how to increase your data analysis efficacy

Table of contents

1. Overview

Welcome!

In this training you will learn R, RStudio, git, and GitHub. You will learn modern data science with R and the tidyverse suite of packages. It’s going to be fun and empowering! You will learn a reproducible workflow that can be used in research and analyses of all kinds.

In particular, you will learn about the concept of literate programming, a concept coined by Donald Kuth where a program code is made primarily to be read and understood by other people, and secondarily to be executed by the computer. This means that literate programs are very easy to understand and share, as all the code is well explained.

This training will get acquainted with these skills and best practices, you will get comfortable with a workflow that you can use in your own projects. Overall, you will

Three main takeaways

- Modern data transformation and visualization (R/RStudio,

tidyverse).- Collaborative version control (

git/GitHub).- Associating code and its description through literate programming (R Markdown/GitHub).

1.1 What to expect

This is going to be a fun workshop.

The plan is to expose you to a lot of great tools that you can have confidence using in your research. You’ll be working hands-on and doing the same things on your own computer as we do live on up on the screen. We’re going to go through a lot in these two days and it’s less important that you remember it all. More importantly, you’ll have experience with it and confidence that you can do it. The main thing to take away is that there are good ways to approach your analyses; we will teach you to expect that so you can find what you need and use it! A theme throughout is that tools exist and are being developed by real, and extraordinarily nice, people to meet you where you are and help you do what you need to do. If you expect and appreciate that, you will be more efficient in doing your awesome science.

You are all welcome here, please be respectful of one another. You are encouraged to help each other. We abide to the Carpentries Code of Conduct.

Everyone in this workshop is coming from a different place with different experiences and expectations. But everyone will learn something new here, because there is so much innovation in the data science world. Instructors and helpers learn something new every time, from each other and from your questions. If you are already familiar with some of this material, focus on how we teach, and how you might teach it to others. Use these workshop materials not only as a reference in the future but also for talking points so you can communicate the importance of these tools to your communities. A big part of this training is not only for you to learn these skills, but for you to also teach others and increase the value and practice of open data science in science as a whole.

1.2 What you will learn

- how to think about data

- how to think about data separately from your research questions.

- how and why to tidy data and analyze tidy data, rather than making your analyses accommodate messy data.

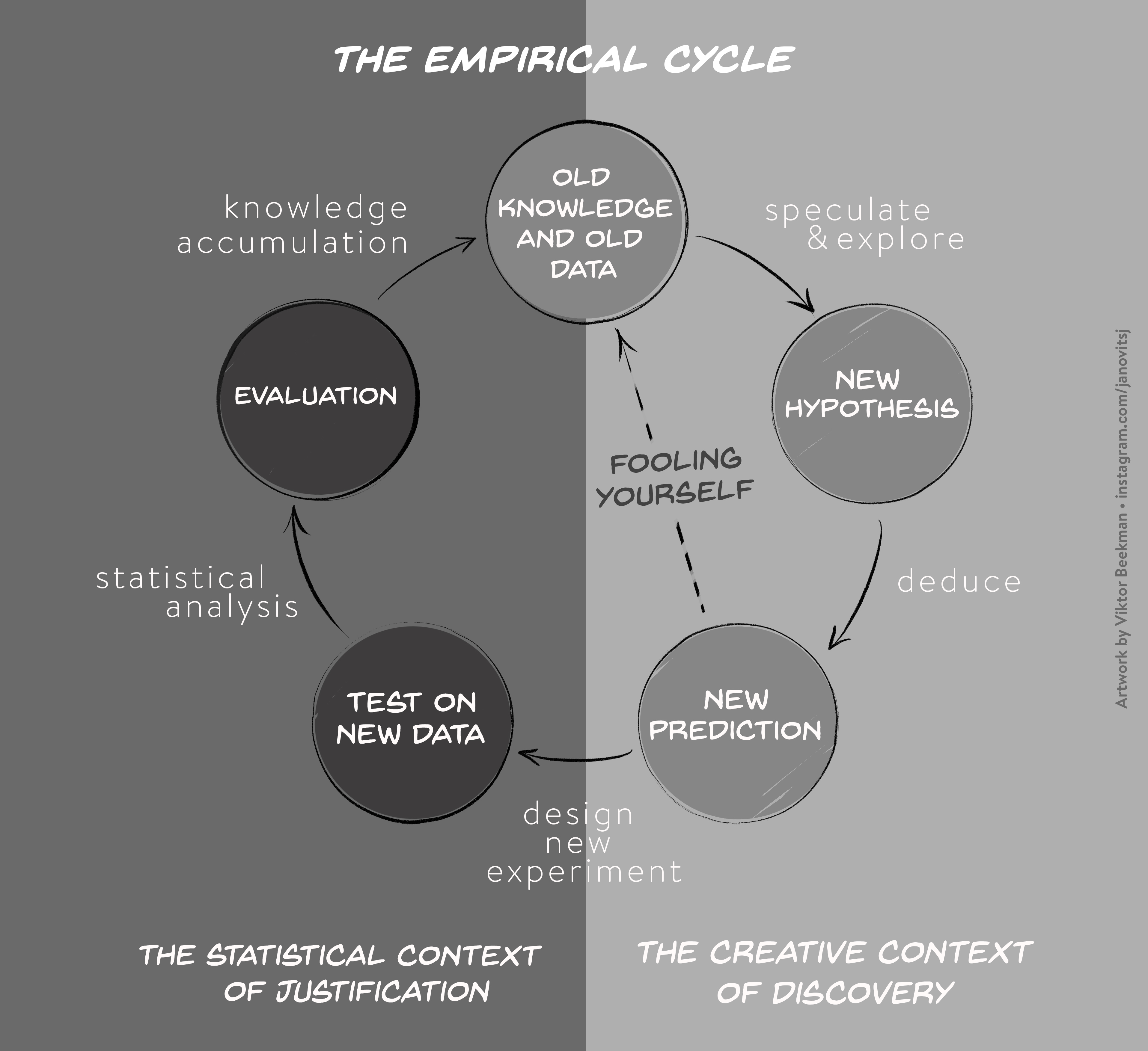

- how there is a lot of decision-making involved with data analysis, and a lot of creativity.

- how to increase efficiency in your data science

- foster reproducibility for you and others.

- facilitate collaboration with others — especially your future self!

- how Open Science is a great benefit

- Open Science is often good science: reproducible, clear, easy to share and access.

- broaden the impact of your work.

- ameliorate your scientific reputation.

- how to learn with intention and community

- think long-term instead of only to get a single job done now.

- the #rstats online community is fantastic. The tools we’re using are developed by real people. Real, nice people. They are building powerful and empowering tools and are welcoming to all skill-levels.

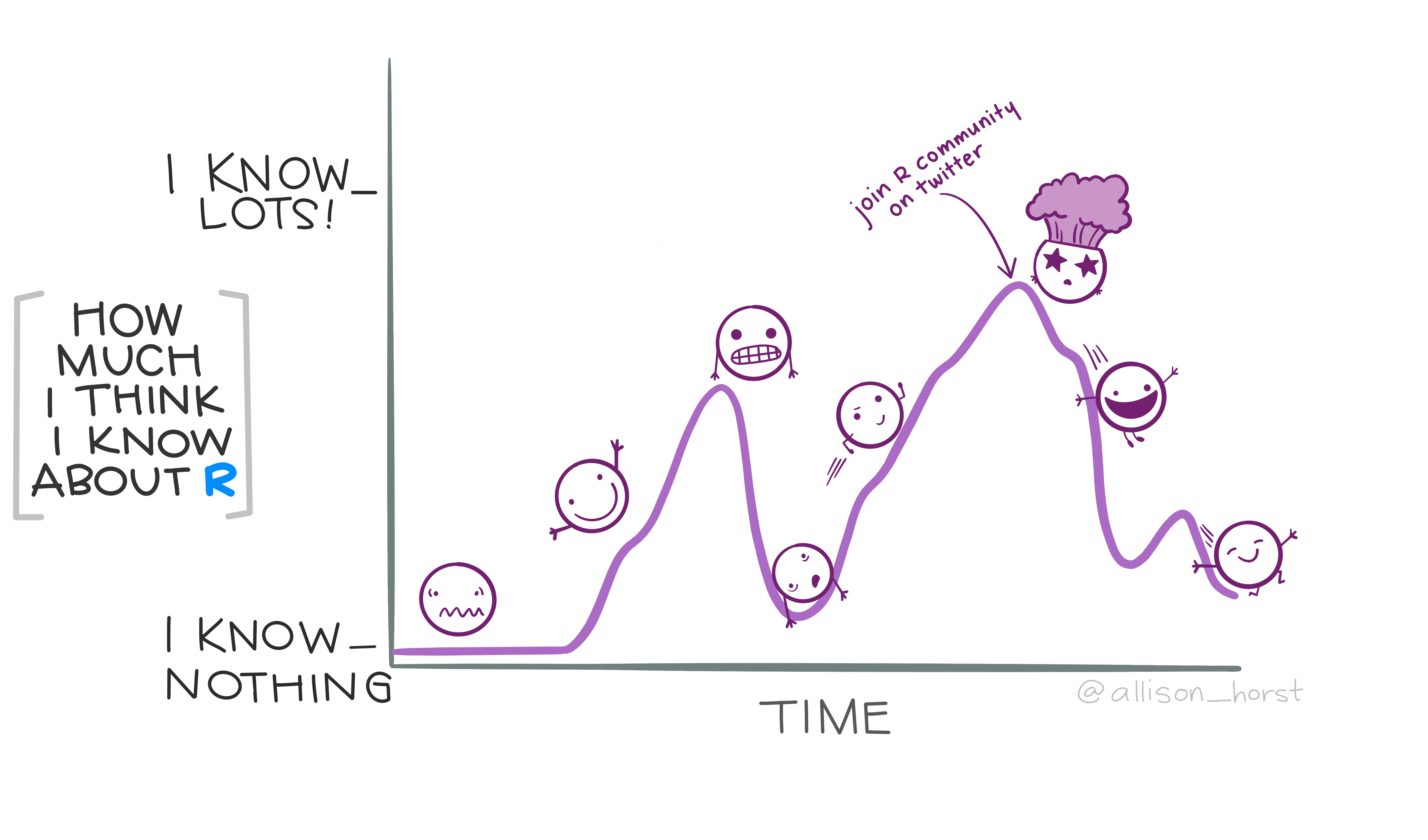

1.3 Be persistent

Learning a new programming language such as R and a new theme (data analysis) is not an easy task. Also, there is literally no end to learning, you will always find a better more smooth way to do things, a new package recently developed etc.

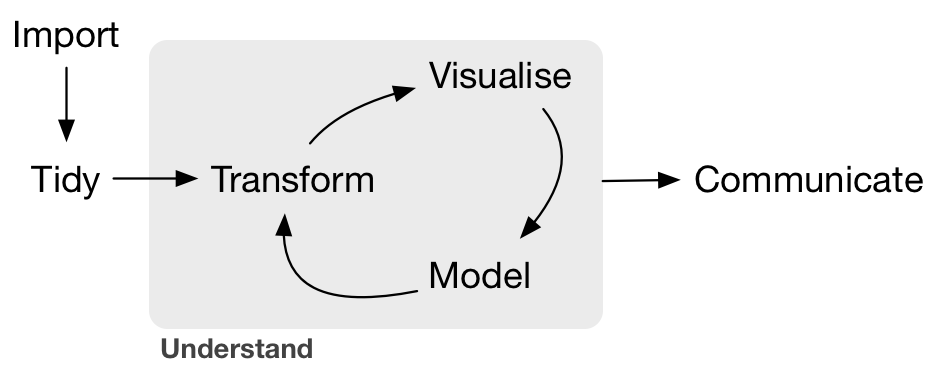

2. The tidy data workflow

We will be learning about tidy data. And how to use a tidyverse suite of tools to work with tidy data.

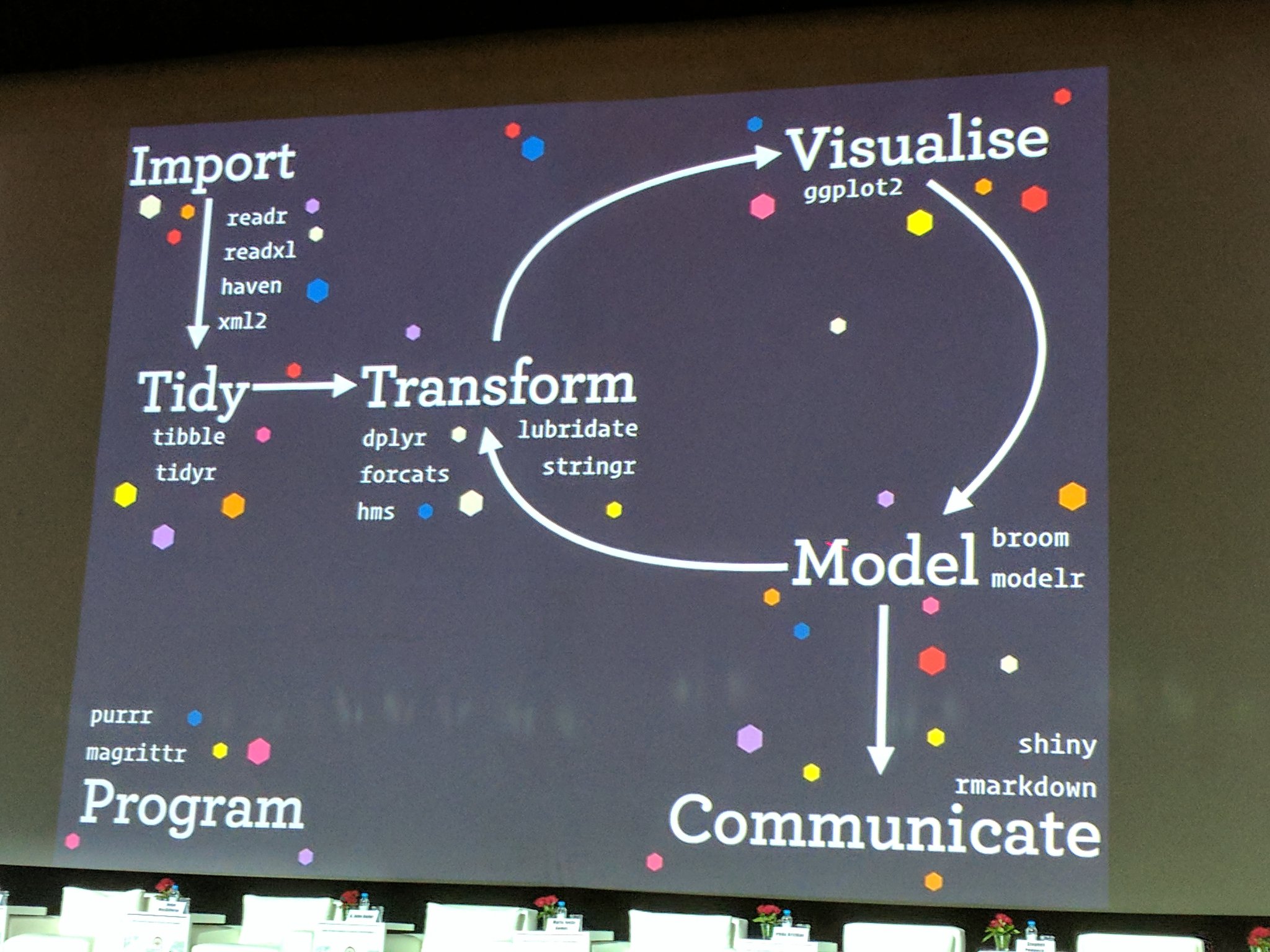

Hadley Wickham and his team have developed a ton of the tools we’ll use today. Here’s an overview of techniques to be covered in Hadley Wickham and Garrett Grolemund of RStudio’s book R for Data Science:

We will be focusing on:

- Tidy:

tidyrto organize rows of data into unique values. - Transform:

dplyrto manipulate/wrangle data based on subsetting by rows or columns, sorting and joining. - Visualize:

ggplot2static plots, using grammar of graphics principles. - Communicate: dynamic documents with

knitrto produce R Markdown notebooks.

This is really critical. Instead of building your analyses around whatever (likely weird) format your data are in, take deliberate steps to make your data tidy. When your data are tidy, you can use a growing assortment of powerful analytical and visualization tools instead of inventing home-grown ways to accommodate your data. This will save you time since you aren’t reinventing the wheel, and will make your work more clear and understandable to your collaborators (most importantly, Future You).

Reference: original paper about tidy datasets from Hadley Wickham.

2.1 Learning with public datasets

One of the most important things you will learn is how to think about data separately from your own research context. Said in another way, you’ll learn to distinguish your data questions from your research questions. Here, we are focusing on data questions, and we will use data that is not specific to your research.

We will be using several different data sets throughout this training, and will help you see the patterns and parallels to your own data, which will ultimately help you in your research.

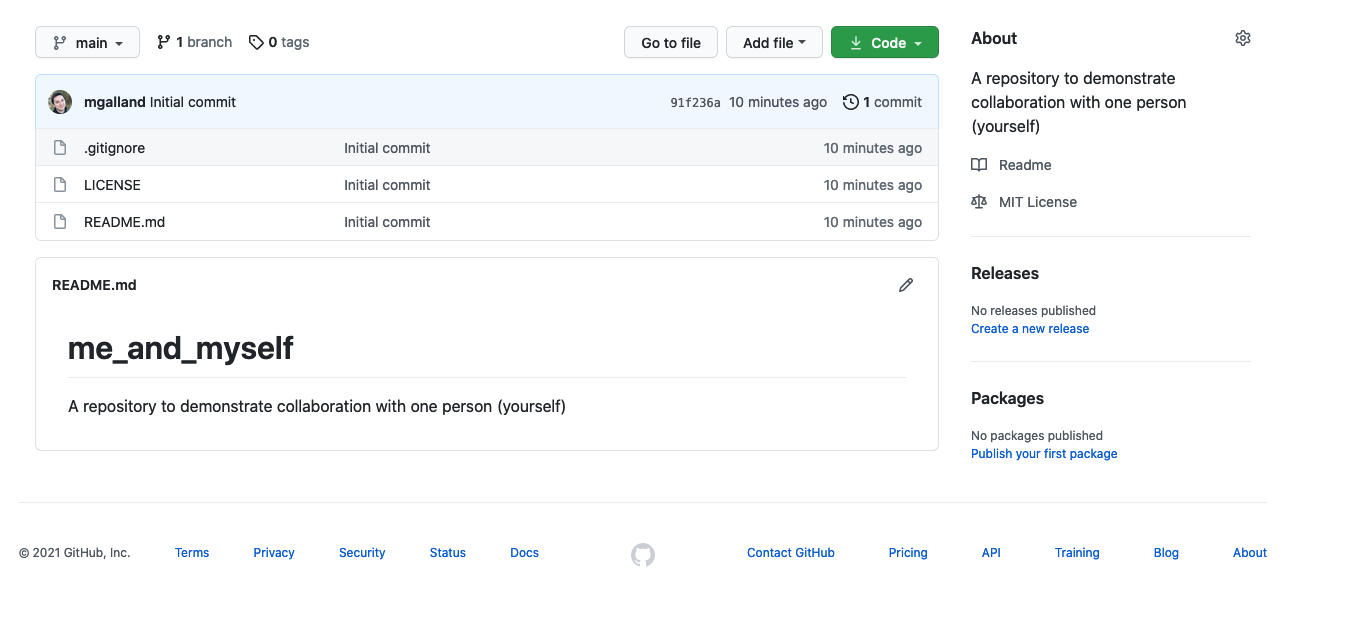

2.2 Emphasizing collaboration

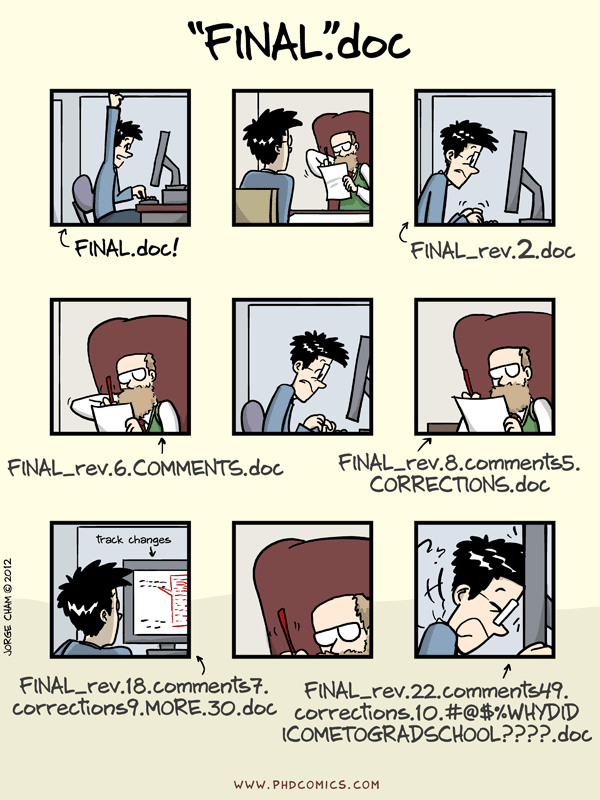

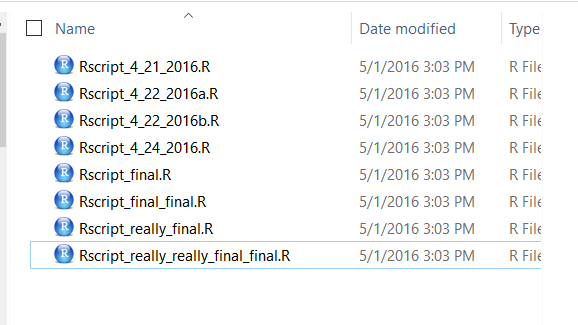

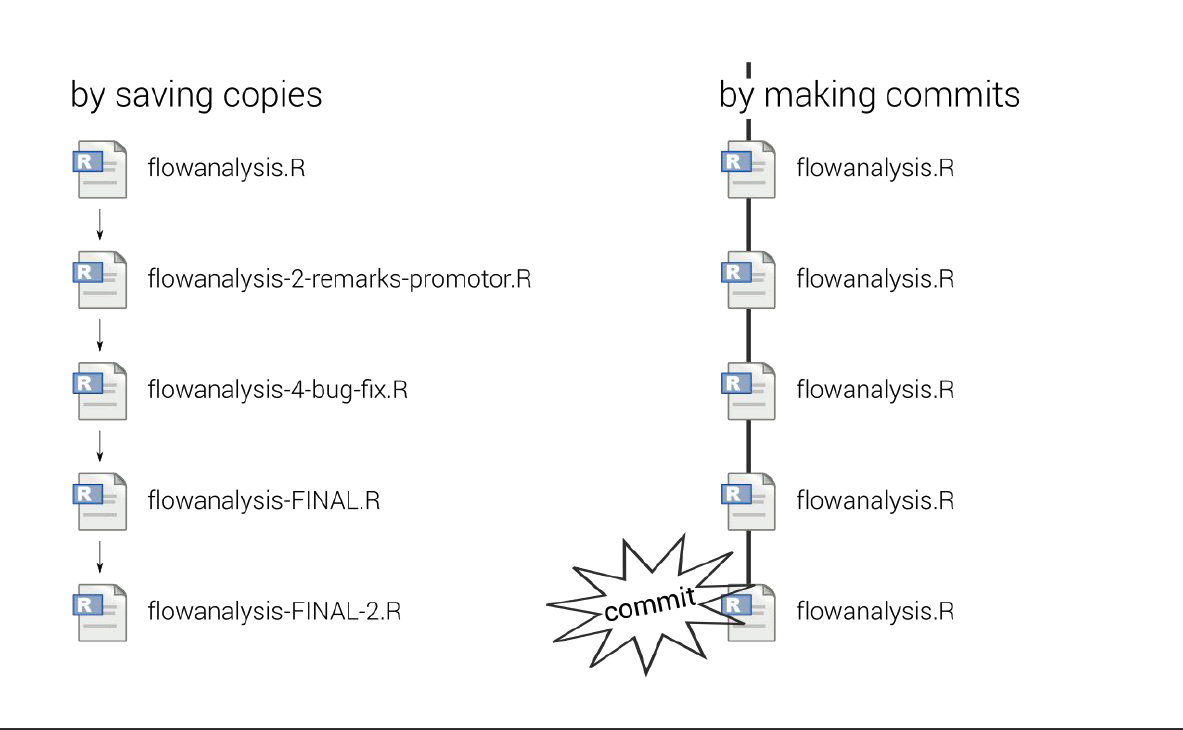

Collaborating efficiently has historically been really hard to do. It’s only been the last 20 years or so that we’ve moved beyond mailing things with the postal service. Being able to email and get feedback on files through track changes was a huge step forward, but it comes with a lot of bookkeeping and reproduciblity issues (did I do my analyses with thesis_final_final.xls or thesis_final_usethisone.xls?). But now, open tools make it much easier to collaborate.

Working with collaborators in mind is critical for reproducibility. And, your most important collaborator is your future self. This training will introduce best practices using open tools, so that collaboration will become second nature to you!

2.3 By the end of the course

By the end of the course, you’ll wrangle a few different data sets, and make your own graphics that you’ll publish on webpages you’ve built collaboratively with GitHub and RMarkdown. Woop!

Here are some important things to keep in mind as you learn (these are joke book covers):

3. Credits

This lesson has been formatted according to the Carpentries Foundation lesson template and following their recommendations on how to teach researchers good practices in programming and data analysis.

This material builds from a lot of fantastic materials developed by others in the open data science community. Most of the content derives from the Ocean Health Index Data Science Training which are greatly acknowledge for the quality of their teaching materials.

It also pulls from the following resources, which are highly recommended for further learning and as resources later on. Specific lessons will also cite more resources.

- R for Data Science by Hadley Wickham and Garrett Grolemund

- STAT 545 by Jenny Bryan

- Happy Git with R by Jenny Bryan

- Software Carpentry by the Carpentries

- Artwork from @juliesquid for @openscapes (illustrated by @allison_horst).

- Artwort from @allisonhorst rstats illustrations

Key Points

Tidy data principles are essential to increase data analysis efficiency and code readability.

Using R and RStudio, it becomes easier to implement good practices in data analysis.

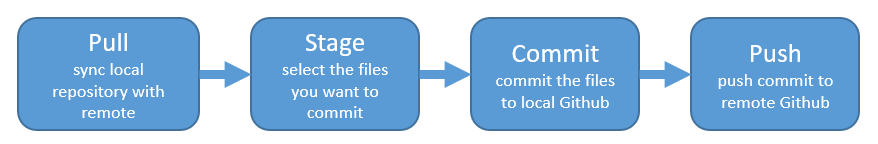

I can make my workflow more reproducible and collaborative by using git and Github.

R & RStudio, R Markdown

Overview

Teaching: 50 min

Exercises: 10 minQuestions

How do I orient myself in the RStudio interface?

How can I work with R in the console?

What are built-in R functions and how do I use their help page?

How can I generate an R Markdown notebook?

Objectives

Learn what is an Integrated Developing Environment.

Learn to work in the R console interactively.

Learn how to generate a reproducible code notebook with R Markdown.

Learn how to create an HTML or PDF document from a R Markdown notebook.

Understand that R Markdown notebooks foster literate programming, reproducibility and open science.

Table of Contents

- 1. Introduction

- 2. A quick touR

- 3. Diving deepeR

- 4. R Markdown notebook

- 5. Import your own data

- 6. Credits and additional resources

1. Introduction

This episode is focusing on the concept of literate programming supported by the ability to combine code, its output and human-readable descriptions in a single R Markdown document.

Literate programming

More generally, the mixture of code, documentation (conclusion, comments) and figures in a notebook is part of the so-called “literate programming” paradigm (Donald Knuth, 1984). Your code and logical steps should be understandable for human beings. In particular these four tips are related to this paradigm:

- Do not write your program only for R but think also of code readers (that includes you).

- Focus on the logic of your workflow. Describe it in plain language (e.g. English) to explain the steps and why you are doing them.

- Explain the “why” and not the “how”.

- Create a report from your analysis using a R Markdown notebook to wrap together the data + code + text.

1.1 The R Markdown format

Dr. Jenny Bryan’s lectures from STAT545 at R Studio Education

Leave your mark

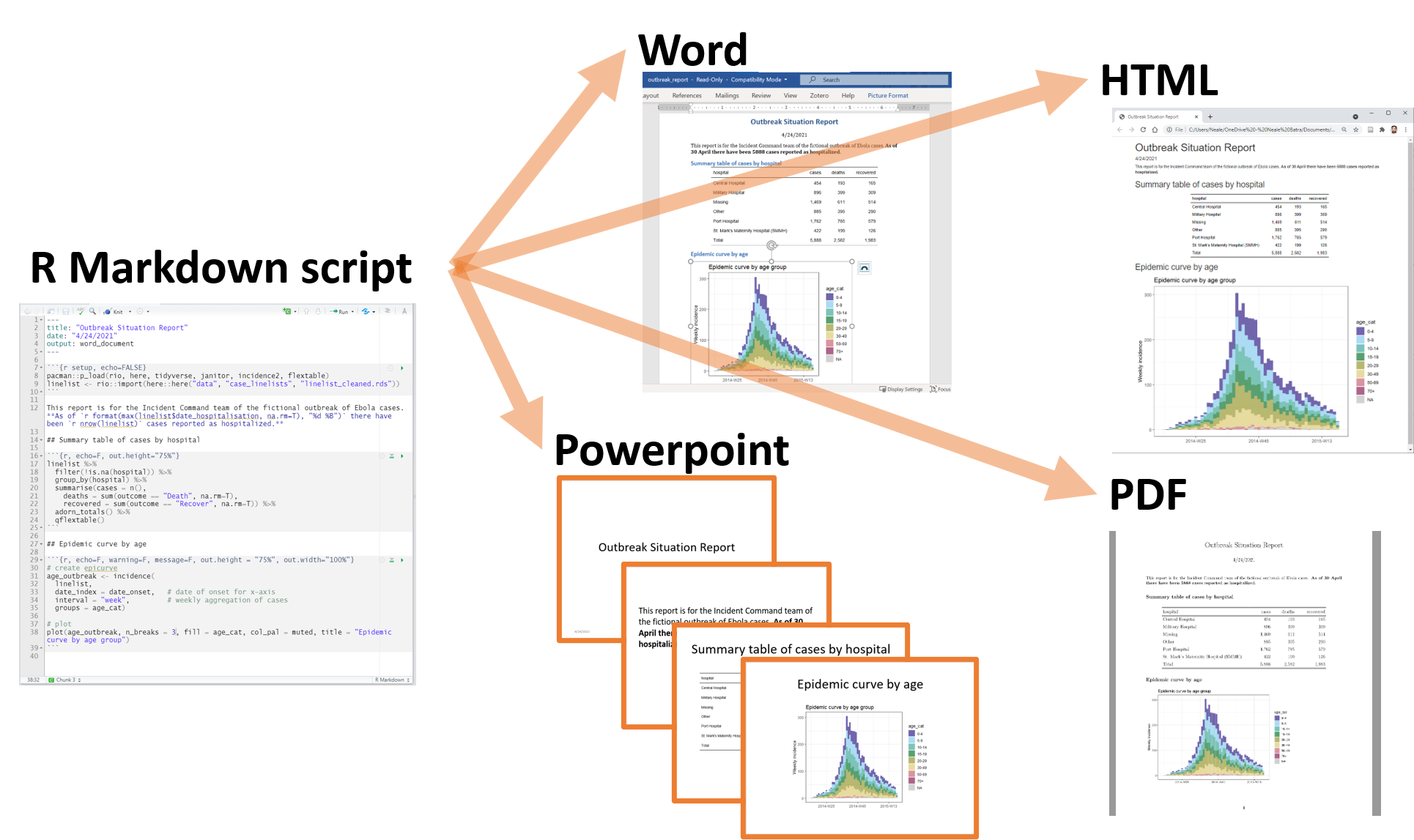

R Markdown allows you to convert your complete analysis into a single report that is easy to share and that should recapitulate the logic of your code and related outputs.

A variety of output formats are supported:

- Word document

- Powerpoint

- HTML

In practice, it is best practice to create a PDF document from your analysis as PDF documents are easy to open and visualise online especially on GitHub.

1.2 Why learn R with RStudio?

You are all here today to learn how to code. Coding made me a better scientist because I was able to think more clearly about analyses, and become more efficient in doing so. Data scientists are creating tools that make coding more intuitive for new coders like us, and there is a wealth of awesome instruction and resources available to learn more and get help.

Here is an analogy to start us off. Think of yourself as a pilot, and R is your airplane. You can use R to go places! With practice you’ll gain skills and confidence; you can fly further distances and get through tricky situations. You will become an awesome pilot and can fly your plane anywhere.

And if R were an airplane, RStudio is the airport. RStudio provides support! Runways, communication, community, and other services that makes your life as a pilot much easier. So it’s not only the infrastructure (the user interface or IDE), although it is a great way to learn and interact with your variables, files, and interact directly with GitHub. It’s also a data science philosophy, R packages, community, and more. So although you can fly your plane without an airport and we could learn R without RStudio, that’s not what we’re going to do.

Take-home message

We are learning R together with RStudio because it offers the power of a programming language with the comfort of an Integrated Development Environment.

Something else to start us off is to mention that you are learning a new language here. It’s an ongoing process, it takes time, you’ll make mistakes, it can be frustrating, but it will be overwhelmingly awesome in the long run. We all speak at least one language; it’s a similar process, really. And no matter how fluent you are, you’ll always be learning, you’ll be trying things in new contexts, learning words that mean the same as others, etc, just like everybody else. And just like any form of communication, there will be miscommunications that can be frustrating, but hands down we are all better off because of it.

While language is a familiar concept, programming languages are in a different context from spoken languages, but you will get to know this context with time. For example: you have a concept that there is a first meal of the day, and there is a name for that: in English it’s “breakfast”. So if you’re learning Spanish, you could expect there is a word for this concept of a first meal. (And you’d be right: ‘desayuno’). We will get you to expect that programming languages also have words (called functions in R) for concepts as well. You’ll soon expect that there is a way to order values numerically. Or alphabetically. Or search for patterns in text. Or calculate the median. Or reorganize columns to rows. Or subset exactly what you want. We will get you increase your expectations and learn to ask and find what you’re looking for.

2. A quick touR

2.1 RStudio panes

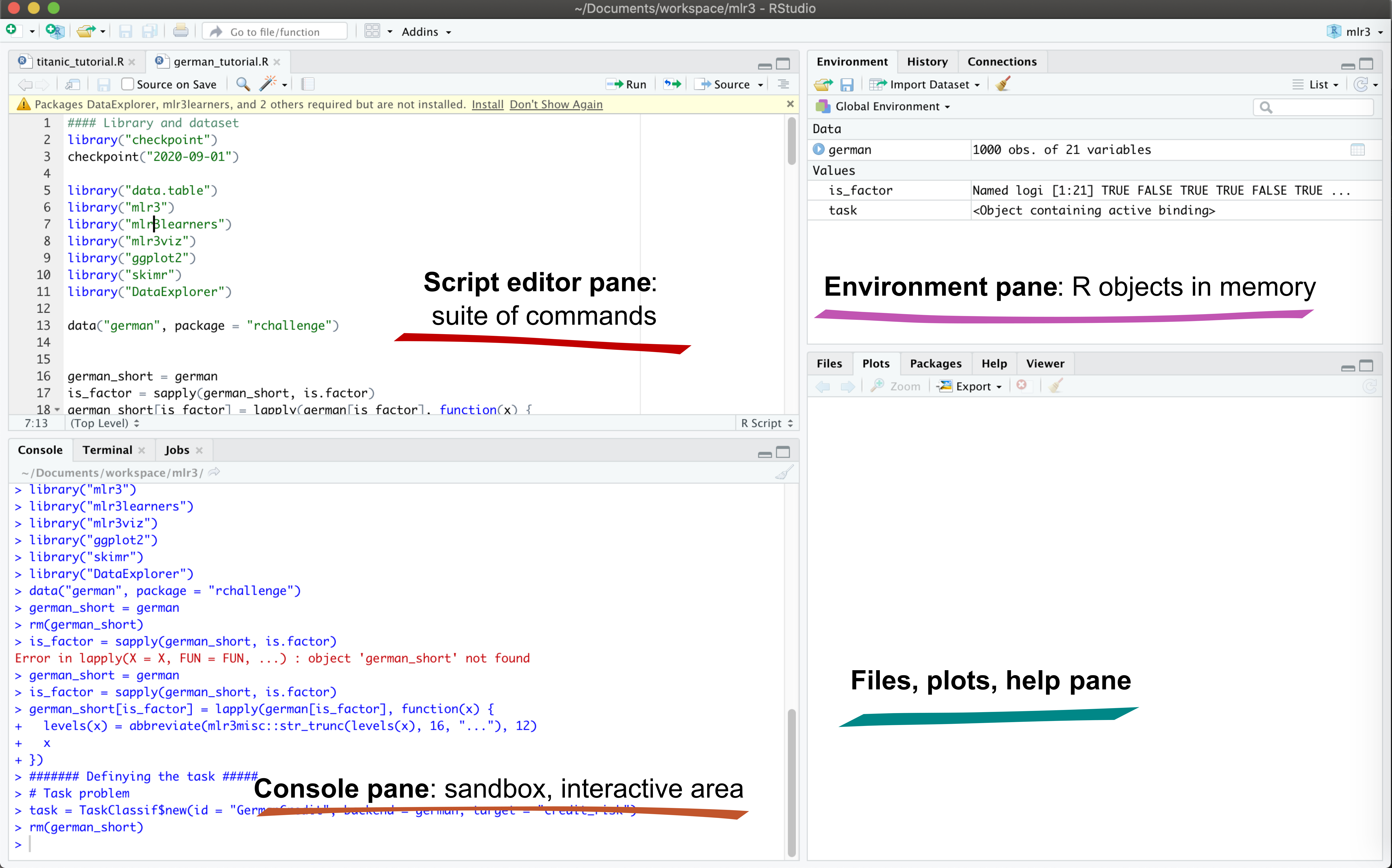

Like a medieval window, RStudio has several panes (sections that divide the entire window).

Launch RStudio/R and identify the different panes.

Notice the default panels:

- Script editor panel (upper left)

- Console (lower right)

- Environment/History (tabbed in upper right)

- Files/Plots/Packages/Help (tabbed in lower right)

Customizing RStudio appearance

You can change the default location of the panes, among many other things: Customizing RStudio.

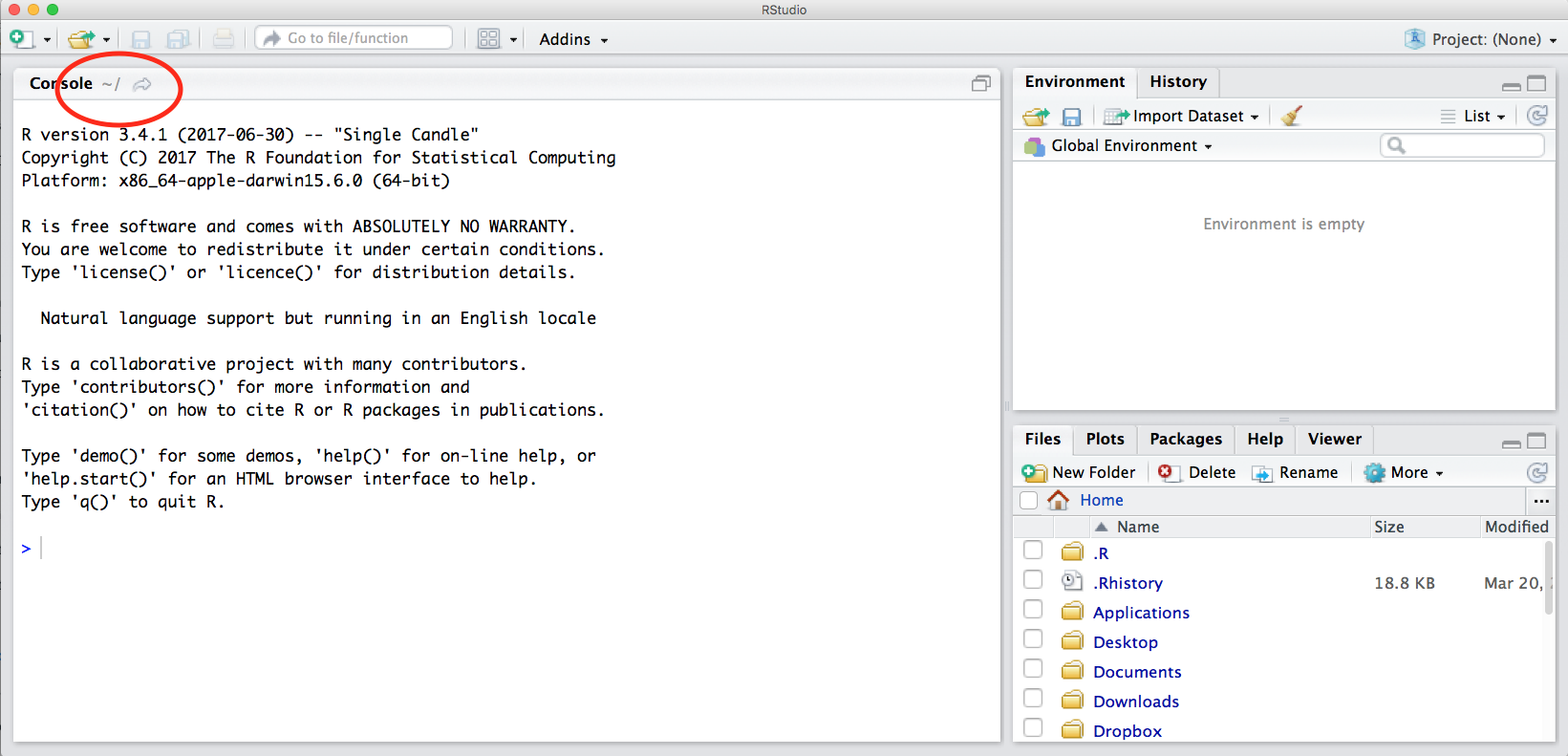

2.2 Locating yourself

An important first question: where are we inside the computer file system?

If you’ve have opened RStudio for the first time, you’ll be in your home directory. This is noted by the ~/ at the top of the console. You can see too that the Files pane in the lower right shows what is in the home directory where you are. You can navigate around within that Files pane and explore, but note that you won’t change where you are: even as you click through you’ll still be Home: ~/.

2.3 First step in the console

OK let’s go into the Console, where we interact with the live R process.

Make an assignment and then inspect the object you created by typing its name on its own.

x <- 3 * 4

x

In my head, I hear e.g., “x gets 12”.

All R statements where you create objects – “assignments” – have this form: objectName <- value.

I’ll write it in the console with a hashtag #, which is the way R comments so it won’t be evaluated.

## objectName <- value

## This is also how you write notes in your code to explain what you are doing.

Object names cannot start with a digit and cannot contain certain other characters such as a comma or a space. You will be wise to adopt a convention for demarcating words in names.

# i_use_snake_case

# other.people.use.periods

# evenOthersUseCamelCase

Make an assignment

this_is_a_really_long_name <- 2.5

To inspect this variable, instead of typing it, we can press the up arrow key and call your command history, with the most recent commands first. Let’s do that, and then delete the assignment:

this_is_a_really_long_name

Another way to inspect this variable is to begin typing this_…and RStudio will automagically have suggested completions for you that you can select by hitting the tab key, then press return.

One more:

science_rocks <- "yes it does!"

You can see that we can assign an object to be a word, not a number. In R, this is called a “string”, and R knows it’s a word and not a number because it has quotes " ". You can work with strings in your data in R pretty easily, thanks to the stringr and tidytext packages. We won’t talk about strings very much specifically, but know that R can handle text, and it can work with text and numbers together (this is a huge benefit of using R).

Let’s try to inspect:

sciencerocks

# Error: object 'sciencerocks' not found

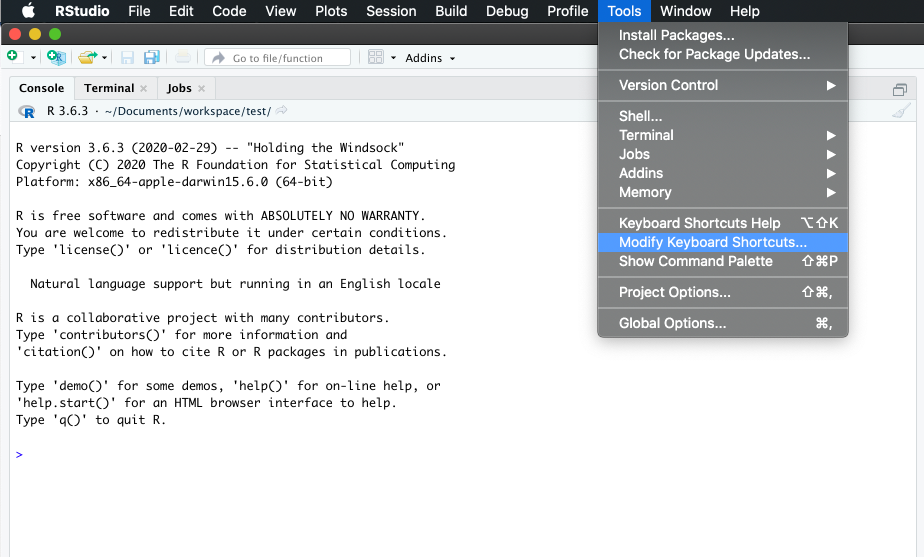

2.4 Make your life easier with keyboard shortcuts

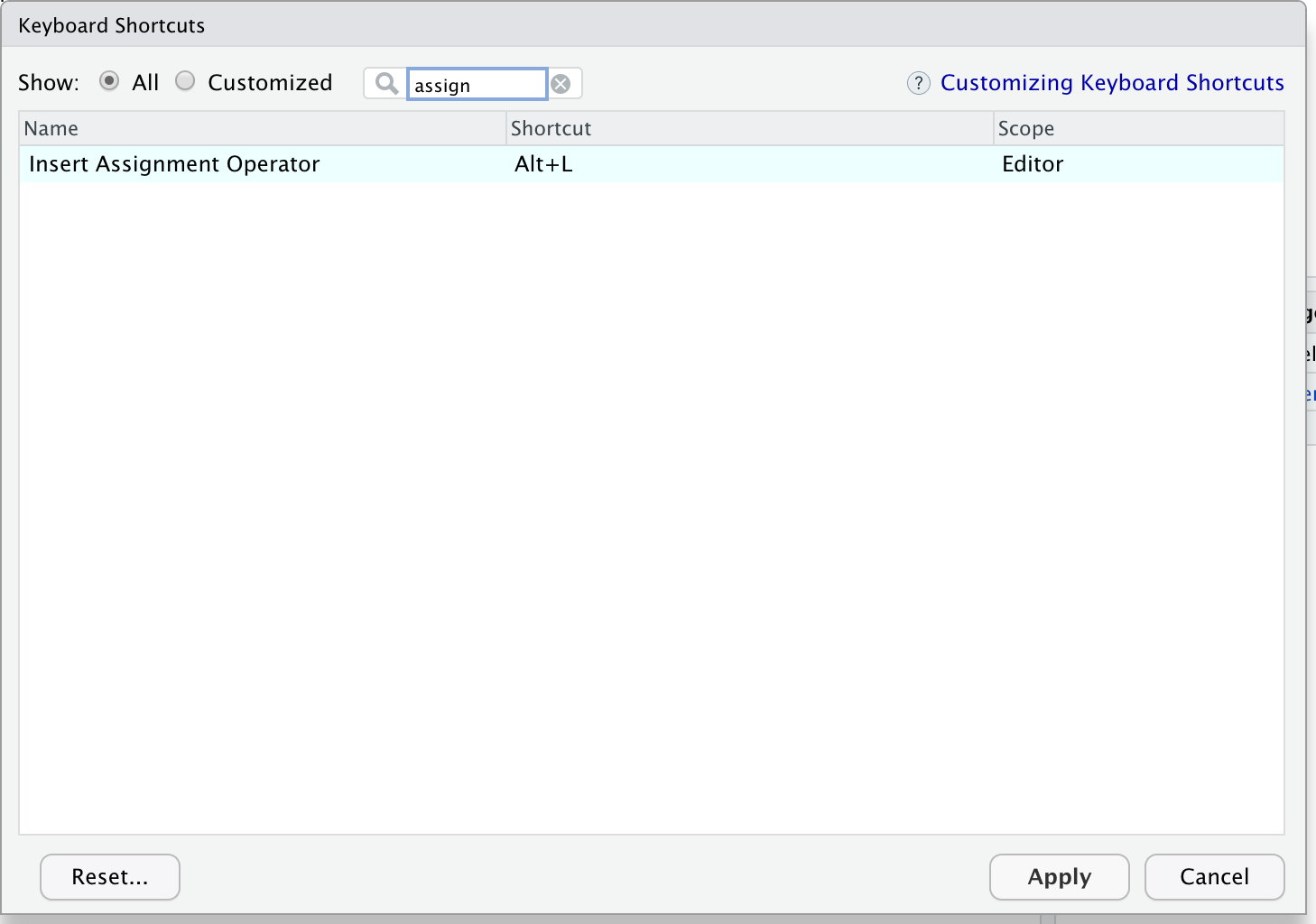

One can rapidly experience that typing the assign operator <- is laborious to type in the long run. Instead, we can create a keyboard shortcut to make our life easier.

With RStudio, this is relatively straightforward. Follow the screenshots to change the default to Alt + L for instance.

Go to “Tools” followed by “Modify Keyboard Shortcuts”:

Then in the “Filter” text box, type “assign” to find the current keyboard shortcut for the assign operator. Change it to Alt + L or any other convenient key combination.

Lovely keyboard shortcuts:

RStudio offers many handy keyboard shortcuts.

Also,Alt + Shift + Kbrings up a keyboard shortcut reference card.

2.5 Error messages are your friends

Implicit contract with the computer / scripting language: Computer will do tedious computation for you. In return, you will be completely precise in your instructions. Typos matter. Case matters. Pay attention to how you type.

Remember that this is a language, not unsimilar to English! There are times you aren’t understood – it’s going to happen. There are different ways this can happen. Sometimes you’ll get an error. This is like someone saying ‘What?’ or ‘Pardon’? Error messages can also be more useful, like when they say ‘I didn’t understand what you said, I was expecting you to say blah’. That is a great type of error message. Error messages are your friend. Google them (copy-and-paste!) to figure out what they mean.

And also know that there are errors that can creep in more subtly, when you are giving information that is understood, but not in the way you meant. Like if I am telling a story about suspenders that my British friend hears but silently interprets in a very different way (true story). This can leave me thinking I’ve gotten something across that the listener (or R) might silently interpreted very differently. And as I continue telling my story you get more and more confused… Clear communication is critical when you code: write clean, well documented code and check your work as you go to minimize these circumstances!

2.6 Logical operators and expressions

A moment about logical operators and expressions. We can ask questions about the objects we made.

==means ‘is equal to’!=means ‘is not equal to’<means ‘is less than’>means ‘is greater than’<=means ‘is less than or equal to’>=means ‘is greater than or equal to’

x == 2

x <= 30

x != 5

2.7 Variable assignment

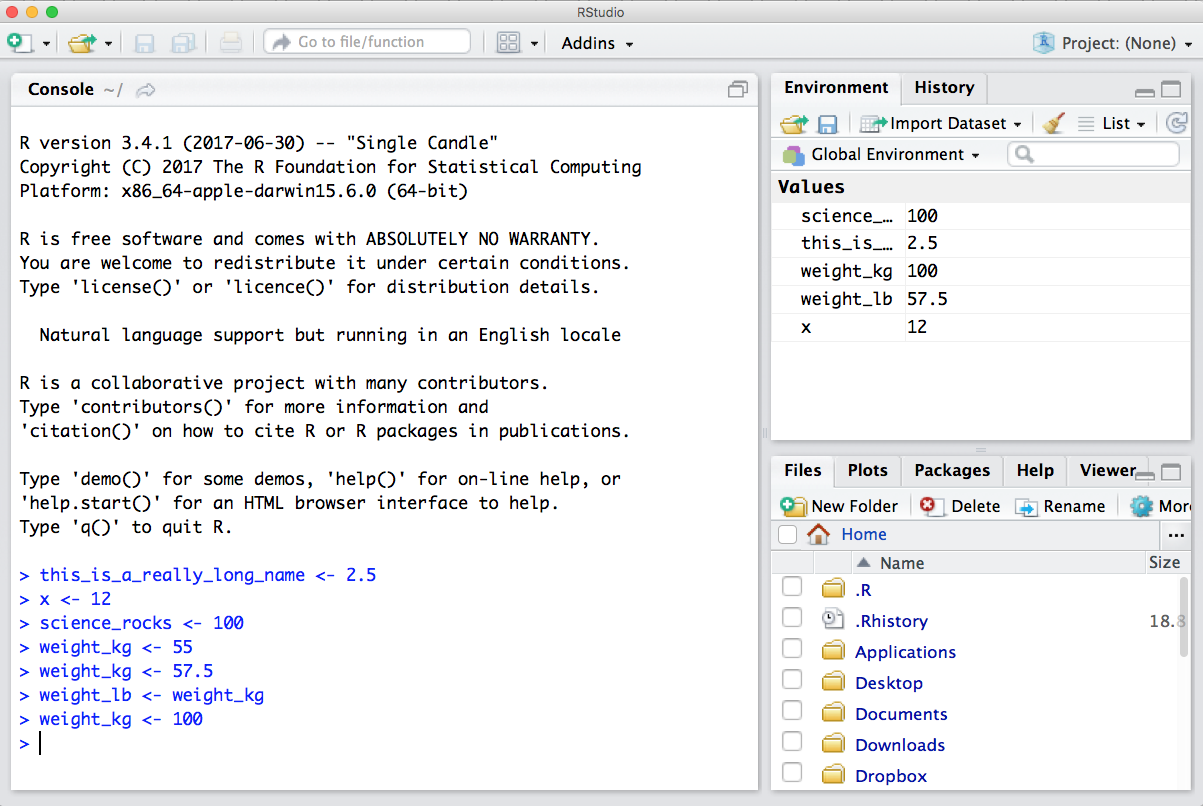

Let’s assign a number to a variable called weight_kg.

weight_kg <- 55 # doesn't print anything

(weight_kg <- 55) # but putting parenthesis around the call prints the value of `weight_kg`

weight_kg # and so does typing the name of the object

When assigning a value to an object, R does not print anything. You can force R to print the value by using parentheses or by typing the object name:

Now that R has weight_kg in memory, we can do arithmetic with it. For

instance, we may want to convert this weight into pounds (weight in pounds is 2.2 times the weight in kg):

weight_kg * 2.2

We can also change a variable’s value by assigning it a new one:

weight_kg <- 57.5

weight_kg * 2.2

And when we multiply it by 2.2, the outcome is based on the value currently assigned to the variable.

OK, let’s store the animal’s weight in pounds in a new variable, weight_lb:

weight_lb <- weight_kg * 2.2

and then change weight_kg to 100.

weight_kg <- 100

What do you think is the current content of the object weight_lb? 126.5 or 220? Why?

It’s 125.6. Why? Because assigning a value to one variable does not change the values of

other variables — if you want weight_kg updated to reflect the new value for weight_lb, you will have to re-execute that code. This is why we re-comment working in scripts and documents rather than the Console, and will introduce those concepts shortly and work there for the rest of the day.

We can create a vector of multiple values using c().

c(weight_lb, weight_kg)

names <- c("Jamie", "Melanie", "Julie")

names

Exercise

- Create a vector that contains the different weights of four fish (you pick the object name!):

- one fish: 12 kg

- two fish: 34 kg

- red fish: 20 kg

- blue fish: 6.6 kg

- Convert the vector of kilos to pounds (hint: 1 kg = 2.2 pounds).

- Calculate the total weight.

Solution

# Q1 fish_weights <- c(12, 34, 20, 6.6) # Q2 fish_weights_lb <- fish_weights * 2.2 # Q3 # we haven't gone over functions like `sum()` yet but this is covered in the next section. sum(fish_weights_lb)

3. Diving deepeR

3.1 Functions and help pages

R has a mind-blowing collection of built-in functions that are used with the same syntax: function name with parentheses around what the function needs to do what it is supposed to do.

function_name(argument1 = value1, argument2 = value2, ...). When you see this syntax, we say we are “calling the function”.

Let’s try using seq() which makes regular sequences of numbers and, while we’re at it, demo more helpful features of RStudio.

Type se and hit TAB. A pop up shows you possible completions. Specify seq() by typing more to disambiguate or using the up/down arrows to select. Notice the floating tool-tip-type help that pops up, reminding you of a function’s arguments. If you want even more help, press F1 as directed to get the full documentation in the help tab of the lower right pane.

Type the arguments 1, 10 and hit return.

seq(1, 10)

We could probably infer that the seq() function makes a sequence, but let’s learn for sure. Type (and you can autocomplete) and let’s explore the help page:

?seq

help(seq) # same as ?seq

Help page

The help page tells the name of the package in the top left, and broken down into sections:

- Description: An extended description of what the function does.

- Usage: The arguments of the function and their default values.

- Arguments: An explanation of the data each argument is expecting.

- Details: Any important details to be aware of.

- Value: The data the function returns.

- See Also: Any related functions you might find useful.

- Examples: Some examples for how to use the function.

seq(from = 1, to = 10) # same as seq(1, 10); R assumes by position

seq(from = 1, to = 10, by = 2)

The above also demonstrates something about how R resolves function arguments. You can always specify in name = value form. But if you do not, R attempts to resolve by position. So above, it is assumed that we want a sequence from = 1 that goes to = 10. Since we didn’t specify step size, the default value of by in the function definition is used, which ends up being 1 in this case. For functions I call often, I might use this resolve by position for the first

argument or maybe the first two. After that, I always use name = value.

The examples from the help pages can be copy-pasted into the console for you to understand what’s going on. Remember we were talking about expecting there to be a function for something you want to do? Let’s try it.

Exercise

Talk to your neighbor(s) and look up the help file for a function that you know or expect to exist. Here are some ideas:

?getwd()?plot()min()max()?mean()?log())Solution

- Gets and prints the current working directory.

- Plotting function.

- Minimum value in a vector or dataframe column.

- Maximum value in a vector or dataframe column.

- Geometric mean (average) of a vector or dataframe column. Generic function for the (trimmed) arithmetic mean.

- Logarithm function. Specific functions exist for log2 and log10 calculations.

And there’s also help for when you only sort of remember the function name: double-question mark:

??install

Not all functions have (or require) arguments:

date()

3.2 Packages

So far we’ve been using a couple functions from base R, such as seq() and date(). But, one of the amazing things about R is that a vast user community is always creating new functions and packages that expand R’s capabilities. In R, the fundamental unit of shareable code is the package. A package bundles together code, data, documentation, and tests, and is easy to share with others. They increase the power of R by improving existing base R functionalities, or by adding new ones.

The traditional place to download packages is from CRAN, the Comprehensive R Archive Network, which is where you downloaded R. You can also install packages from GitHub, which we’ll do tomorrow.

You don’t need to go to CRAN’s website to install packages, this can be accomplished within R using the command install.packages("package-name-in-quotes"). Let’s install a small, fun package praise. You need to use quotes around the package name.:

install.packages("praise")

Now we’ve installed the package, but we need to tell R that we are going to use the functions within the praise package. We do this by using the function library().

What’s the difference between a package and a library?

Sometimes there is a confusion between a package and a library, and you can find people calling “libraries” to packages.

Please don’t get confused: library() is the command used to load a package, and it refers to the place where the package is contained, usually a folder on your computer, while a package is the collection of functions bundled conveniently.

library(praise)

Now that we’ve loaded the praise package, we can use the single function in the package, praise(), which returns a randomized praise to make you feel better.

praise()

3.3 Clearing the environment

Now look at the objects in your environment (workspace) – in the upper right pane. The workspace is where user-defined objects accumulate.

You can also get a listing of these objects with a few different R commands:

objects()

ls()

If you want to remove the object named weight_kg, you can do this:

rm(weight_kg)

To remove everything:

rm(list = ls())

or click the broom 🧹 in RStudio Environment panel.

For reproducibility, it is critical that you delete your objects and restart your R session frequently. You don’t want your whole analysis to only work in whatever way you’ve been working right now — you need it to work next week, after you upgrade your operating system, etc. Restarting your R session will help you identify and account for anything you need for your analysis.

We will keep coming back to this theme but let’s restart our R session together: Go to the top menus: Session > Restart R.

Exercise

Clear your workspace and create a few new variables. Create a variable that is the mean of a sequence of 1-20.

- What’s a good name for your variable?

- Does it matter what your “by” argument is? Why?

Solution

- Any meaningful and relatively short name is good. As a suggestion

mean_seqcould work.- Yes it does. By default “by” is equal to 1 but it can be changed to any increment number.

4. R Markdown notebook

R Markdown will allow you to create your own workflow, save it and generate a high quality report that you can share. It supports collaboration and reproducibility of your work. This is really key for collaborative research, so we’re going to get started with it early and then use it for the rest of the day.

Literate programming

More generally, the mixture of code, documentation (conclusion, comments) and figures in a notebook is part of the so-called “literate programming” paradigm (Donald Knuth, 1984). Your code and logical steps should be understandable for human beings. In particular these four tips are related to this paradigm:

- Do not write your program only for R but think also of code readers (that includes you).

- Focus on the logic of your workflow. Describe it in plain language (e.g. English) to explain the steps and why you are doing them.

- Explain the “why” and not the “how”.

- Create a report from your analysis using a R Markdown notebook to wrap together the data + code + text.

4.1 R Markdown video (1-minute)

What is R Markdown? from RStudio, Inc. on Vimeo.

A minute long introduction to R Markdown

This is also going to introduce us to the fact that RStudio is a sophisticated text editor (among all the other awesome things). You can use it to keep your files and scripts organized within one place (the RStudio IDE) while getting support that you expect from text editors (check-spelling and color, to name a few).

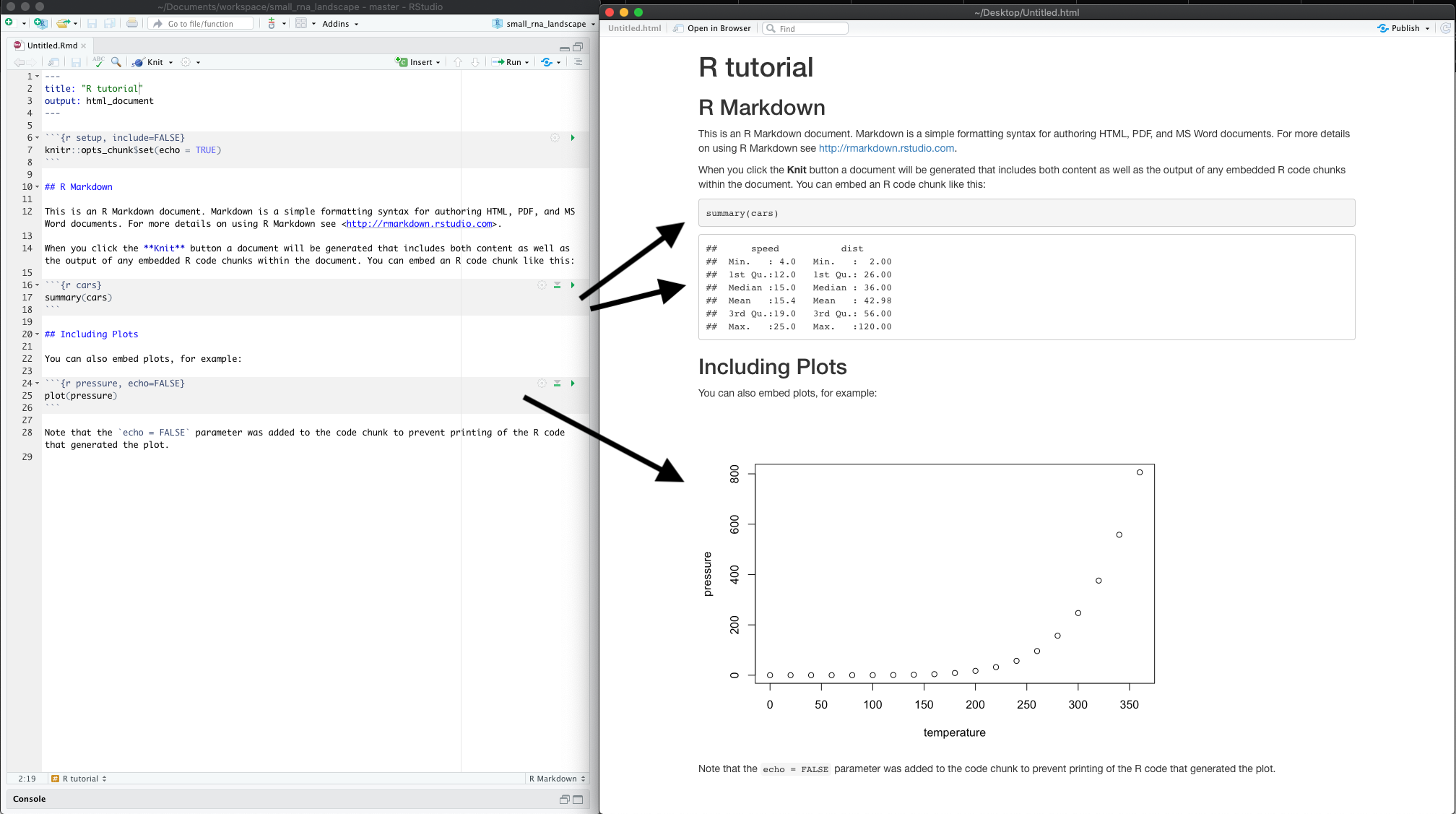

An R Markdown file will allow us to weave markdown text with chunks of R code to be evaluated and output content like tables and plots.

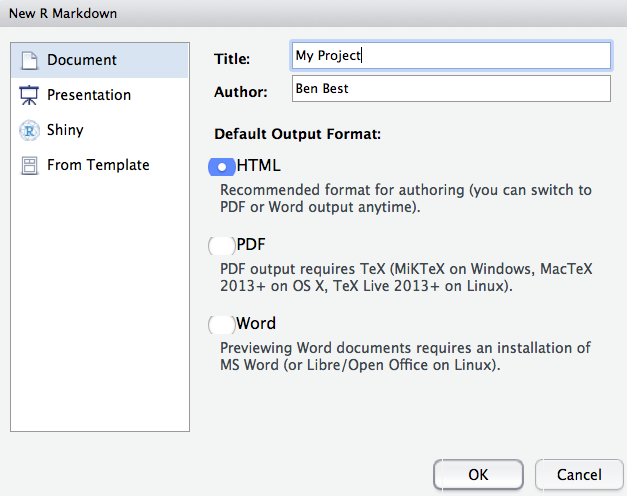

4.2 Create a R Markdown document

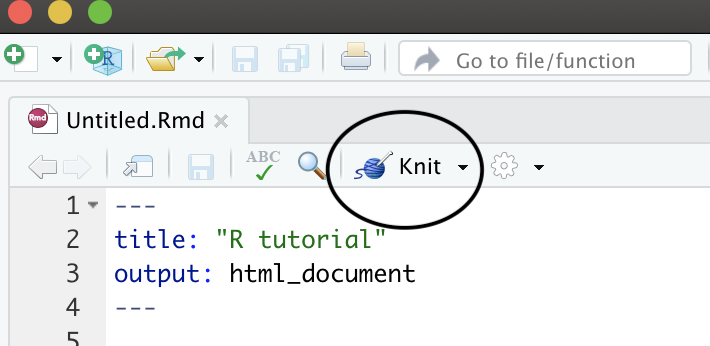

To do so, go to: File -> New File -> R Markdown… -> Document of output format HTML -> click OK.

You can give it a Title like “R tutorial”. Then click OK.

Let’s have a look at this file — it’s not blank; there is some initial text is already provided for you. You can already notice a few parts:

- A document YAML header,

- Usually many different code chunks,

- and formatted text and various outputs (figures, tables)

4.3 The YAML header

The header of your R Markdown document will allow you to personalize the related report from your R Markdown document.

The header follows the YAML syntax (“YAML Ain’t Markup Language”) which usually follows a key:value syntax.

A few YAML parameters are all you need to know to start using R Markdown. Here is an opinionated list of the key parameters:

---

- title: "R tutorial"

- output: html_document

- author: "John Doe"

- date: "Tuesday, February 15 2021"

---

The three dashes --- before and after the option: value are important to delimit the YAML header. Do not forget them!

A note on output format: if you search online, you will find tons of potential output formats available from one R Markdown document. Some of them require additional packages or software installation. For instance, compiling your document to produce a PDF will require LaTeX libraries etc.

Exercise

Open the output formats of the R Markdown definitive guide: https://bookdown.org/yihui/rmarkdown/output-formats.html.

Instead ofoutput: html_document, specifypdf_documentto compile into a PDF (because it is easier to share for instance).

Press the knit button. Is it working? If not, what is missing?

For PDF, you might need to install a distribution of LaTeX for which several options exist. The recommended one is to install TinyTeX from Yihui Xie. Other more comprehensive LaTeX distributions can be obtained from the LaTeX project directly for your OS.

If you feel adventurous, you can try other formats. There are many things you can generate from a R Markdown document even slides for a presentation.

Exercise

Instead of hard-coding the date in the YAML section, search online for a way to dynamically have the today’s date.

Solution

In the YAML header, write:

date: r Sys.Date()

This will add today’s date in the YYYY-MM-DD format when compiling.

More generally, you can use the syntax option: r <some R command> to have options automatically updated by some R command when

compiling your R Markdown notebook into a report.

4.4 Code chunks

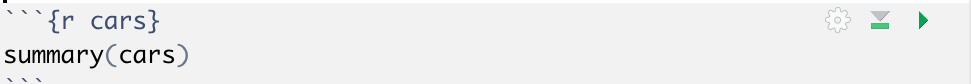

Code chunks appear in grey and will execute the R code when you compile the document.

The following chunk will create a summary of the cars dataframe.

A code chunk is defined by three backticks ```{r} before curly braces with r inside to indicate the coding language.

It is closed by three backticks ```.

```{r}

summary(cars)

```

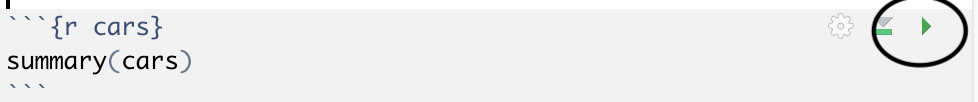

The code chunk will be executed when compiling the report. You can also run it by clicking on the green arrow.

To insert a new code chunk, you can either:

- Use a keyboard shortcut:

Ctrl + Alt + I: to add a code chunk. UseCmd + Alt + Ion Mac OS. - Click on “Add chunk in the toolbar. “

- Place two code chunk:

```{r}to open the code chunk and```to close it.

Exercise

Introduce a new code chunk to produce a histogram of the cars speed.

Compile your R Markdown document and visualise the results.

In the final document, can you find a way to hide the code chunk that generates the plot?Solution

Add a new code chunk:

```{r} hist(cars$speed) ```Inside the curly braces, add:

```{r, echo = FALSE} hist(cars$speed) ```

4.5 Text markdown syntax

You might wonder what the “markdown” in R Markdown stands for.

Between code chunks, you can write normal plain text to comment figures and code outputs. To format titles, paragraphs, format text in italics, etc. you can make use of the markdown syntax that is a simple but efficient method to format text. Altogether, it means that a R Markdown document has 2 different languages within it: R and Markdown.

Markdown is a formatting language for plain text, and there are only about 15 rules to know.

Have a look at your own document. Notice the syntax for:

- headers get rendered at multiple levels:

#,## - bold:

**word** - Web links:

<http://rmarkdown.rstudio.com>or[http://rmarkdown.rstudio.com](http://rmarkdown.rstudio.com).. - In line code: see the

echo = FALSE

There are some good cheatsheets to get you started, and here is one built into RStudio: Go to Help > Markdown Quick Reference

Exercise

In Markdown:

- Format text in italics,

- Make a numbered list,

- Add a web link to the RStudio website in your document,

- Add a “this is a subheader” subheader with the level 2 or 3.

Reknit your document.Solution

- Add one asterisk or one underscore on both sides of the text.

- To make a numbered list, write

1.then add a line and write a second2..- Place the link between squared brackets. RStudio link

- Subheaders can be written with

###or##depending on the level that you want to write.

A complete but short guide on Markdown syntax from Yihui Xie is available here.

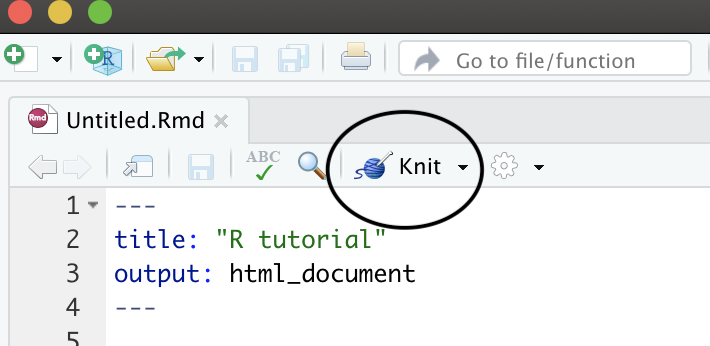

4.6 Compile your R Markdown document

Now that we are all set, we can compile the document to generate the corresponding HTML document. Press the “Knit” button.

This will compile your R Markdown document and open a new window.

What do you notice between the two? So much of learning to code is looking for patterns.

Notice how the grey R code chunks are surrounded by 3 backticks and {r LABEL}. These are evaluated and return the output text in the case of summary(cars) and the output plot in the case of plot(pressure).

Notice how the code plot(pressure) is not shown in the HTML output because of the R code chunk option echo=FALSE.

Compiling takes place in a separate R workspace

When compiling, you will be redirected to the R Markdown tab next to your Console. This is normal as your R Markdown document is compiled in a separate new R workspace.

4.7 Useful tips and common issues

Here is a list of useful keyboard shortcuts:

Useful shortcuts

Place the cursor in the script editor pane. Then type:

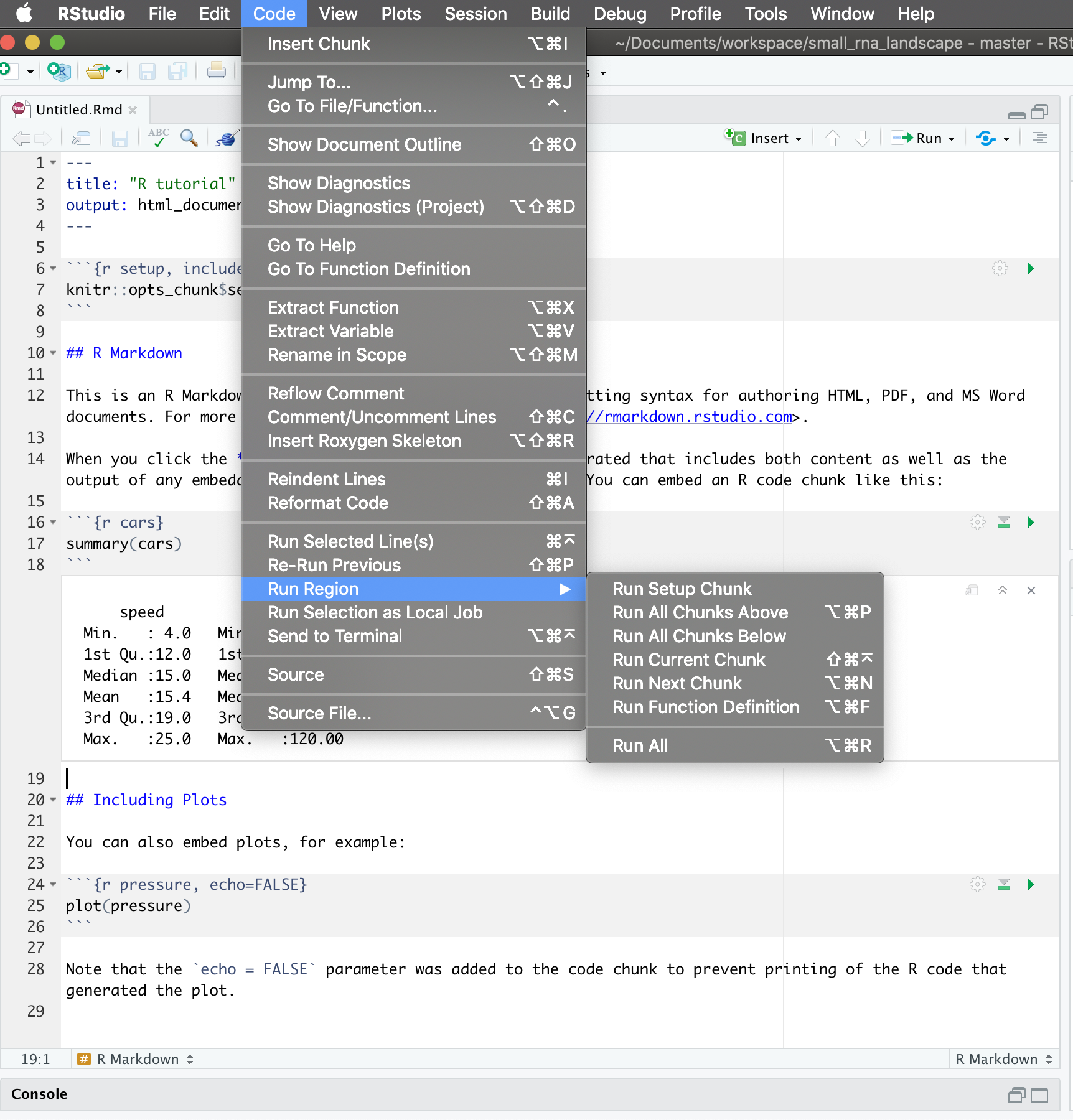

Ctrl + Alt + I: to add a code chunk.Ctrl + Shift + K: compile the R Markdown document to create the related output.Ctrl + Alt + Cto run the current code chunk (your cursor has to be inside a code chunk).Ctrl + Alt + RFor Mac OS users, replace

CtrlwithCmd(Command).

All these shortcuts can be seen in Code > Run Region > …

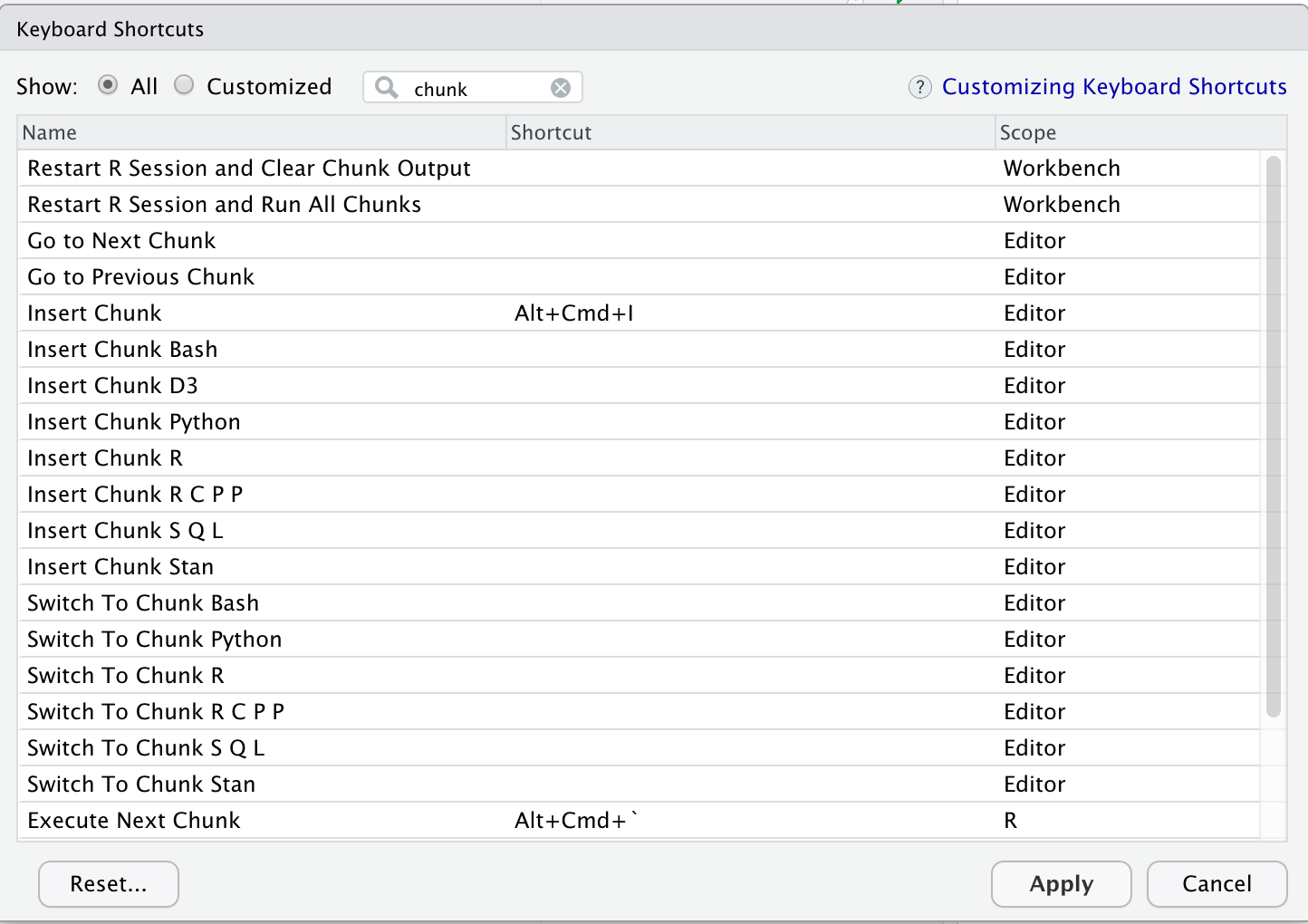

As seen before, you can modify these shortcuts to anything you find convenient: Tools > Modify keyboard shortcuts.

Type “chunk” to filter the shortcuts for code chunks.

Common issues

Separate workspace when compiling When you compile your R Markdown document, it will start from a clean R workspace. Anything you have in your current R interactive session will not be available in the R Markdown tab.

This is often the source of bugs and halting

Exercise

Step 1: In the R console, type:

library(dplyr) tooth_filtered <- dplyr::filter(ToothGrowth, len > 1)You should see the

tooth_filteredR object in your current environment.Step 2: in your R Markdown document, add this line:

with(tooth_filtered, hist(x = len, col = "darkgrey"))Try to knit your document. What bug do you experience?

Solution

Since your R Markdown workspace starts from scratch and creates a new environment, it ignores the

tooth_filteredobject you created in your R console.

The solution is to add thetooth_filtered <- dplyr::filter(ToothGrowth, len > 1)line inside a code chunk.

5. Import your own data

5.1 Functions available

To import your own data, you can use different functions depending on your input format:

read.tableis the generic function to import from various format. You do have to specify the separator as it is not known by default (tabulation or comma for instance).read.csvto import a table with comma-separated values (my_file.csv). You don’t have to specify the separator as it is by default a comma.read.delimto import a table with tabulated-separated values (my_file.tsvormy_file.txt). You don’t have to specify the separator as it is by default a tabulation.

Some important parameters in data import functions:

stringsAsFactors = TRUEis by default converting your characters into factors. This can be an issue for plotting for instance. I recommend to turn it off (stringsAsFactors = FALSEand change your strings to factors explicitely later on usingfactor()for instance.check.names = TRUEis by default checking your column names. For instance, if your column names start with a number, then R will prepend anXbefore your column variable name. To avoid this, addcheck.names = FALSE.

5.2 Important tips

Taken from Anna Krystalli workshop:

read.csv

read.csv(file,

na.strings = c("NA", "-999"),

strip.white = TRUE,

blank.lines.skip = TRUE,

fileEncoding = "mac")

na.string: character vector of values to be coded missing and replaced with NA to argument egstrip.white: Logical. if TRUE strips leading and trailing white space from unquoted character fieldsblank.lines.skip: Logical: if TRUE blank lines in the input are ignored.fileEncoding: if you’re getting funny characters, you probably need to specify the correct encoding.

5.2 Large tables

If you have very large tables (1000s of rows and/or columns), use the fread() function from the data.table package.

6. Credits and additional resources

6.1 Jenny Bryan

- Stat 545 University module: https://stat545.com/

- Main website: https://jennybryan.org/

6.2 RStudio materials

- The official RStudio R Markdown documentation: https://rmarkdown.rstudio.com/

- The RStudio R Markdown cheatsheet

6.3 The definitive R Markdown guide

“The R Markdown definitive guide” by Yihui Xie, J. J. Allaire and Garrett Grolemund: https://bookdown.org/yihui/rmarkdown/

6.4 Others

- Remedy: additional functionalities for markdown in RStudio: https://thinkr-open.github.io/remedy/

- R Markdown Crash Course: a very complete course on R Markdown. https://zsmith27.github.io/rmarkdown_crash-course/

Key Points

R and RStudio make a powerful duo to create R scripts and R Markdown notebooks.

RStudio offers a text editor, a console and some extra features (environment, files, etc.).

R is a functional programming language: everything resolves around functions.

R Markdown notebook support code execution, report creation and reproducibility of your work.

Literate programming is a paradigm to combine code and text so that it remains understandable to humans, not only to machines.

Visualizing data with ggplot2

Overview

Teaching: 30 min

Exercises: 60 minQuestions

How can I make publication-grade plots with

ggplot2?What are the key concepts underlying

ggplot2plotting?What are some of the visualisations available through

ggplot2?How can I save my plot in a specific format (e.g. png)?

Objectives

Install the

ggplot2package by installing tidyverse.Learn basics of

ggplot2with several public datasets.Learn how to customize your plot efficiently (facets, geoms).

See how to use the stat functions to produce on-the-fly summary plots.

Table of Contents

- 1. Introduction

- 2. First plot with

ggplot2 - 3. Building your plots iteratively

- 4. Bar charts

- 5. Resources

1. Introduction

Why do we start with data visualization? Not only is data visualisation a big part of analysis, it’s a way to see your progress as you learn to code.

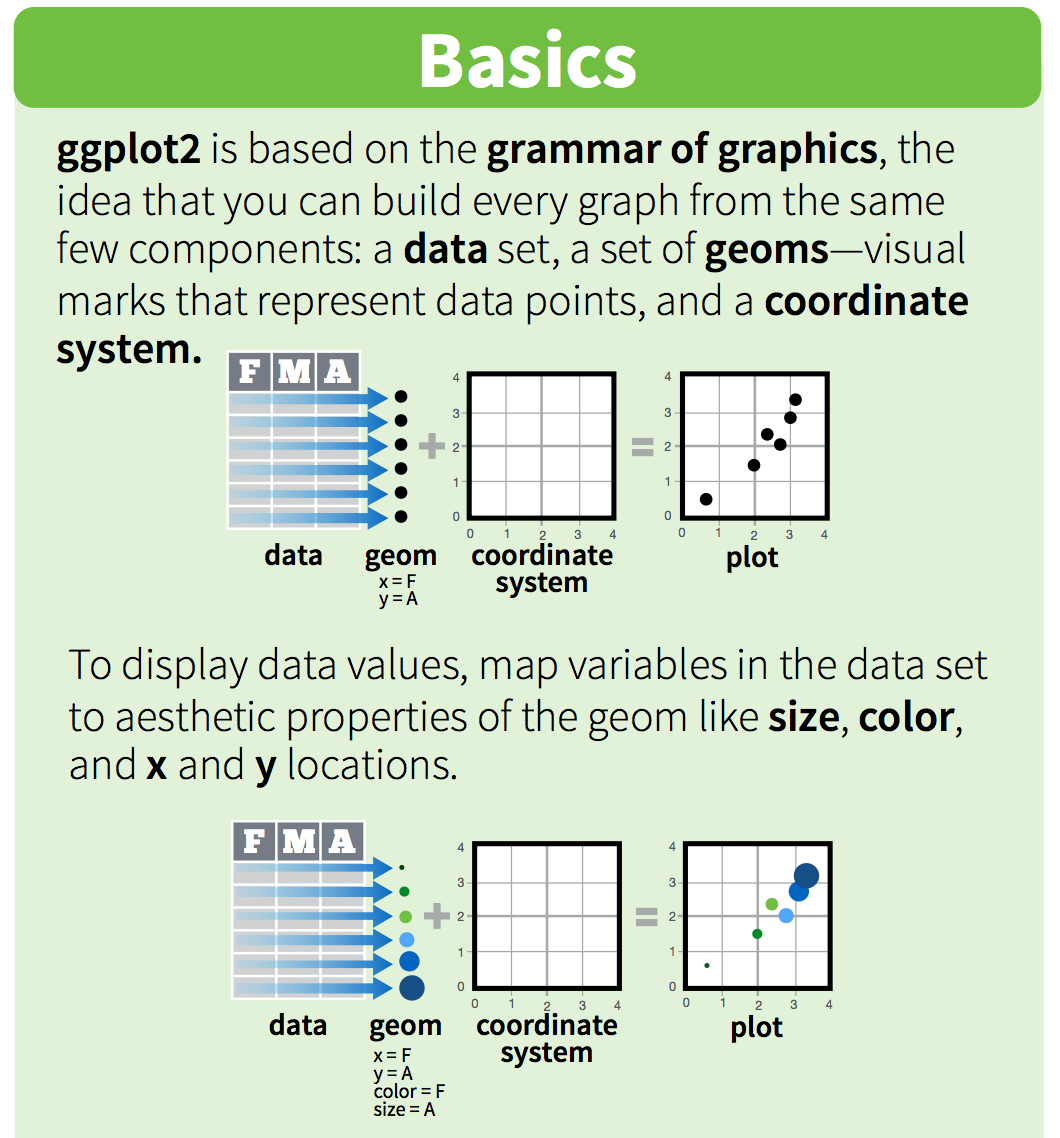

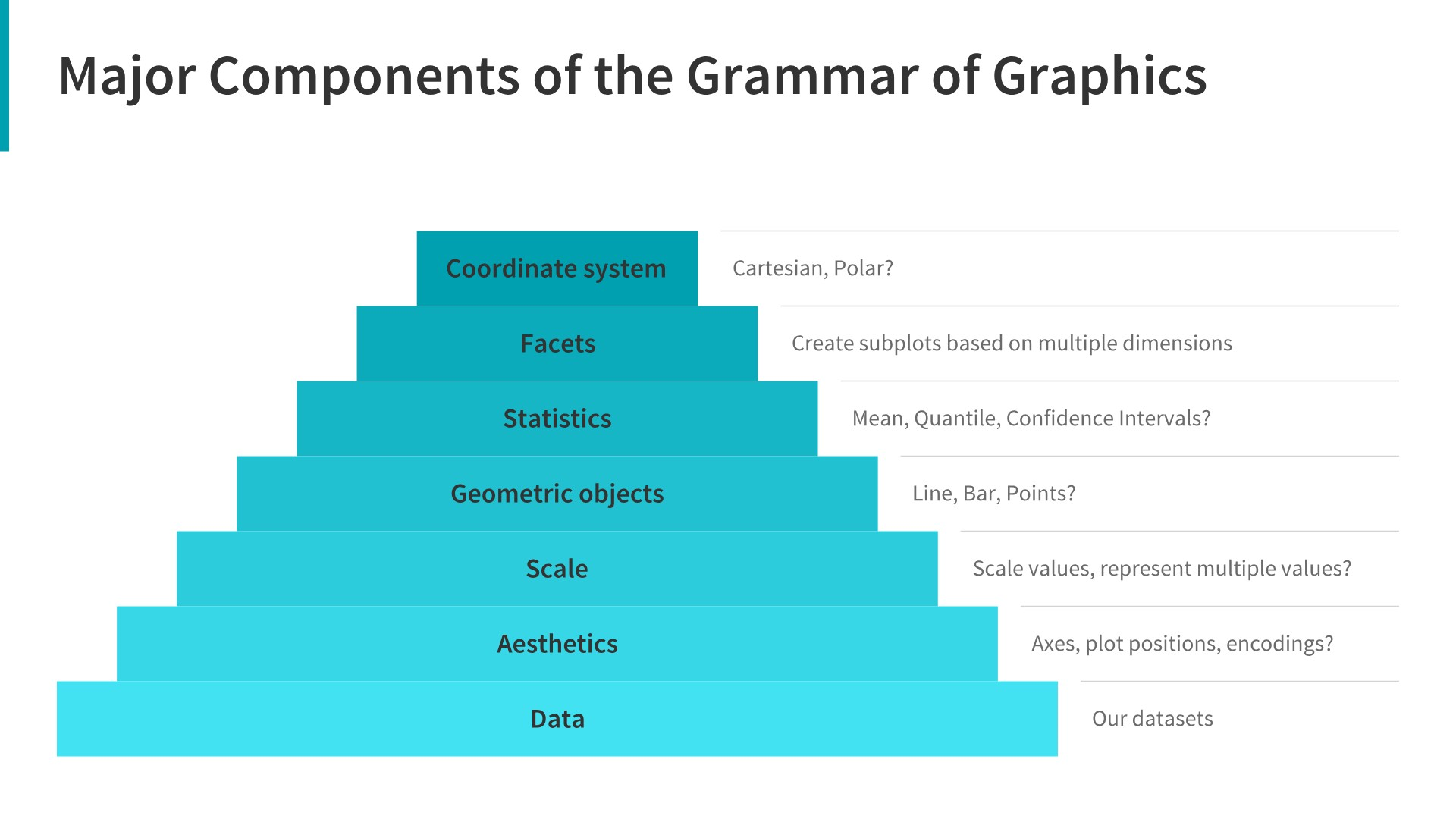

ggplot2implements the grammar of graphics, a coherent system for describing and building graphs. Withggplot2, you can do more faster by learning one system and applying it in many places. - Hadley Wickham, R for Data Science

This lesson borrows heavily from Hadley Wickham’s R for Data Science book, and an EcoDataScience lesson on Data Visualization.

1.1 Install our first package: tidyverse

Packages are bundles of functions, along with help pages and other goodies that make them easier for others to use, (ie. vignettes).

So far we’ve been using packages that are already included in base R. These can be considered out-of-the-box packages and include things such as sum and mean. You can also download and install packages created by the vast and growing R user community. The most traditional place to download packages is from CRAN, the Comprehensive R Archive Network. This is where you went to download R originally, and will go again to look for updates. You can also install packages directly from GitHub, which we’ll do tomorrow.

You don’t need to go to CRAN’s website to install packages, we can do it from within R with the command install.packages("package-name-in-quotes").

We are going to be using the package ggplot2, which is actually bundled into a huge package called tidyverse. We will install tidyverse now, and use a few functions from the packages within. Also, check out tidyverse.org/.

## from CRAN:

install.packages("tidyverse") ## do this once only to install the package on your computer.

library(tidyverse) ## do this every time you restart R and need it

When you do this, it will tell you which packages are inside of tidyverse that have also been installed. Note that there are a few name conflicts; it is alerting you that we’ll be using two functions from dplyr instead of the built-in stats package.

What’s the difference between install.packages() and library()? Why do you need both? Here’s an analogy:

install.packages()is setting up electricity for your house. Just need to do this once (let’s ignore monthly bills).library()is turning on the lights. You only turn them on when you need them, otherwise it wouldn’t be efficient. And when you quit R, it turns the lights off, but the electricity lines are still there. So when you come back, you’ll have to turn them on again withlibrary(), but you already have your electricity set up.

You can also install packages by going to the Packages tab in the bottom right pane. You can see the packages that you have installed (listed) and loaded (checkbox). You can also install packages using the install button, or check to see if any of your installed packages have updates available (update button). You can also click on the name of the package to see all the functions inside it — this is a super helpful feature that I use all the time.

1.2 Load national park datasets

Copy and paste the code chunk below and read it in to your RStudio to load the five datasets we will use in this section.

Important note

The

read_csv()function comes from thereadrpackage part of thetidyversesuite of packages. Make sure you’ve runlibrary(tidyverse)to load the datasets.

# National Parks in California

ca <- read_csv("https://raw.githubusercontent.com/carpentries-incubator/open-science-with-r/gh-pages/data/ca.csv")

# Acadia National Park

acadia <- read_csv("https://raw.githubusercontent.com/carpentries-incubator/open-science-with-r/gh-pages/data/acadia.csv")

# Southeast US National Parks

se <- read_csv("https://raw.githubusercontent.com/carpentries-incubator/open-science-with-r/gh-pages/data/se.csv")

# 2016 Visitation for all Pacific West National Parks

visit_16 <- read_csv("https://raw.githubusercontent.com/carpentries-incubator/open-science-with-r/gh-pages/data/visit_16.csv")

# All Nationally designated sites in Massachusetts

mass <- read_csv("https://raw.githubusercontent.com/carpentries-incubator/open-science-with-r/gh-pages/data/mass.csv")

2. First plot with ggplot2

ggplot2 is a plotting package that makes it simple to create complex plots from data in a data frame. It provides a more programmatic interface for specifying what variables to plot, how they are displayed, and general visual properties. Therefore, we only need minimal changes if the underlying data change or if we decide to change from a bar plot to a scatterplot. This helps in creating publication quality plots with minimal amounts of adjustments and tweaking.

ggplot likes data in the tidy (‘long’) format: i.e., a column for every dimension, and a row for every observation. Well structured data will save you lots of time when making figures with ggplot. We’ll learn more about tidy data in the next section.

ggplot graphics are built step by step by adding new elements. Adding layers in this fashion allows for extensive flexibility and customization of plots.

One can see it as a pyramid of layers too.

2.1 Data description

We are going to use a National Park visitation dataset (from the National Park Service at https://irma.nps.gov/Stats/SSRSReports). Read in the data using read_csv and take a look at the first few rows using head() or View().

head(ca)

This dataframe is already in a tidy format where all rows are an observation and all columns are variables. Among the variables in ca are:

-

region, US region where park is located. -

visitors, the annual visitation for eachyear

# A tibble: 789 x 7

region state code park_name type visitors year

<chr> <chr> <chr> <chr> <chr> <dbl> <dbl>

1 PW CA CHIS Channel Islands National Park National Park 1200 1963

2 PW CA CHIS Channel Islands National Park National Park 1500 1964

3 PW CA CHIS Channel Islands National Park National Park 1600 1965

4 PW CA CHIS Channel Islands National Park National Park 300 1966

5 PW CA CHIS Channel Islands National Park National Park 15700 1967

6 PW CA CHIS Channel Islands National Park National Park 31000 1968

7 PW CA CHIS Channel Islands National Park National Park 33100 1969

8 PW CA CHIS Channel Islands National Park National Park 32000 1970

9 PW CA CHIS Channel Islands National Park National Park 24400 1971

10 PW CA CHIS Channel Islands National Park National Park 31947 1972

# … with 779 more rows

2.2 Building a plot

To build a ggplot, we need to:

- use the

ggplot()function and bind the plot to a specific data frame using thedataargument.

# initiate the plot

ggplot(data=ca)

- add

geoms– graphical representation of the data in the plot (points, lines, bars).ggplot2offers many different geoms; we will use some common ones today, including:

*geom_point()for scatter plots, dot plots, etc.

*geom_bar()for bar charts

*geom_line()for trend lines, time-series, etc.

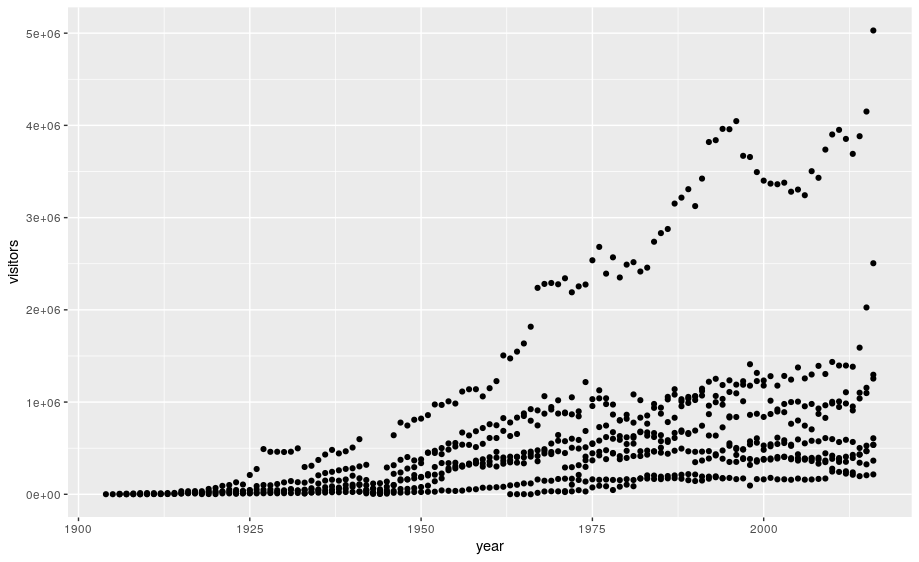

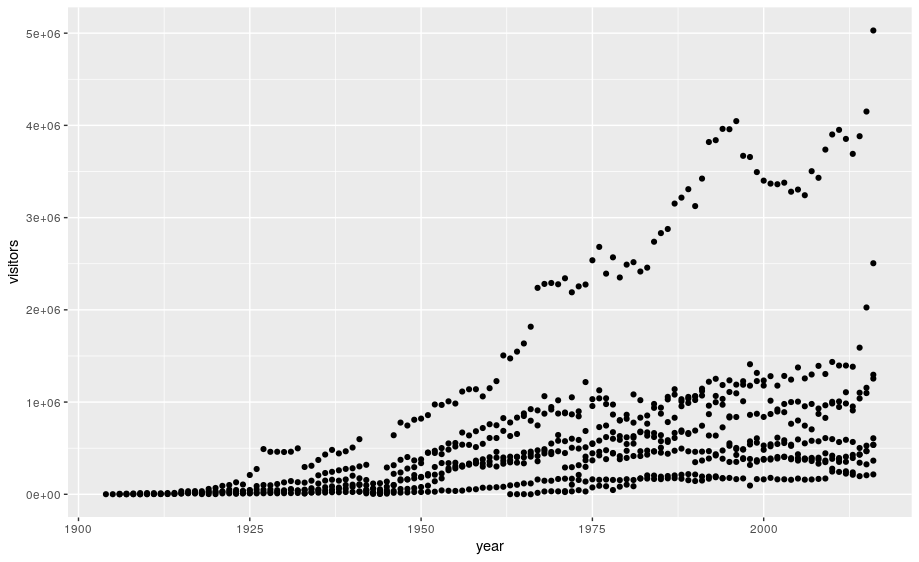

To add a geom to the plot use + operator. Because we have two continuous variables, let’s use geom_point() first and then assign x and y aesthetics (aes).

# add geoms

ggplot(data=ca) +

geom_point(aes(x = year,y = visitors))

Notes:

- Anything you put in the

ggplot()function can be seen by any geom layers that you add (i.e., these are universal plot settings). This includes the x and y axis you set up inaes(). - You can also specify aesthetics for a given geom independently of the

aesthetics defined globally in the

ggplot()function. - The

+sign used to add layers must be placed at the end of each line containing a layer. If, instead, the+sign is added in the line before the other layer,ggplot2will not add the new layer and will return an error message.

3. Building your plots iteratively

Building plots with ggplot is typically an iterative process. We start by defining the dataset we’ll use, lay the axes, and choose a geom:

ggplot(data = ca) +

geom_point(aes(x = year, y = visitors))

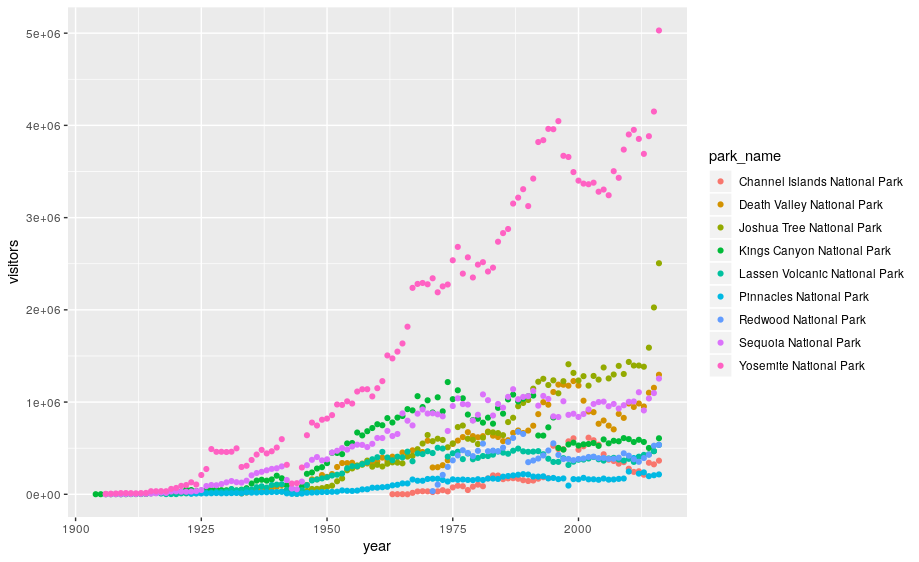

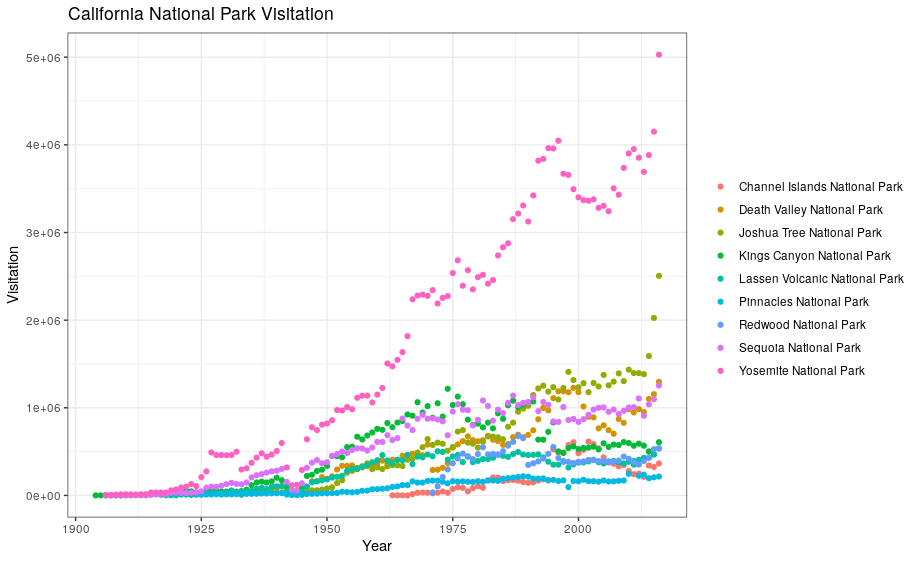

This isn’t necessarily a useful way to look at the data. We can distinguish each park by added the color argument to the aes:

ggplot(data=ca) +

geom_point(aes(x = year, y = visitors, color = park_name))

3.1 Customizing plots

Take a look at the ggplot2 cheat sheet, and think of ways you could improve the plot.

Now, let’s capitalize the x and y axis labels and add a main title to the figure. I also like to remove that standard gray background using a different theme. Many themes come built into the ggplot2 package. My preference is theme_bw() but once you start typing theme_ a list of options will pop up. The last thing I’m going to do is remove the legend title.

ggplot(data = ca) +

geom_point(aes(x = year, y = visitors, color = park_name)) +

labs(x = "Year",

y = "Visitation",

title = "California National Park Visitation") +

theme_bw() +

theme(legend.title=element_blank())

3.2 ggplot2 themes

In addition to theme_bw(), which changes the plot background to white, ggplot2 comes with several other themes which can be useful to quickly change the look of your visualization.

The ggthemes package provides a wide variety of options (including an Excel 2003 theme). The ggplot2 extensions website provides a list of packages that extend the capabilities of ggplot2, including additional themes.

Exercise

- Using the

sedataset, make a scatterplot showing visitation to all national parks in the Southeast region with color identifying individual parks.- Change the plot so that color indicates

state. Customize by adding your own title and theme. You can also change the text sizes and angles. Try applying a 45 degree angle to the x-axis. Use your cheatsheet!- In the following code, why isn’t the data showing up?

ggplot(data = se, aes(x = year, y = visitors))Solution

ggplot(data = se) + geom_point(aes(x = year, y = visitors, color = park_name)).- See the code below:

ggplot(data = se) + geom_point(aes(x = year, y = visitors, color = state)) +labs(x = "Year", y = "Visitation", title = "Southeast States National Park Visitation") +theme_light() + theme(legend.title = element_blank(), axis.text.x = element_text(angle = 45, hjust = 1, size = 14))- The code is missing a geom to describe how the data should be plotted.

3.3 Faceting

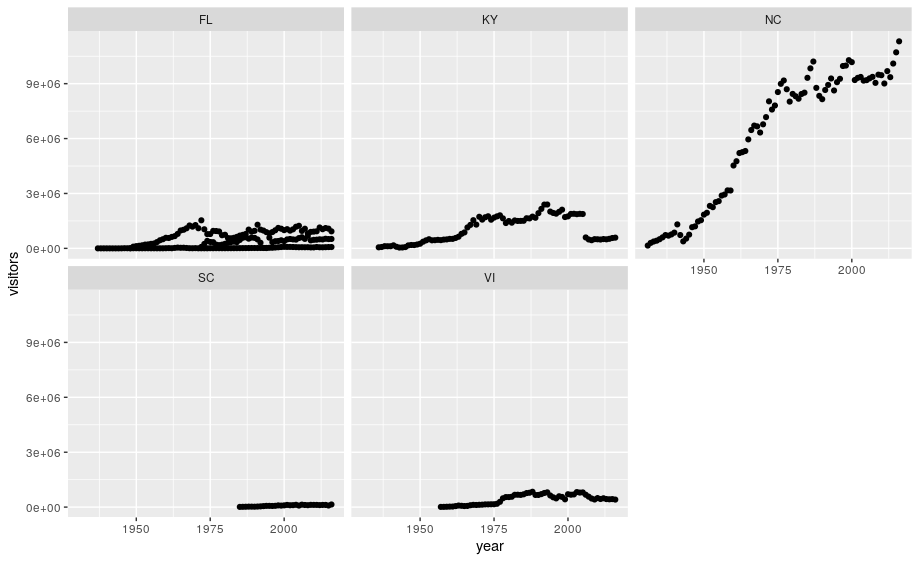

ggplot has a special technique called faceting that allows the user to split one plot into multiple plots based on data in the dataset. We will use it to make a plot of park visitation by state:

ggplot(data = se) +

geom_point(aes(x = year, y = visitors)) +

facet_wrap(~ state)

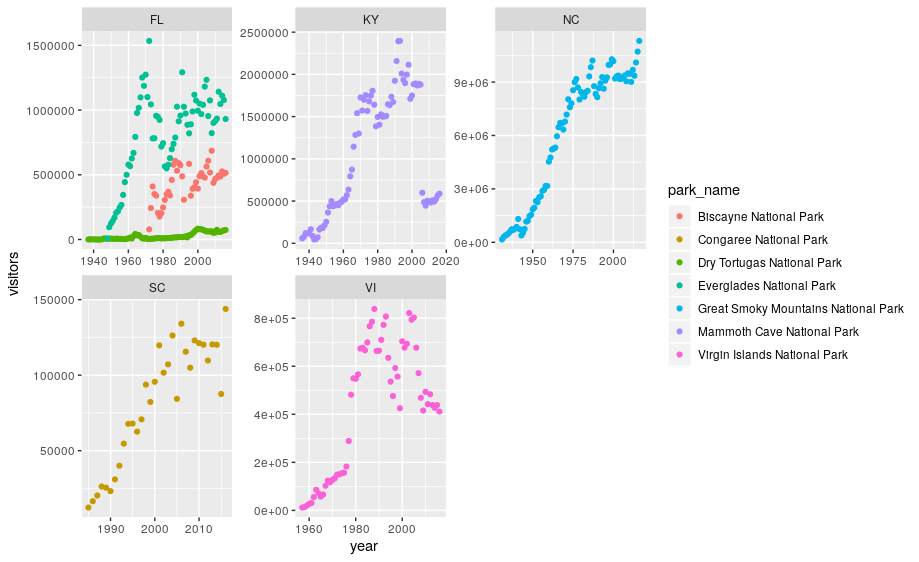

We can now make the faceted plot by splitting further by park using park_name (within a single plot):

ggplot(data = se) +

geom_point(aes(x = year, y = visitors, color = park_name)) +

facet_wrap(~ state, scales = "free")

3.4 Geometric objects (geoms)

A geom is the geometrical object that a plot uses to represent data. People often describe plots by the type of geom that the plot uses. For example, bar charts use bar geoms, line charts use line geoms, boxplots use boxplot geoms, and so on.

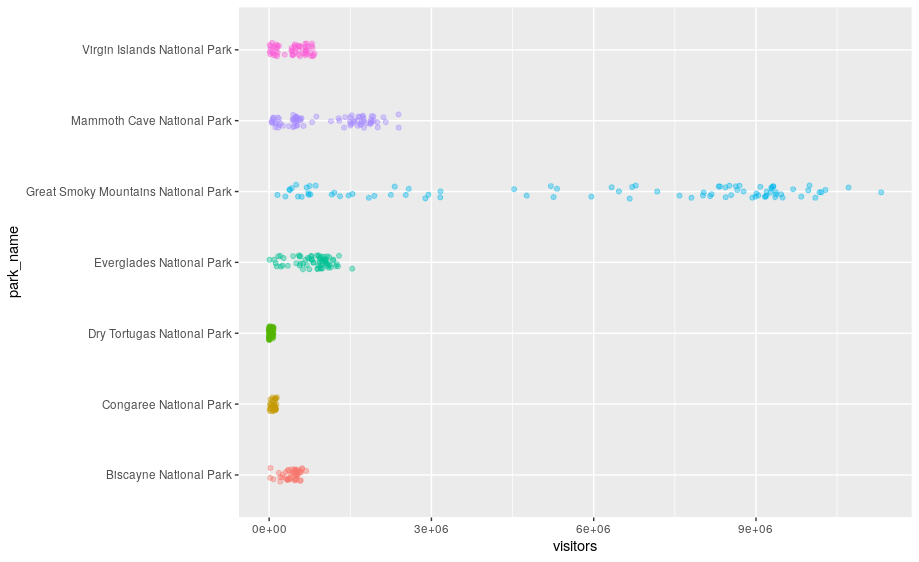

Scatterplots break the trend; they use the point geom. You can use different geoms to plot the same data. To change the geom in your plot, change the geom function that you add to ggplot(). Let’s look at a few ways of viewing the distribution of annual visitation (visitors) for each park (park_name).

# representations as points with a jitter offset

ggplot(data = se) +

geom_jitter(aes(x = park_name, y = visitors, color = park_name),

width = 0.1,

alpha = 0.4) +

coord_flip() +

theme(legend.position = "none")

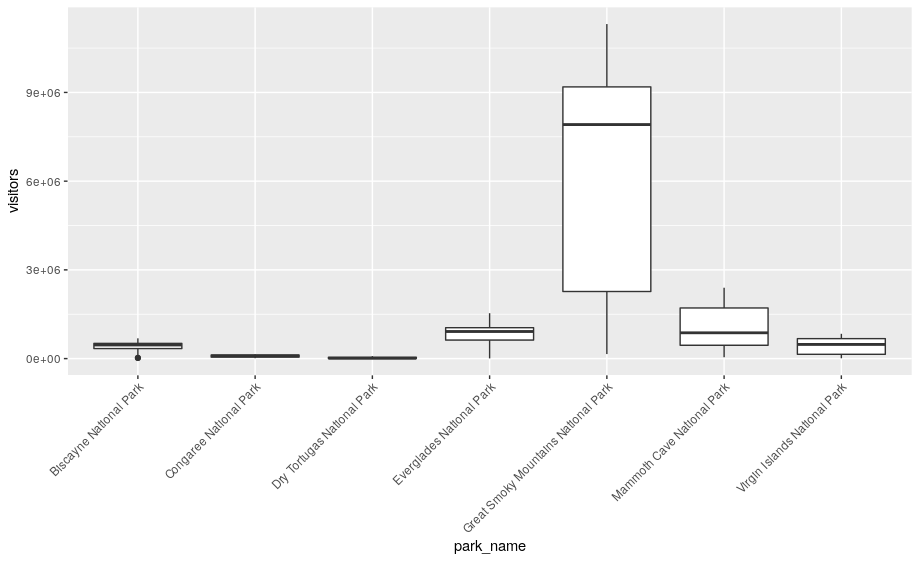

# boxplots

ggplot(se, aes(x = park_name, y = visitors)) +

geom_boxplot() +

theme(axis.text.x = element_text(angle = 45, hjust = 1))

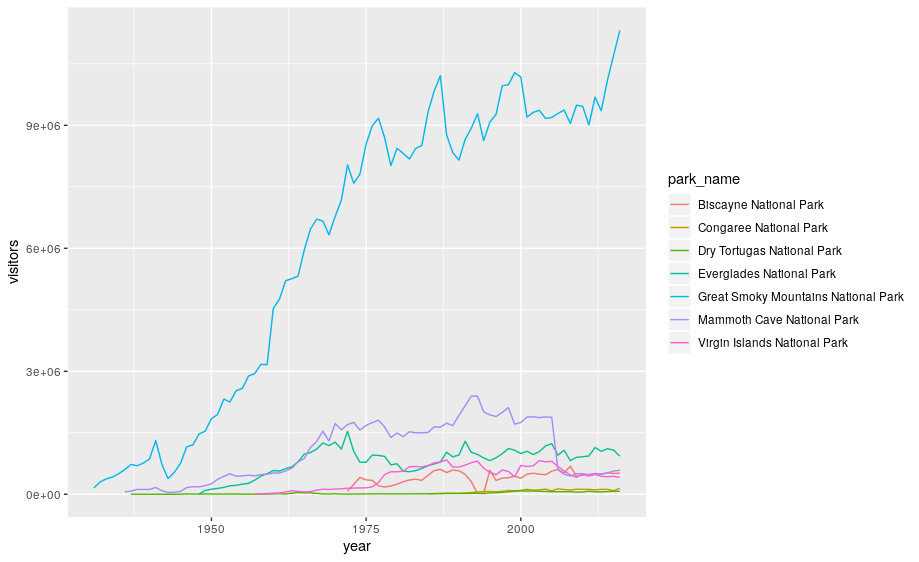

None of these are great for visualizing data over time. We can use geom_line() in the same way we used geom_point.

ggplot(se, aes(x = year, y = visitors, color = park_name)) +

geom_line()

ggplot2 provides over 30 geoms, and extension packages provide even more (see https://exts.ggplot2.tidyverse.org/ for a sampling). The best way to get a comprehensive overview is the ggplot2 cheatsheet. To learn more about any single geom, use help: ?geom_smooth.

To display multiple geoms in the same plot, add multiple geom functions to ggplot():

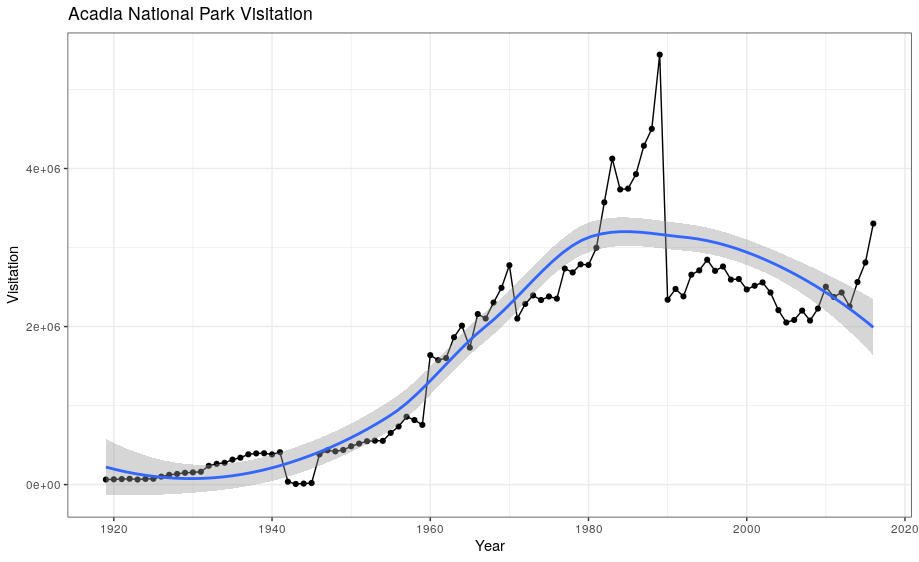

geom_smooth allows you to view a smoothed mean of data. Here we look at the smooth mean of visitation over time to Acadia National Park:

ggplot(data = acadia) +

geom_point(aes(x = year, y = visitors)) +

geom_line(aes(x = year, y = visitors)) +

geom_smooth(aes(x = year, y = visitors)) +

labs(title = "Acadia National Park Visitation",

y = "Visitation",

x = "Year") +

theme_bw()

Notice that this plot contains three geoms in the same graph! Each geom is using the set of mappings in the first line. ggplot2 will treat these mappings as global mappings that apply to each geom in the graph.

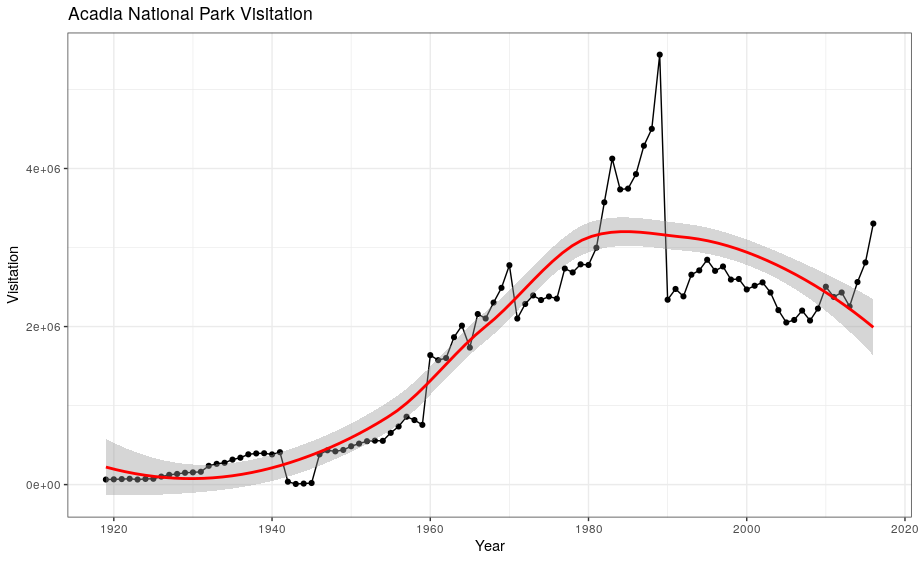

If you place mappings in a geom function, ggplot2 will treat them as local mappings for the layer. It will use these mappings to extend or overwrite the global mappings for that layer only. This makes it possible to display different aesthetics in different layers.

ggplot(data = acadia, aes(x = year, y = visitors)) +

geom_point() +

geom_line() +

geom_smooth(color = "red") +

labs(title = "Acadia National Park Visitation",

y = "Visitation",

x = "Year") +

theme_bw()

Exercise

With all of this information in hand, please take another five minutes to either improve one of the plots generated in this exercise or create a beautiful graph of your own. Use the RStudio

ggplot2cheat sheet for inspiration.Here are some ideas:

- See if you can change the thickness of the lines or line type (e.g. dashed line)

- Can you find a way to change the name of the legend? What about its labels?

- Try using a different color palette: see the R Cookbook.

4. Bar charts

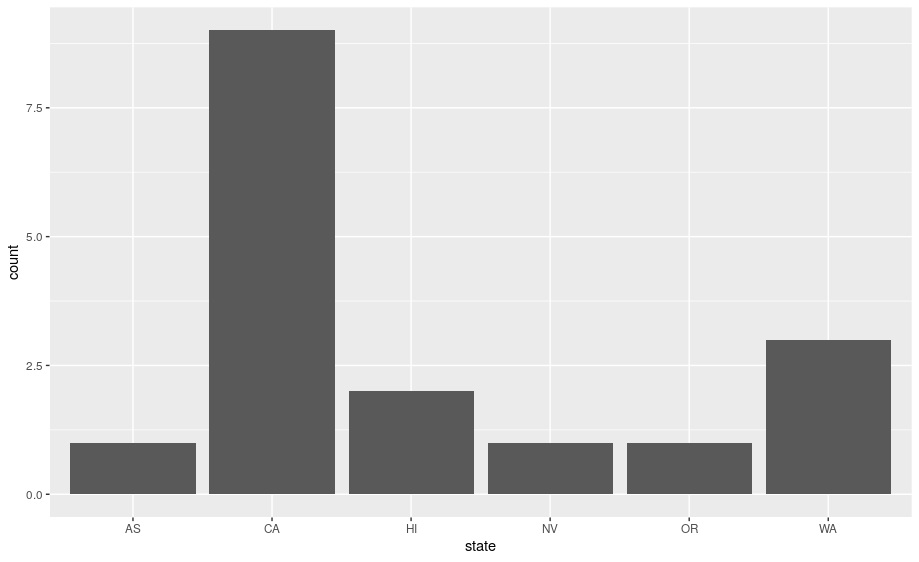

Next, let’s take a look at a bar chart. Bar charts seem simple, but they are interesting because they reveal something subtle about plots. Consider a basic bar chart, as drawn with geom_bar(). The following chart displays the total number of parks in each state within the Pacific West region.

ggplot(data = visit_16, aes(x = state)) +

geom_bar()

On the x-axis, the chart displays state, a variable from visit_16. On the y-axis, it displays count, but count is not a variable in visit_16! Where does count come from? Many graphs, like scatterplots, plot the raw values of your dataset. Other graphs, like bar charts, calculate new values to plot:

-

bar charts, histograms, and frequency polygons bin your data and then plot bin counts, the number of points that fall in each bin.

-

smoothers fit a model to your data and then plot predictions from the model.

-

boxplots compute a robust summary of the distribution and then display a specially formatted box.

The algorithm used to calculate new values for a graph is called a stat, short for statistical transformation.

You can learn which stat a geom uses by inspecting the default value for the stat argument. For example, ?geom_bar shows that the default value for stat is “count”, which means that geom_bar() uses stat_count(). stat_count() is documented on the same page as geom_bar(), and if you scroll down you can find a section called “Computed variables”. That describes how it computes two new variables: count and prop.

ggplot2 provides over 20 stats for you to use. Each stat is a function, so you can get help in the usual way, e.g. ?stat_bin. To see a complete list of stats, try the ggplot2 cheatsheet.

4.1 Position adjustments

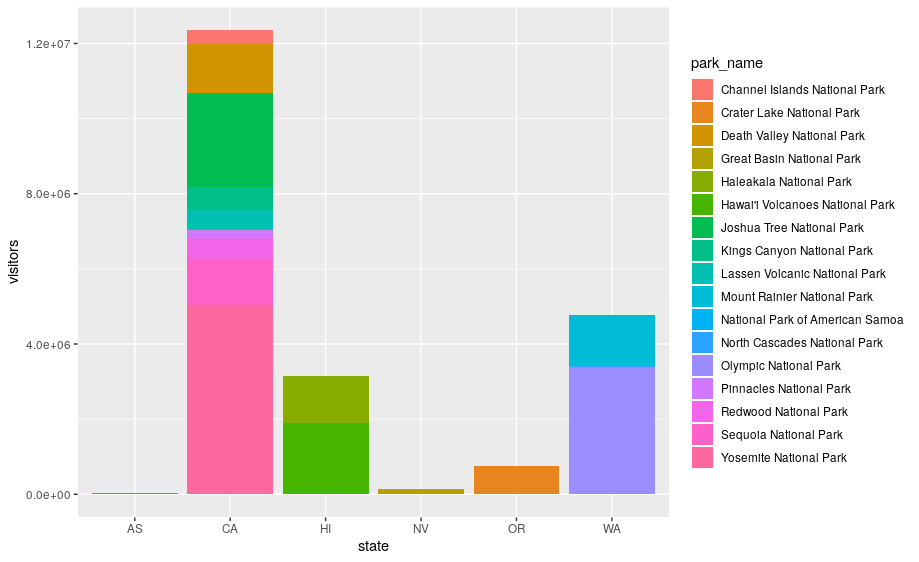

There’s one more piece of magic associated with bar charts. You can colour a bar chart using either the color aesthetic, or, more usefully, fill:

ggplot(data = visit_16, aes(x = state, y = visitors, fill = park_name)) +

geom_bar(stat = "identity")

The stacking is performed automatically by the position adjustment specified by the position argument. If you don’t want a stacked bar chart, you can use "dodge".

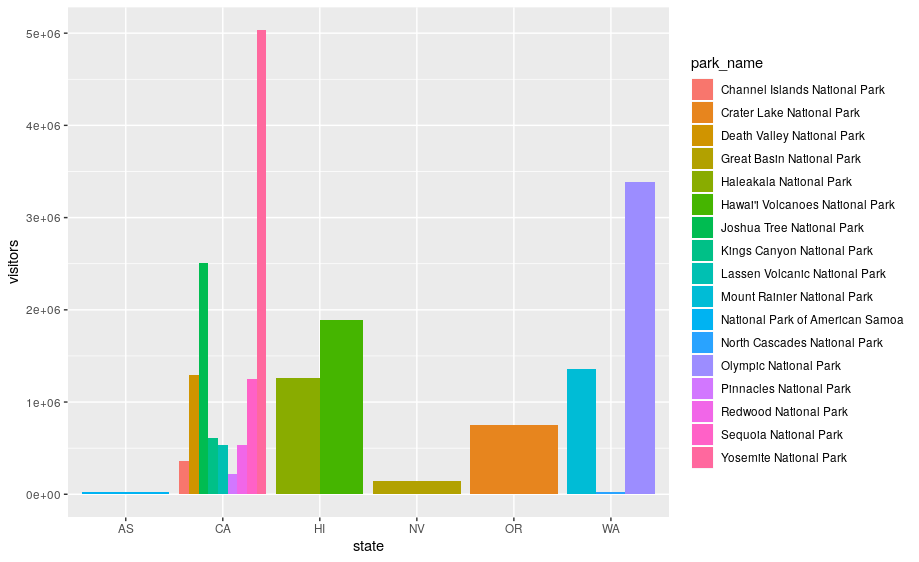

position = "dodge"places overlapping objects directly beside one another. This makes it easier to compare individual values.

ggplot(data = visit_16, aes(x = state, y = visitors, fill = park_name)) +

geom_bar(stat = "identity", position = "dodge")

Exercise

With all of this information in hand, please take another five minutes to either improve one of the plots generated in this exercise or create a beautiful graph of your own. Use the RStudio

ggplot2cheat sheet for inspiration. Remember to use the help documentation (e.g.?geom_bar) Here are some ideas:

- Flip the x and y axes.

- Change the color palette used

- Use

scale_x_discreteto change the x-axis tick labels to the full state names (Arizona, Colorado, etc.)- Make a bar chart using the Massachussets dataset (

mass) and find out how many parks of each type are in the state.Solution

# 1) flip the x and y axes ggplot(data = visit_16, aes(x = state, y = visitors, fill = park_name)) + geom_bar(stat = "identity", position = "dodge") + coord_flip()# 2) change the color palette ggplot(data = visit_16, aes(x = state, y = visitors, fill = park_name)) + geom_bar(stat = "identity", position = "dodge") + coord_flip() + scale_fill_brewer(palette = "Set3")# 3) change x-axis tick labels ggplot(data = visit_16, aes(x = state, y = visitors, fill = park_name)) + geom_bar(stat = "identity", position = "dodge") + coord_flip() + scale_fill_brewer(palette = "Set3") + scale_x_discrete(labels = mass$park_name)# 4) How many of each types of parks are in Massachusetts? ggplot(data = mass) + geom_bar(aes(x = type, fill = type)) + labs(x = "Type of park", y = "Number of parks")+ theme(axis.text.x = element_text(angle = 45, hjust = 1, size = 7))

4.2 Arranging and exporting plots

After creating your plot, you can save it to a file in your favorite format. The Export tab in the Plot pane in RStudio will save your plots at low resolution, which will not be accepted by many journals and will not scale well for posters.

Instead, use the ggsave() function, which allows you easily change the dimension and resolution of your plot by adjusting the appropriate arguments (width, height and dpi):

my_plot <- ggplot(data = mass) +

geom_bar(aes(x = type, fill = park_name)) +

labs(x = "",

y = "")+

theme(axis.text.x = element_text(angle = 45, hjust = 1, size = 7))

ggsave("name_of_file.png", my_plot, width = 15, height = 10)

Note: The parameters width and height also determine the font size in the saved plot.

4.3 bonus 1: animated graph

So as you can see, ggplot2 is a fantastic package for visualizing data. But there are some additional packages that let you make plots interactive. plotly, gganimate.

# install package if necessary and load library

# install.packages("plotly")

library(plotly)

my_plot <- ggplot(data = mass) +

geom_bar(aes(x = type, fill = park_name)) +

labs(x = "",

y = "")+

theme(axis.text.x = element_text(angle = 45, hjust = 1, size = 7))

ggplotly(my_plot)

acad_vis <- ggplot(data = acadia, aes(x = year, y = visitors)) +

geom_point() +

geom_line() +

geom_smooth(color = "red") +

labs(title = "Acadia National Park Visitation",

y = "Visitation",

x = "Year") +

theme_bw()

ggplotly(acad_vis)

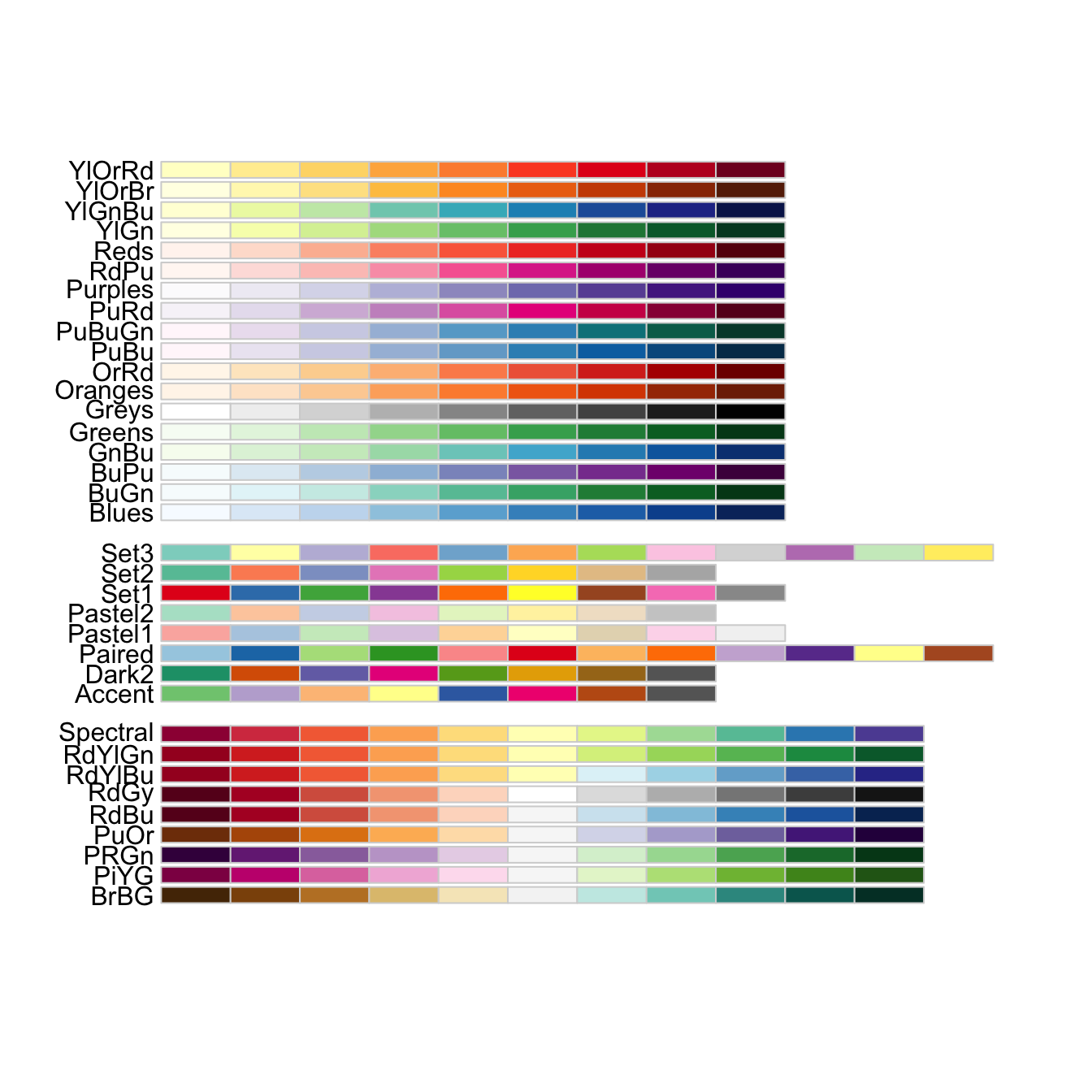

4.4 bonus 2: additional colours with scale_colour_brewer

We can use the scale_colour_brewer from the ggplot2 package to change the colour scheme of our plot.

From the help page of the function:

The brewer scales provides sequential, diverging and qualitative colour schemes from ColorBrewer. These are particularly well suited to display discrete values on a map. See http://colorbrewer2.org for more information.

ggplot(data = ca, aes(x = year, y = visitors, color = park_name)) +

geom_point() +

geom_line() +

labs(title = "Acadia National Park Visitation",

y = "Visitation",

x = "Year") +

theme_bw() +

scale_colour_brewer(type = "qual", palette = "Set1")

All palettes are visible below. Always make sure that you have enough colors in the palette for the number of categories you want to display.

5. Resources

Here are some additional resources for data visualization in R:

- ggplot2-cheatsheet-2.1.pdf

- Interactive Plots and Maps - Environmental Informatics

- Graphs with ggplot2 - Cookbook for R

- ggplot2 Essentials - STHDA

- “Why I use ggplot2” - David Robinson Blog Post

- “The Grammar of Graphics explained” - Towards Data Science blog series

Key Points

ggplot2relies on the grammar of graphics, an advanced methodology to visualise data.ggplot() creates a coordinate system that you can add layers to.

You pass a mapping using

aes()to link dataset variables to visual properties.You add one or more layers (or

geoms) to theggplotcoordinate system andaesmapping.Building a minimal plot requires to supply a dataset, mapping aesthetics and geometric layers (geoms).

ggplot2offers advanced graphical visualisations to plot extra information from the dataset.

Data transformation with dplyr

Overview

Teaching: 45 min

Exercises: 15 minQuestions

How do I perform data transformations such as removing columns on my data using R?

What are tidy data (in opposition to messy data)?

How do I import data into R (e.g. from a web link)?

How can I make my code more readable when performing a series of transformation?

Objectives

Learn how to explore a publically available dataset (gapminder).

Learn how to perform data transformation with the

dplyrfunctions from thetidyversepackage

Table of contents

- 1. Introduction

- 2. Explore the gapminder dataframe

- 3.

dplyrbasics - 4. All together now

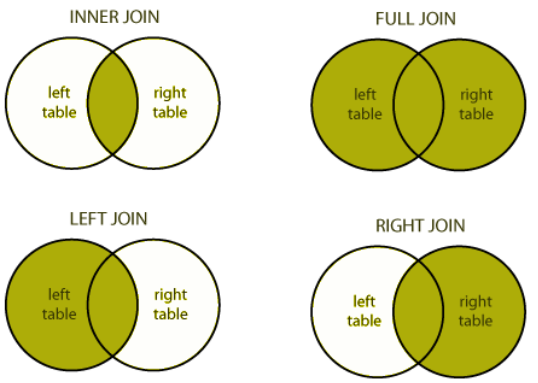

- 5. Joining datasets

- 6. Resources and credits

1. Introduction

1.1 Why should we care about data transformation?

Data scientists, according to interviews and expert estimates, spend from 50 percent to 80 percent of their time mired in the mundane labor of collecting and preparing data, before it can be explored for useful information. - NYTimes (2014)

What are some common things you like to do with your data? Maybe remove rows or columns, do calculations and maybe add new columns? This is called data wrangling (or more simply data transformation). It’s not data management or data manipulation: you keep the raw data raw and do these things programatically in R with the tidyverse.

We are going to introduce you to data wrangling in R first with the tidyverse. The tidyverse is a new suite of packages that match a philosophy of data science developed by Hadley Wickham and the RStudio team. I find it to be a more straight-forward way to learn R. We will also show you by comparison what code will look like in base-R, which means, in R without any additional packages (like the tidyverse package) installed. I like David Robinson’s blog post on the topic of teaching the tidyverse first.

For some things, base-R is more straightforward, and we’ll show you that too. Whenever we use a function that is from the tidyverse, we will prefix it so you’ll know for sure.

1.2 Gapminder dataset

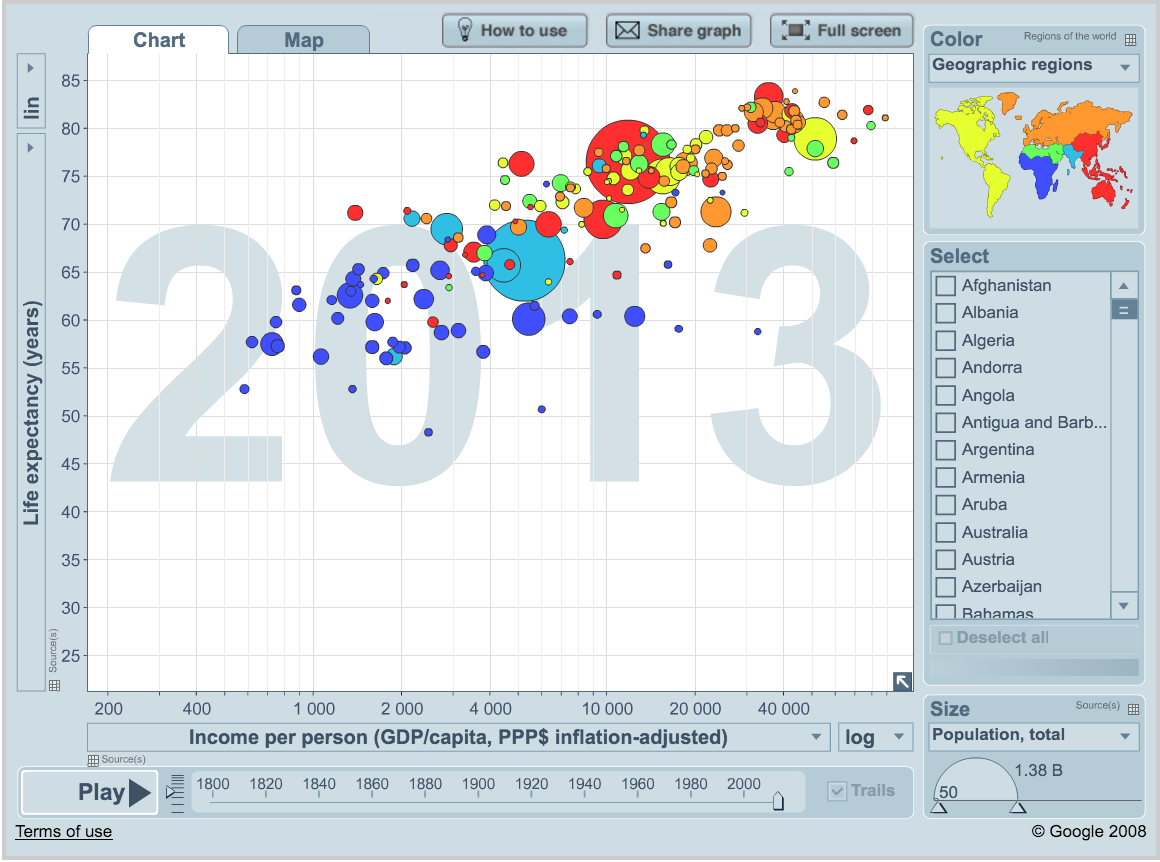

We’ll be using Gapminder data, which represents the health and wealth of nations. It was pioneered by Hans Rosling, who is famous for describing the prosperity of nations over time through famines, wars and other historic events with this beautiful data visualization in his 2006 TED Talk: The best stats you’ve ever seen:

1.3 Load the tidyverse suite

We’ll use the package dplyr, which is bundled within the tidyverse suite of packages. Please load the tidyverse if not already done.

library("tidyverse")

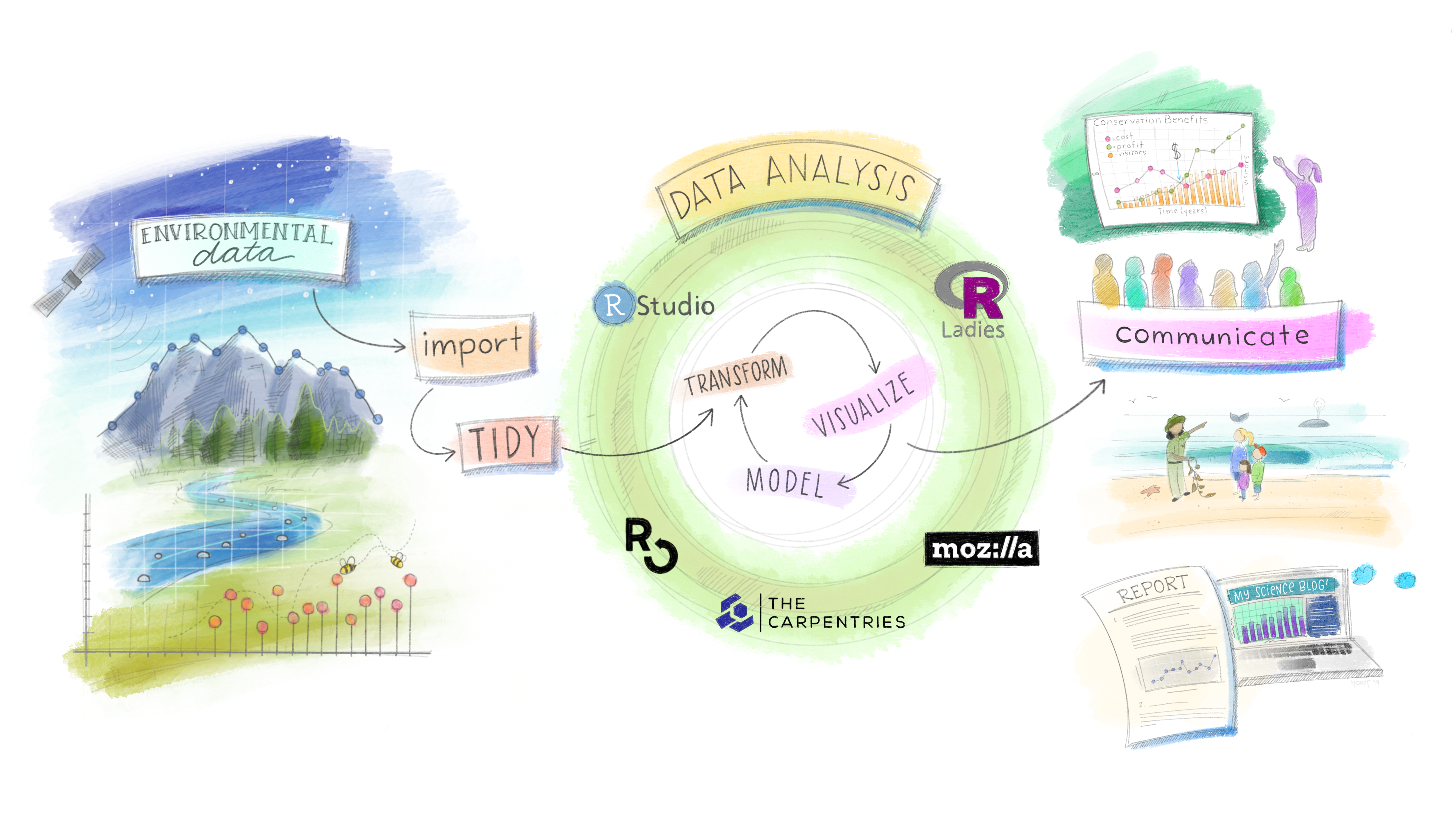

The tidyverse package suite contains all the tools you need for data science. Actually, Hadley Wickham and RStudio have created a ton of packages that help you at every step of the way here. This is from one of Hadley’s presentations:

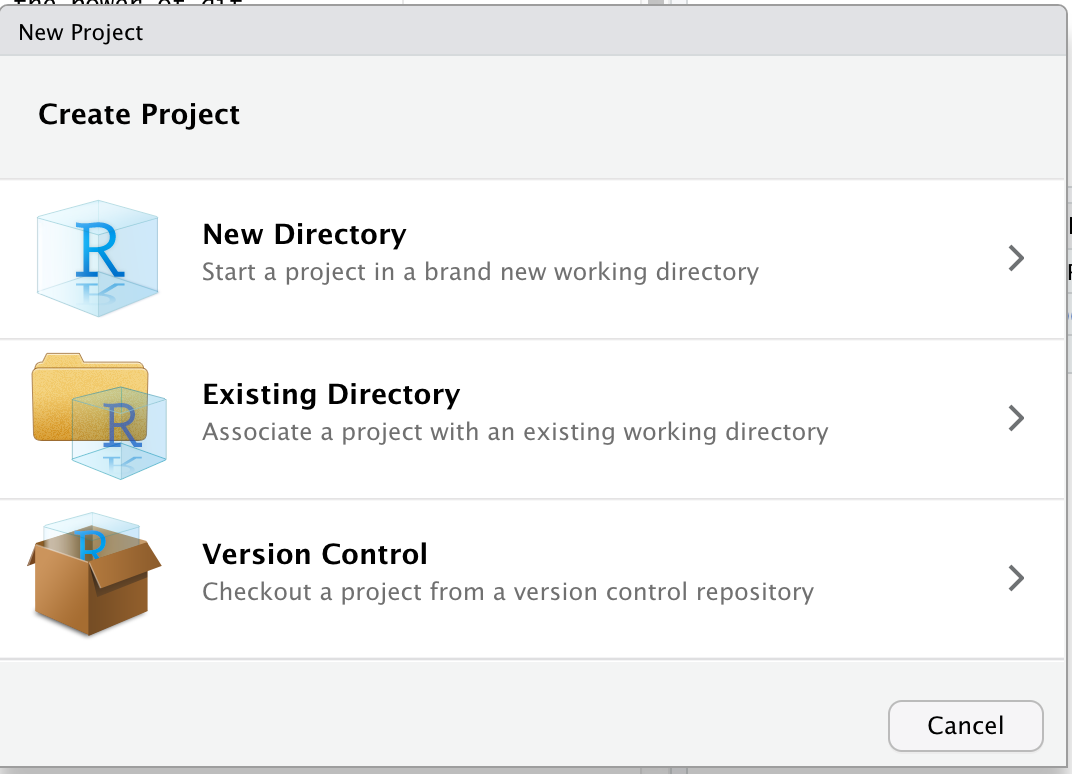

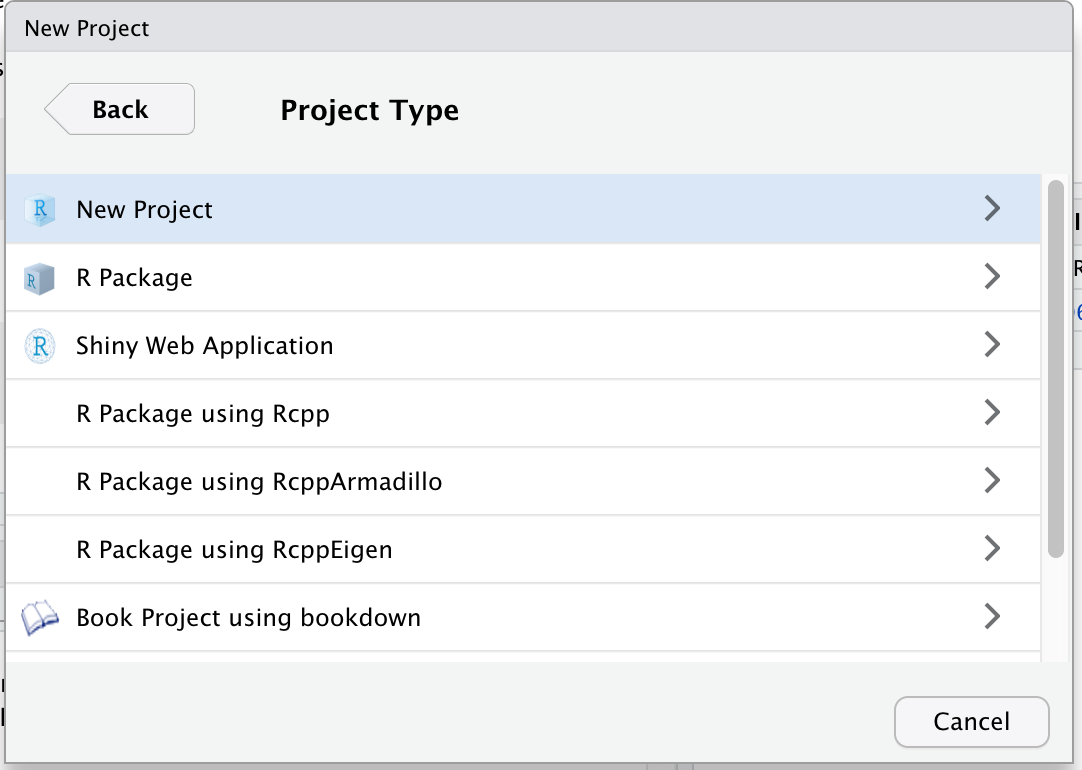

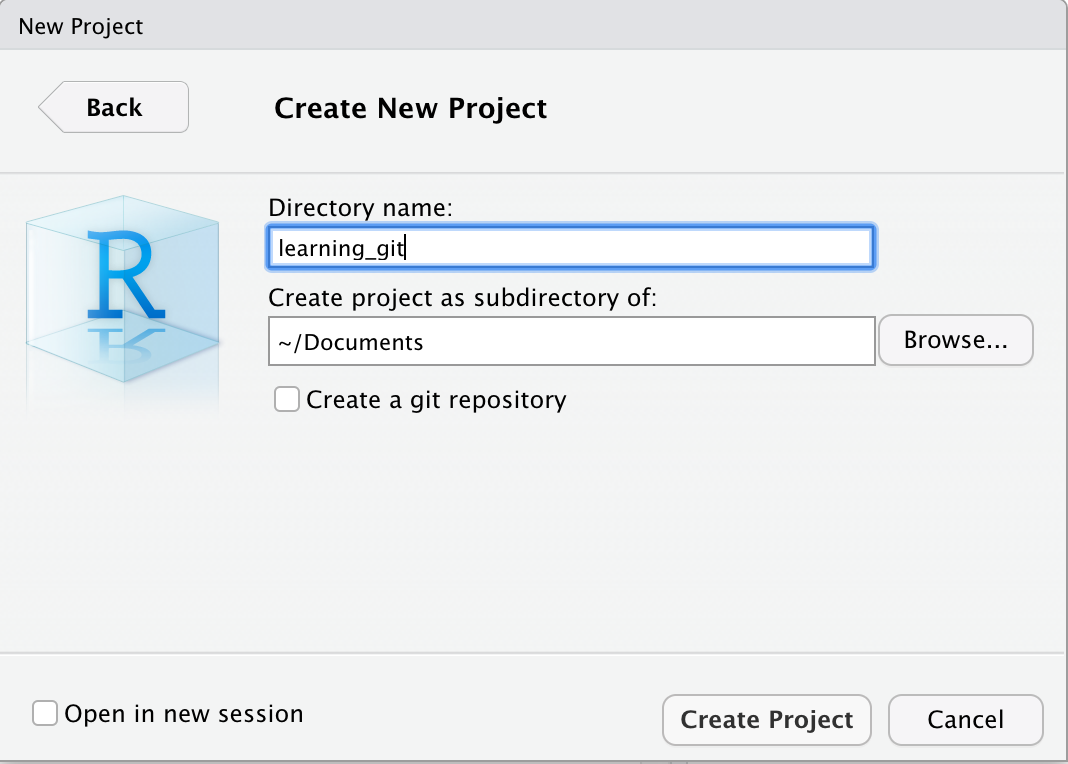

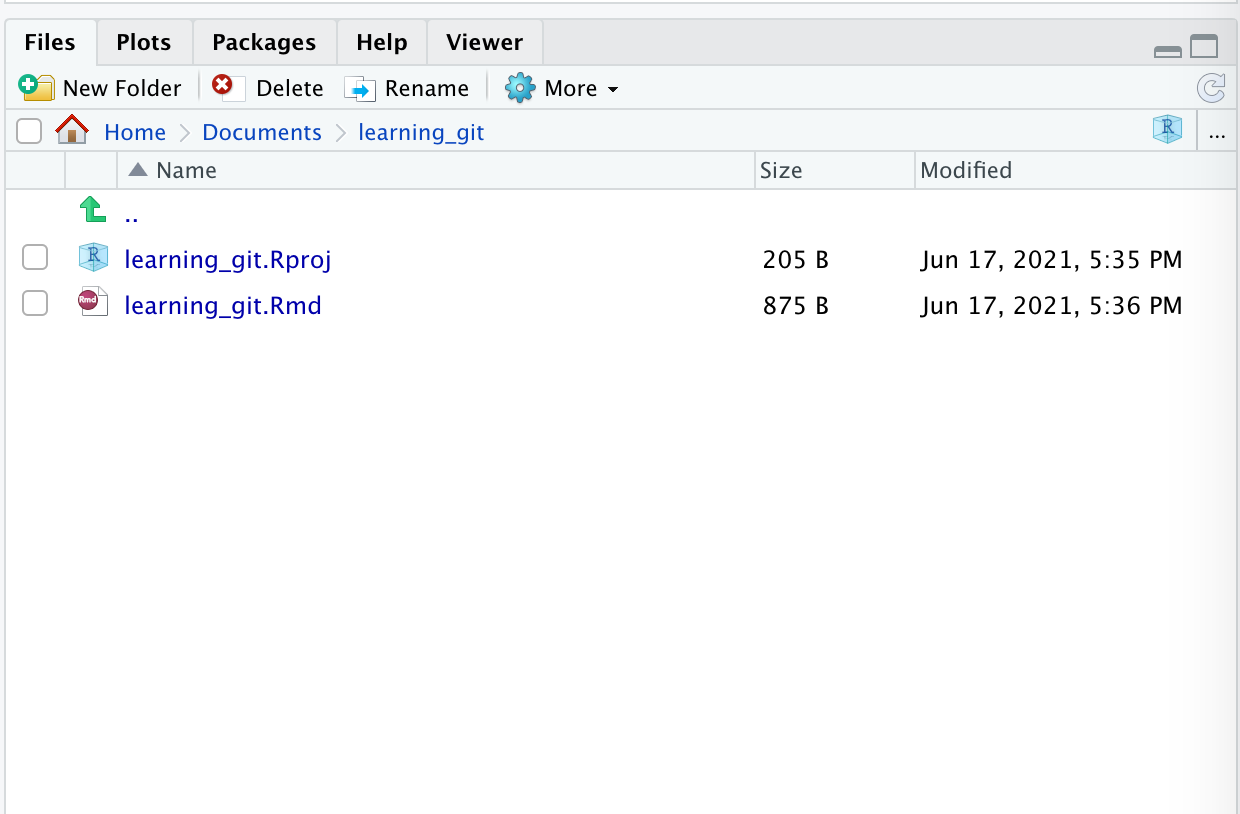

1.4 Create a new R Markdown file.

We’ll do this in a new R Markdown file.

Here’s what to do:

- Clear your workspace (Session > Restart R)

- New File > R Markdown…

- Save as

gapminder-wrangle.Rmd - Delete the irrelevant text and write a little note to yourself about this section: “cleaning and transforming the gapminder dataset.”

2. Explore the gapminder dataframe

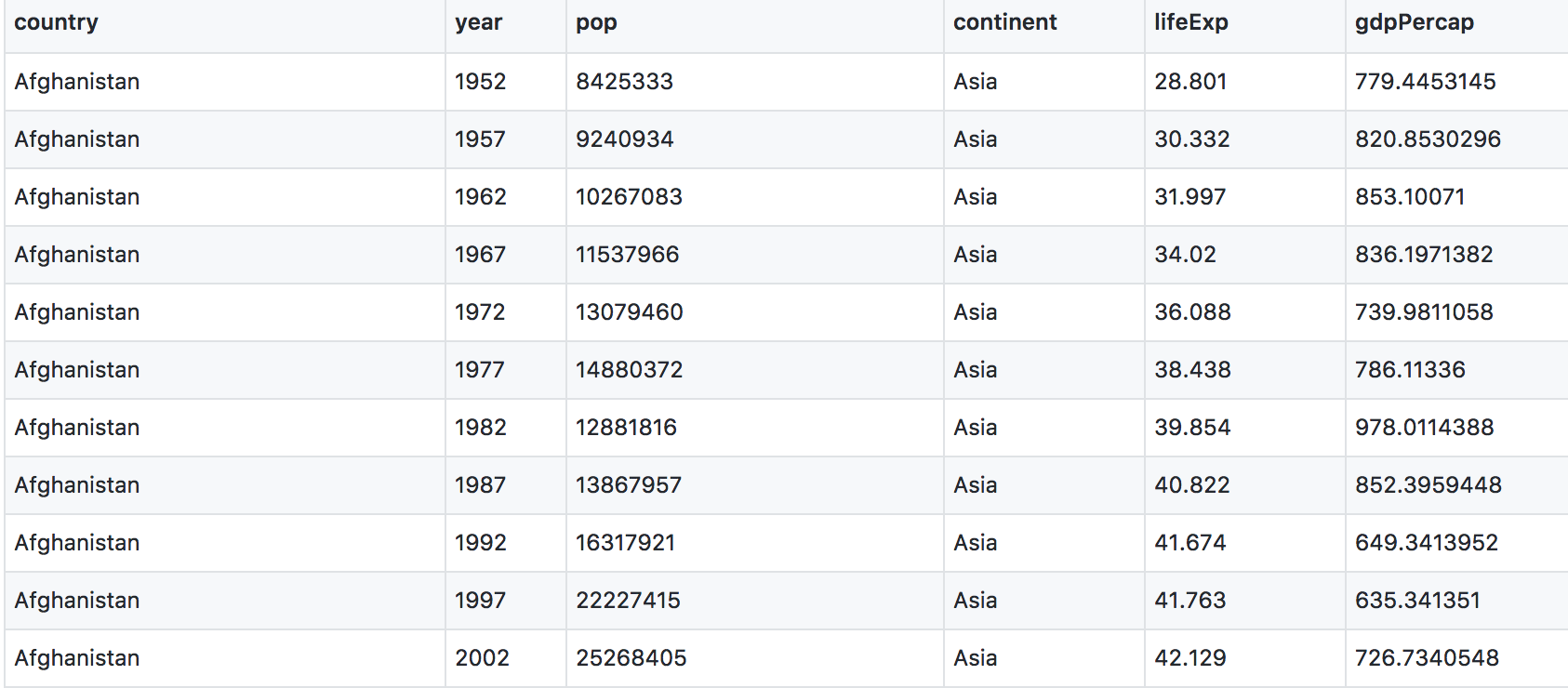

Previously, we explored the national parks dataframe visually. Today, we’ll explore a dataset by the numbers. We will work with some of the data from the Gapminder project.

The data are on GitHub. Navigate to: https://github.com/carpentries-incubator/open-science-with-r/blob/gh-pages/data/gapminder.csv.

This is data-view mode: so we can have a quick look at the data. It’s a .csv file, which you’ve probably encountered before, but GitHub has formatted it nicely so it’s easy to look at. You can see that for every country and year, there are several columns with data in them.

2.1 Import data with readr::read_csv()

We can read this data into R directly from GitHub, without downloading it. But we can’t read this data in view-mode. We have to click on the Raw button on the top-right of the data. This displays it as the raw csv file, without formatting.

Copy the url for raw data: https://raw.githubusercontent.com/carpentries-incubator/open-science-with-r/gh-pages/data/gapminder.csv

Now, let’s go back to RStudio. In our R Markdown, let’s read this .csv file and name the variable gapminder. We will use the read_csv() function from the readr package (part of the tidyverse, so it’s already installed!).

## read gapminder csv. Note the readr:: prefix identifies which package it's in

gapminder <- readr::read_csv('https://raw.githubusercontent.com/carpentries-incubator/open-science-with-r/gh-pages/data/gapminder.csv')

Note

read_csvworks with local filepaths as well, you could use one from your computer.

2.2 Dataset inspection

Let’s inspect the data with head and tail:

head(gapminder) # shows first 6

tail(gapminder) # shows last 6

head(gapminder, n = 10) # shows first X that you indicate

tail(gapminder, n = 12) # guess what this does!

str() will provide a sensible description of almost anything: when in doubt, inspect using str() on some of the recently created objects to get some ideas about what to do next.

str(gapminder) # ?str - displays the structure of an object

str(gapminder)

Classes ‘spec_tbl_df’, ‘tbl_df’, ‘tbl’ and 'data.frame': 1704 obs. of 6 variables:

$ country : chr "Afghanistan" "Afghanistan" "Afghanistan" "Afghanistan" ...

$ year : num 1952 1957 1962 1967 1972 ...

$ pop : num 8425333 9240934 10267083 11537966 13079460 ...

$ continent: chr "Asia" "Asia" "Asia" "Asia" ...

$ lifeExp : num 28.8 30.3 32 34 36.1 ...

$ gdpPercap: num 779 821 853 836 740 ...

- attr(*, "spec")=

.. cols(

.. country = col_character(),

.. year = col_double(),

.. pop = col_double(),

.. continent = col_character(),

.. lifeExp = col_double(),

.. gdpPercap = col_double()

.. )

This will show how R understood your data types. Check that numbers are indeed understood as num/numeric and strings as chr/character.

You can get the number of rows and columns of the gapminder dataframe with dim().

dim(gapminder)

[1] 1704 6

It shows that our dataframe has 1704 rows and 6 columns.

R imports gapminder as a dataframe. We aren’t going to get into the other types of data receptacles today (‘arrays’, ‘matrices’), because working with dataframes is what you should primarily use. Why?

- dataframes contain related variables neatly together, great for analysis

- most functions, including the latest and greatest packages actually require that your data be in a dataframe

- dataframes can hold variables of different flavors such as:

- character data (country or continent names; “Characters (chr)”)

- quantitative data (years, population; “Integers (int)” or “Numeric (num)”)

- categorical information (male vs. female)

We can also see the gapminder variable in RStudio’s Environment pane (top right).

More ways to learn basic info on a dataframe.

names(gapminder) # column names

ncol(gapminder) # ?ncol number of columns

nrow(gapminder) # ?nrow number of rows

2.3 Descriptive statistics of the gapminder dataset

A statistical overview can be obtained with summary(), or with skimr::skim()

summary(gapminder)

country year pop continent lifeExp gdpPercap

Length:1704 Min. :1952 Min. :6.001e+04 Length:1704 Min. :23.60 Min. : 241.2

Class :character 1st Qu.:1966 1st Qu.:2.794e+06 Class :character 1st Qu.:48.20 1st Qu.: 1202.1

Mode :character Median :1980 Median :7.024e+06 Mode :character Median :60.71 Median : 3531.8

Mean :1980 Mean :2.960e+07 Mean :59.47 Mean : 7215.3

3rd Qu.:1993 3rd Qu.:1.959e+07 3rd Qu.:70.85 3rd Qu.: 9325.5

Max. :2007 Max. :1.319e+09 Max. :82.60 Max. :113523.1

This will give simple descriptive statistics (e.g. median, average) for each column if numeric.

Finally, the skimr package provides a powerful descriptive function for dataframes.

library(skimr)

skim(gapminder)

── Data Summary ────────────────────────

Values

Name gapminder

Number of rows 1704

Number of columns 6

_______________________

Column type frequency:

character 2

numeric 4

________________________

Group variables None

── Variable type: character ──────────────────────────────────────────────────────────────────────────────────────────────────────────────

skim_variable n_missing complete_rate min max empty n_unique whitespace

1 country 0 1 4 24 0 142 0

2 continent 0 1 4 8 0 5 0

── Variable type: numeric ────────────────────────────────────────────────────────────────────────────────────────────────────────────────

skim_variable n_missing complete_rate mean sd p0 p25 p50 p75 p100 hist

1 year 0 1 1980. 17.3 1952 1966. 1980. 1993. 2007 ▇▅▅▅▇

2 pop 0 1 29601212. 106157897. 60011 2793664 7023596. 19585222. 1318683096 ▇▁▁▁▁

3 lifeExp 0 1 59.5 12.9 23.6 48.2 60.7 70.8 82.6 ▁▆▇▇▇

4 gdpPercap 0 1 7215. 9857. 241. 1202. 3532. 9325. 113523. ▇▁▁▁▁

This gives you a comprehensive view of your data at a glance.

3. dplyr basics

OK, so let’s start wrangling with the dplyr collection of functions. .

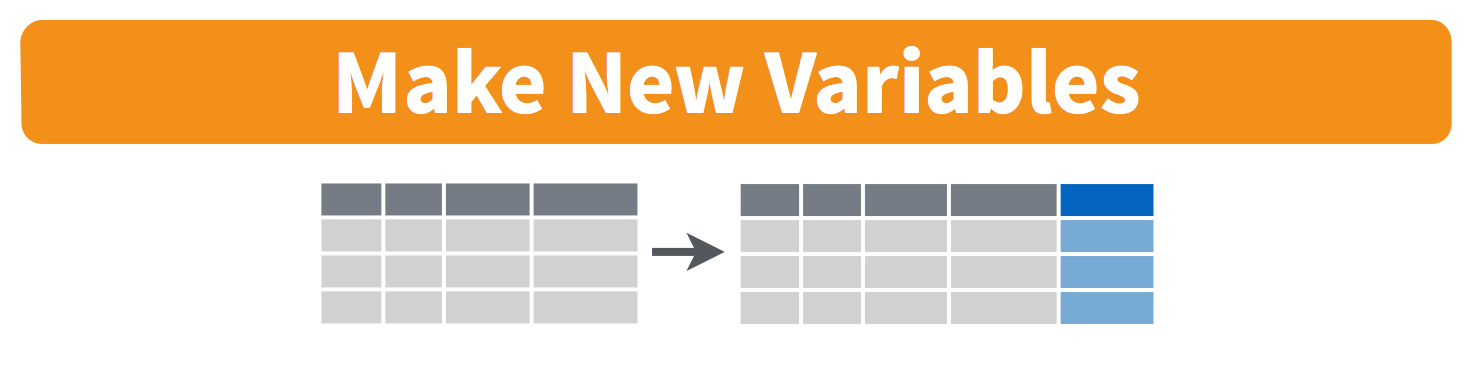

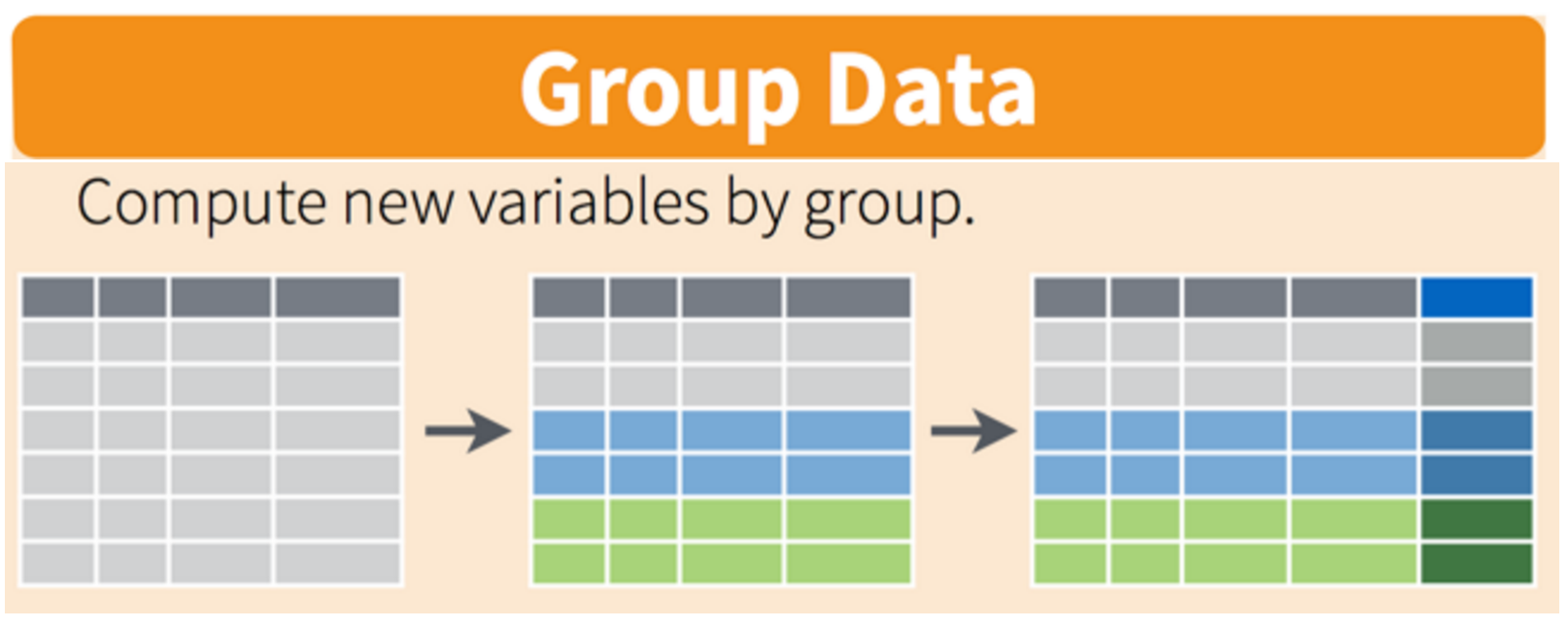

There are five dplyr functions that you will use to do the vast majority of data manipulations:

-

filter(): pick observations by their values

-

select(): pick variables by their names

-

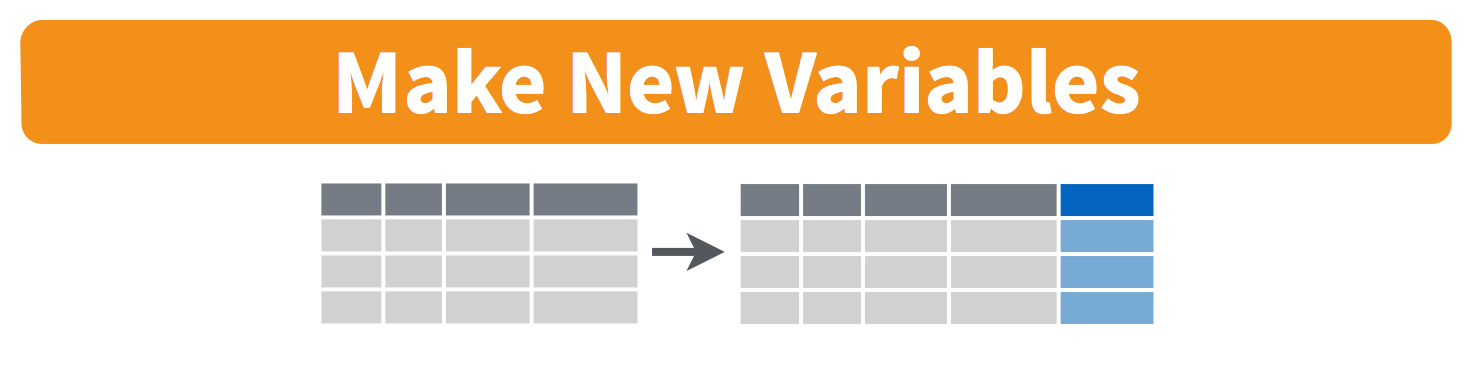

mutate(): create new variables with functions of existing variables

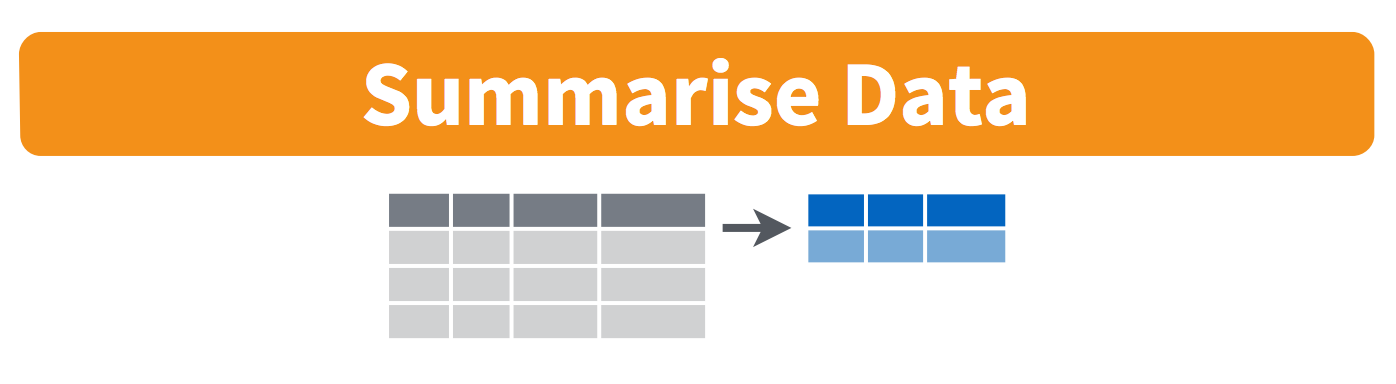

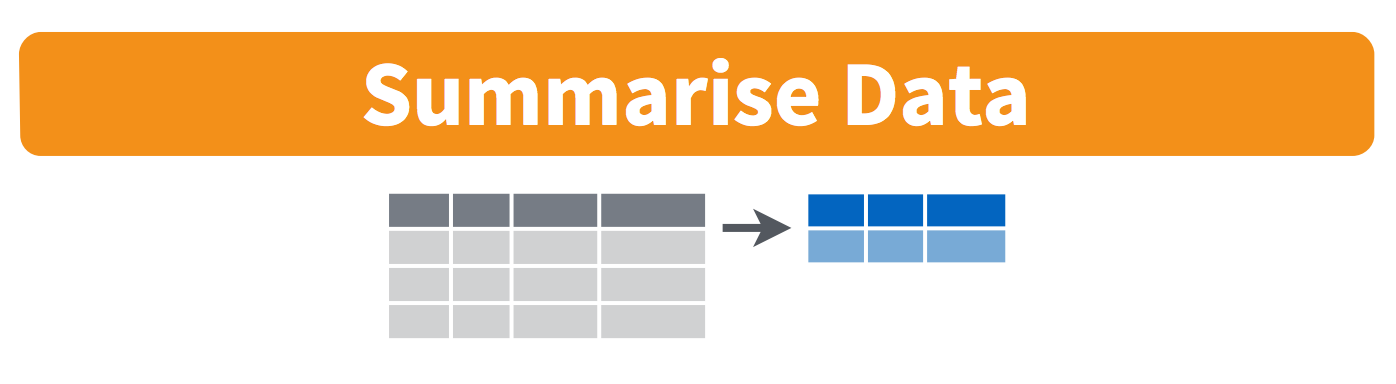

-

summarise(): collapse many values down to a single summary

-

arrange(): reorder the rows

These can all be used in conjunction with group_by() which changes the scope of each function from operating on the entire dataset to operating on it group-by-group. These six functions provide the verbs for a language of data manipulation.

All verbs work similarly:

- The first argument is a data frame.

- The subsequent arguments describe what to do with the data frame. You can refer to columns in the data frame directly without using

$. - The result is a new data frame.

Together these properties make it easy to chain together multiple simple steps to achieve a complex result.

3.1 filter() observations

You will want to isolate bits of your data; maybe you want to only look at a single country or a few years. R calls this subsetting.

filter() is a function in dplyr that takes logical expressions and returns the rows for which all are TRUE.

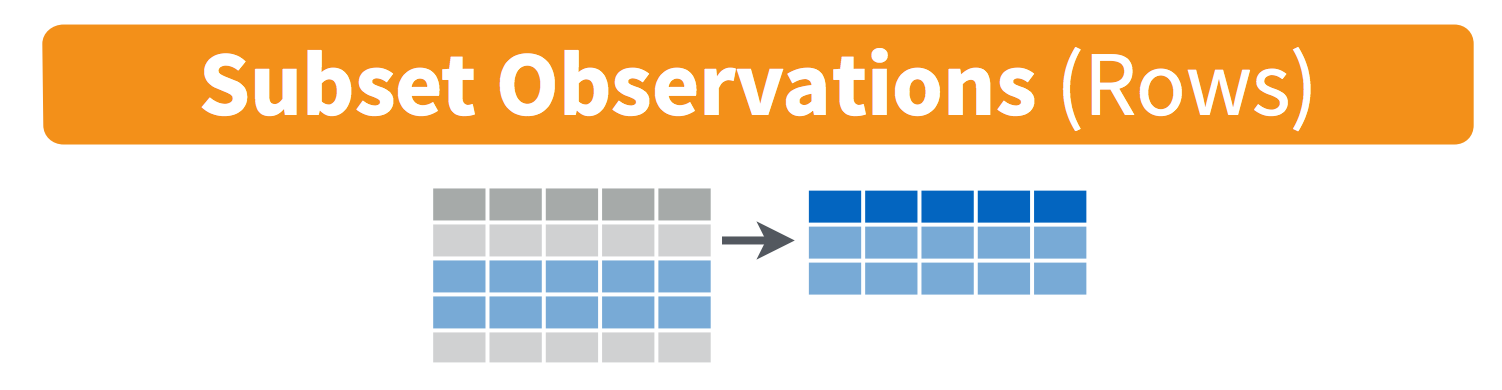

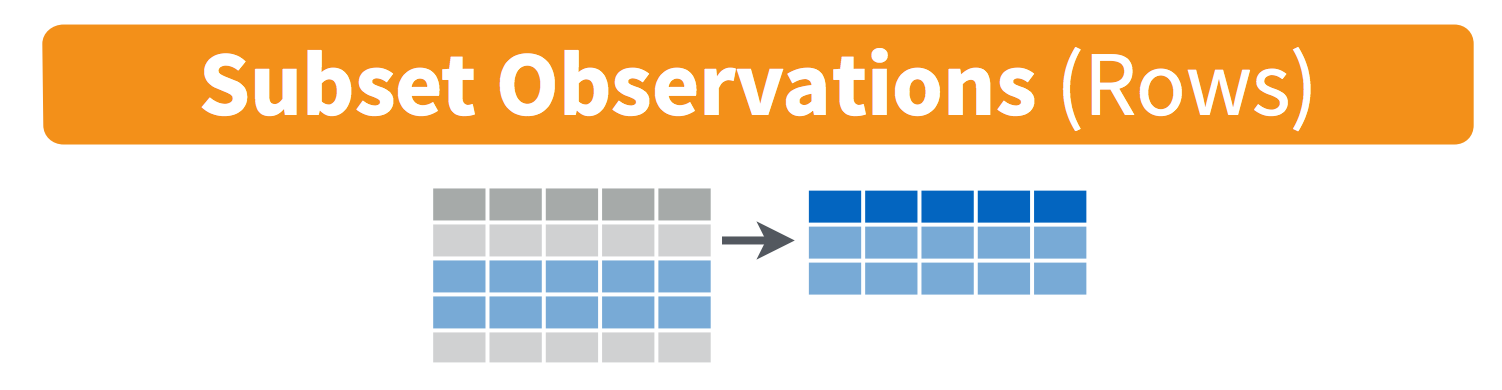

Visually, we are doing this:

Remember your logical expressions from this morning? We’ll use < and == here.

filter(gapminder, lifeExp < 29)

You can say this out loud: “Filter the gapminder data for life expectancy less than 29”. Notice that when we do this, all the columns are returned, but only the rows that have the life expectancy less than 29. We’ve subsetted by row.

Let’s try another: “Filter the gapminder data for the country Mexico”.

filter(gapminder, country == "Mexico")

How about if we want two country names? We can’t use the == operator here, because it can only operate on one thing at a time. We will use the %in% operator:

filter(gapminder, country %in% c("Mexico", "Peru"))

How about if we want Mexico in 2002? You can pass filter different criteria:

filter(gapminder, country == "Mexico", year == 2002)

Exercise

What is the mean life expectancy of Sweden?

Hint: do this in 2 steps by assigning a variable and then using themean()function.Solution

sweden <- filter(gapminder, country == "Sweden")

mean(sweden$lifeExp)

3.2 select() variables

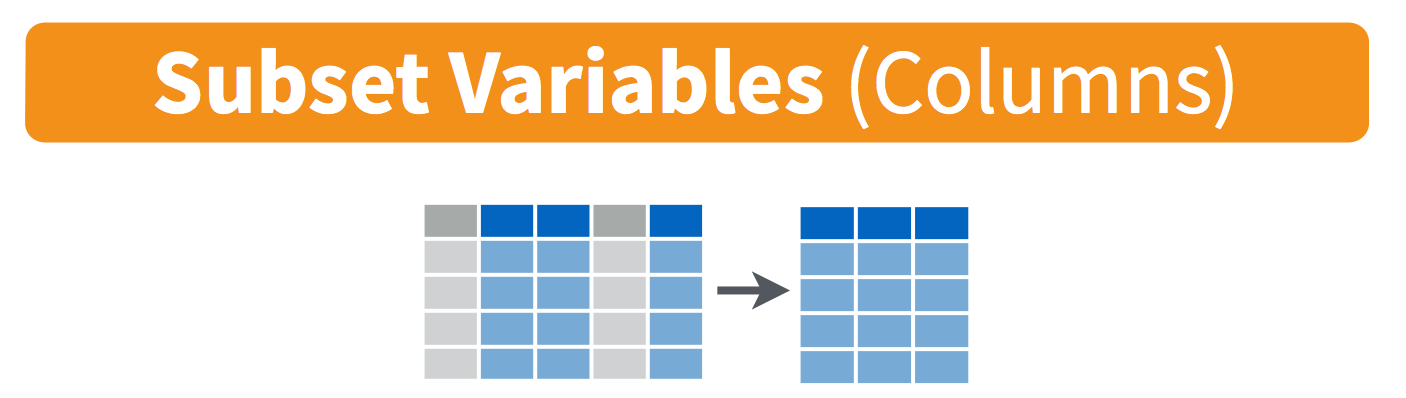

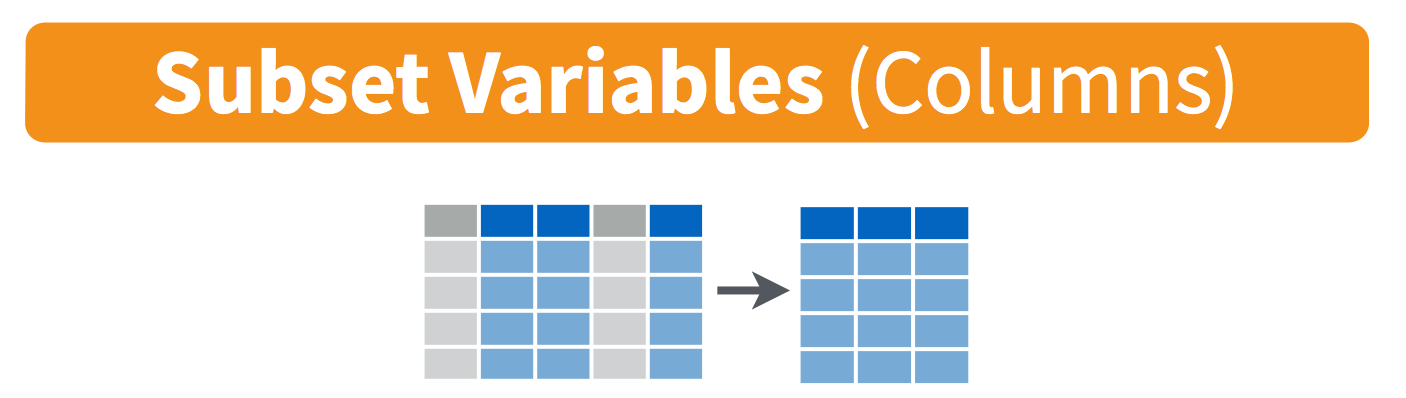

We use select() to subset the data on variables or columns.

Visually, we are doing this:

We can select multiple columns with a comma, after we specify the data frame (gapminder).

select(gapminder, year, lifeExp)

We can also use - to deselect columns

select(gapminder, -continent, -lifeExp) # you can use - to deselect columns

3.3 The pipe %>% operator

What if we want to use select() and filter() together?

Let’s filter for Cambodia and remove the continent and lifeExp columns. We’ll save this as a variable. Actually, as two temporary variables, which means that for the second one we need to operate on gap_cambodia, not gapminder.

gap_cambodia <- filter(gapminder, country == "Cambodia")

gap_cambodia2 <- select(gap_cambodia, -continent, -lifeExp)

We also could have called them both gap_cambodia and overwritten the first assignment. Either way, naming them and keeping track of them gets super cumbersome, which means more time to understand what’s going on and opportunities for confusion or error.

Good thing there is an awesome alternative.

Before we go any further, we should exploit the new pipe operator that comes from the magrittr package by Stefan Bache. The package name refers to the Belgium surrealist artist René Magritte that made a famous painting with a pipe.

The %>% operator is going to change your life. You no longer need to enact multi-operation commands by nesting them inside each other. And we won’t need to make temporary variables like we did in the Cambodia example above. This new syntax leads to code that is much easier to write and to read: it actually tells the story of your analysis.

Here’s what it looks like: %>%.

Keyboard shortcuts for the pipe operator

The RStudio keyboard shortcut:

Ctrl+Shift+M(Windows),Cmd+Shift+M(Mac).

Let’s demo then I’ll explain:

gapminder %>% head()

# A tibble: 6 x 6

country year pop continent lifeExp gdpPercap

<chr> <dbl> <dbl> <chr> <dbl> <dbl>

1 Afghanistan 1952 8425333 Asia 28.8 779.

2 Afghanistan 1957 9240934 Asia 30.3 821.

3 Afghanistan 1962 10267083 Asia 32.0 853.

4 Afghanistan 1967 11537966 Asia 34.0 836.

5 Afghanistan 1972 13079460 Asia 36.1 740.

6 Afghanistan 1977 14880372 Asia 38.4 786.

This is equivalent to head(gapminder).

This pipe operator takes the thing on the left-hand-side and pipes it into the function call on the right-hand-side. It literally drops it in as the first argument.

Never fear, you can still specify other arguments to this function! To see the first 3 rows of Gapminder, we could say head(gapminder, n = 3) or this:

gapminder %>% head(n = 3)

I’ve advised you to think “gets” whenever you see the assignment operator, <-. Similarly, you should think “and then” whenever you see the pipe operator, %>%.

One of the most awesome things about this is that you START with the data before you say what you’re doing to DO to it. So above: “take the gapminder data, and then give me the first three entries”.

This means that instead of this:

## instead of this...

gap_cambodia <- filter(gapminder, country == "Cambodia")

gap_cambodia2 <- select(gap_cambodia, -continent, -lifeExp)

## ...we can do this

gap_cambodia <- gapminder %>% filter(country == "Cambodia")

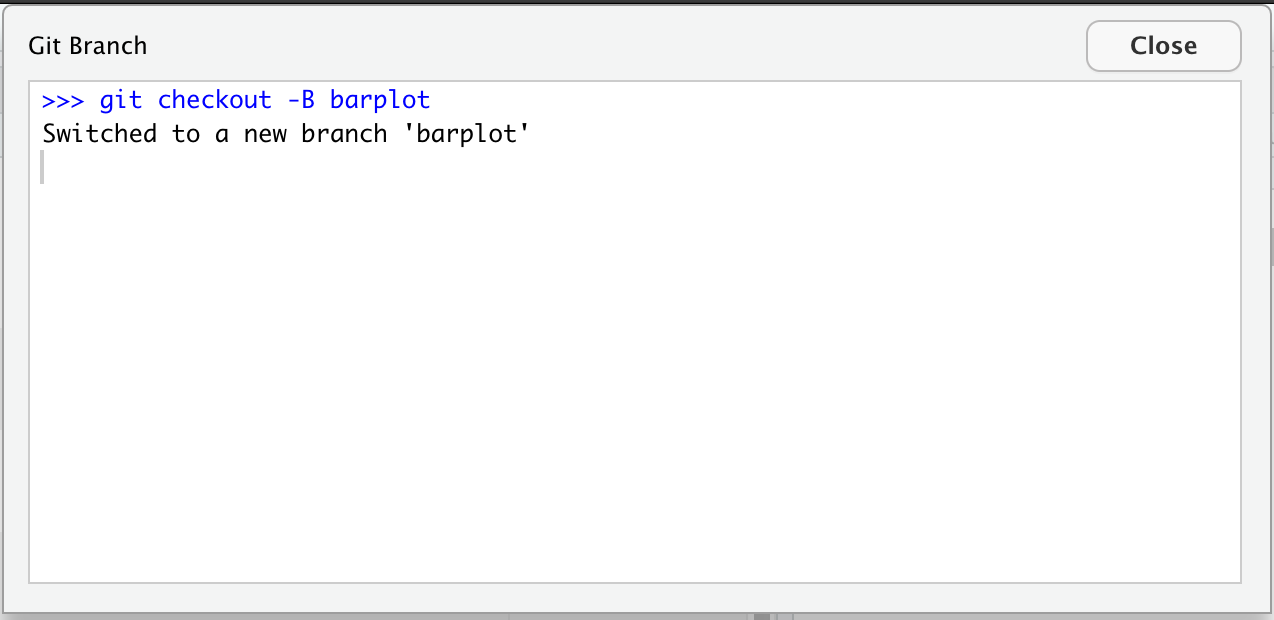

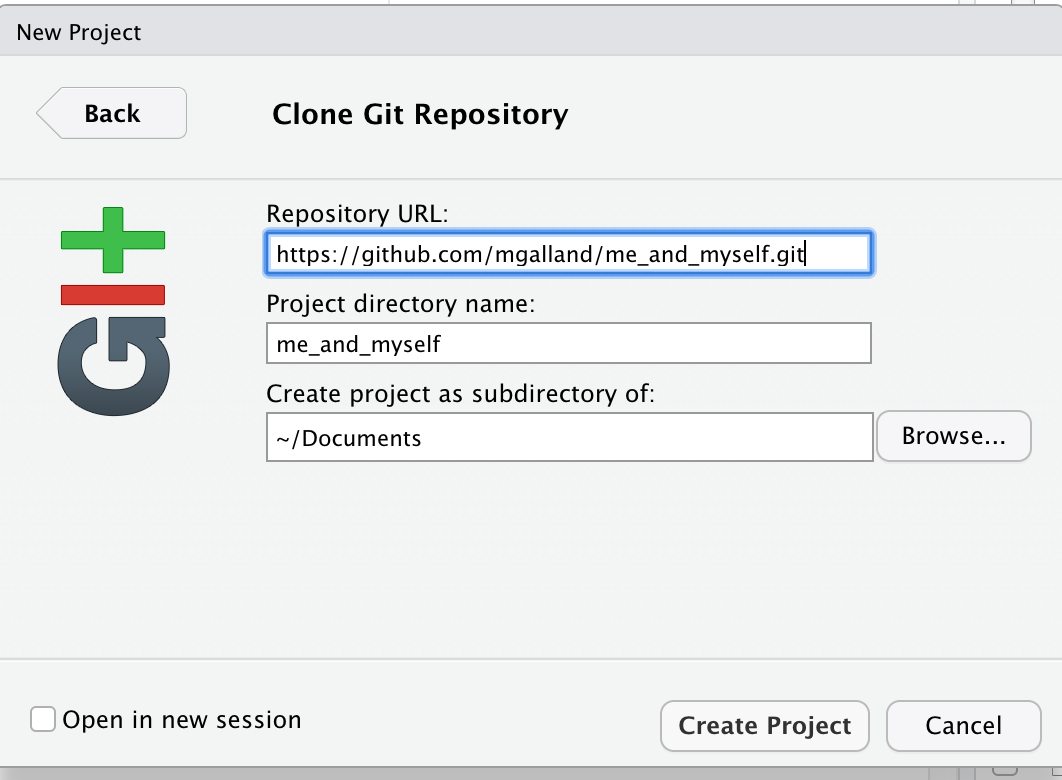

gap_cambodia2 <- gap_cambodia %>% select(-continent, -lifeExp)