Why care about open (data) science?

Overview

Teaching: 40 min

Exercises: 10 minQuestions

What is Open Science?

What is Open and Reproducible Research Practices?

Objectives

To understand the importance to share data and code

To value code and data for what they are: the true foundations of any scientific statement.

To promote good practices for open & reproducible science

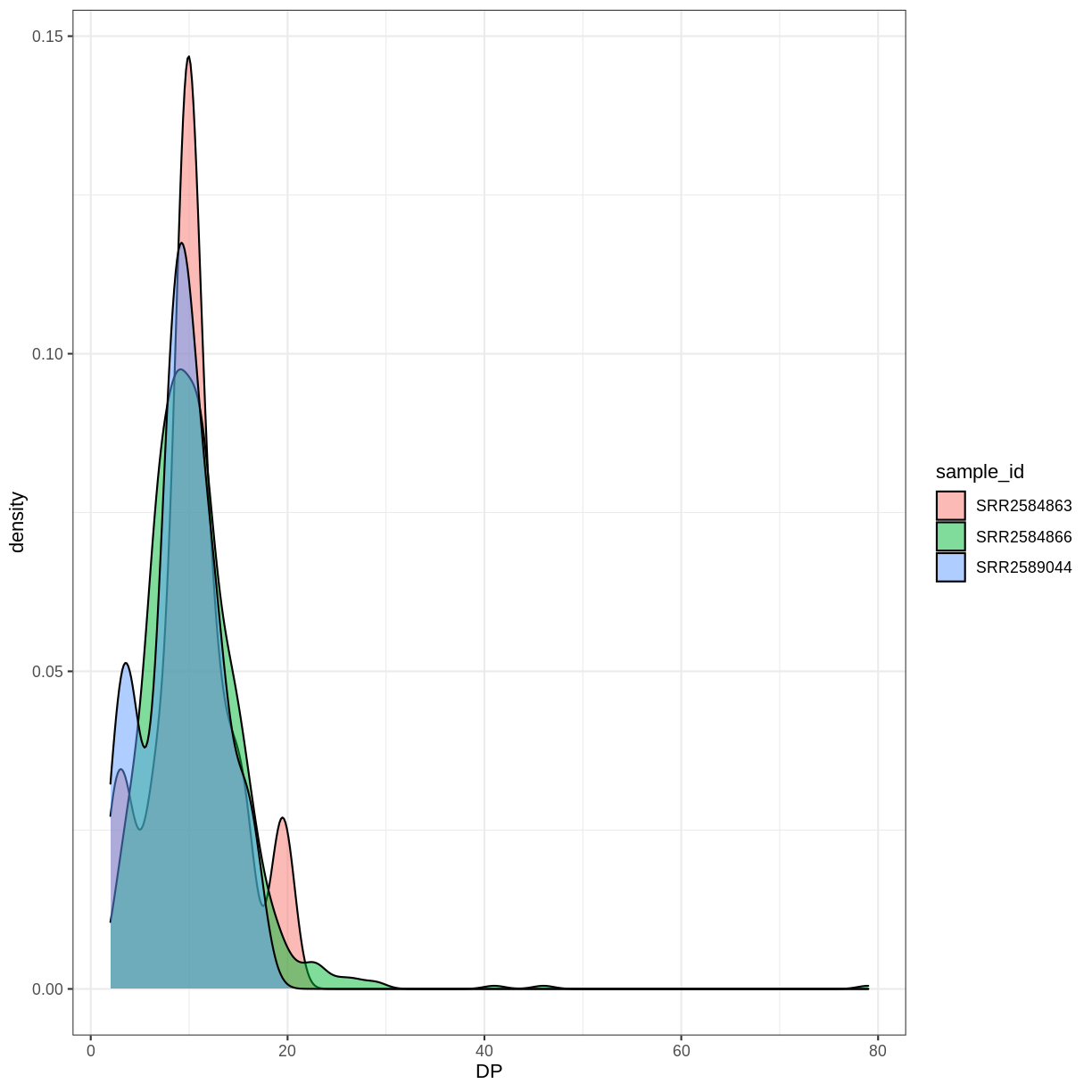

The Crisis of Confidence

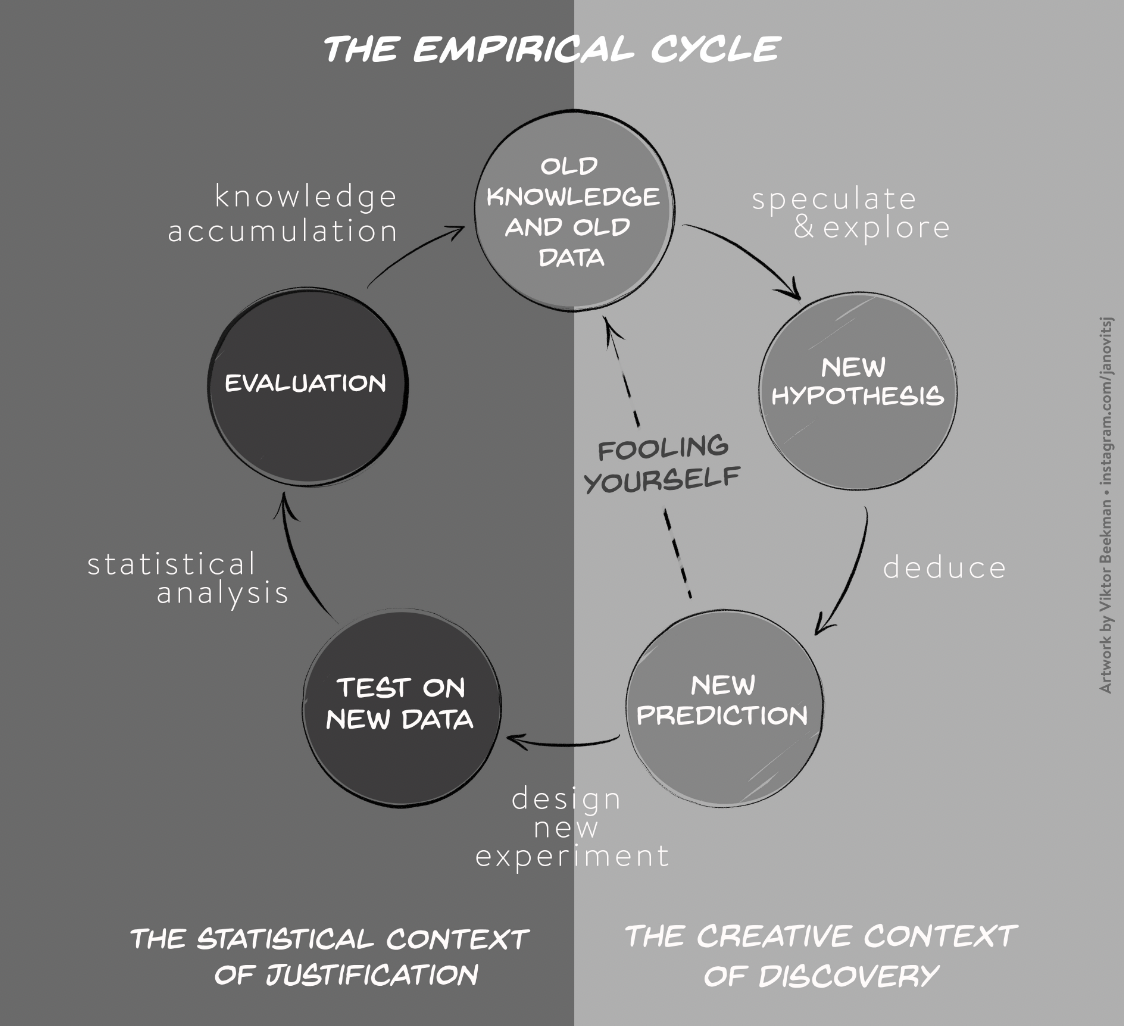

The crisis of confidence poses a general problem across most empirical research disciplines and is characterized by an alarmingly low rate of key findings that are reproducible (e.g., Fidler et al. 2017; Open Science Collaboration, 2015; Poldrack et al., 2017; Wager et at., 2009). A low reproducibility rate can arise when scientists do not respect the empirical cycle. Scientific research methods for experimental research are based on the hypothetico-deductive approach (see e.g., de Groot, 1969; Peirce, 1878), which is illustrated in Figure 1.

Figure 1. The two stages of the empirical cycle; after the initial stage of creative discovery and hypothesis generation (illustrated in the right panel), researchers test their hypotheses in a statistical context of justification (illustrated in the left panel). However, scientists fool themselves, if they test their new predictions on old knowledge and old data (dotted line).”

The empirical cycle suggests that scientists initially find themselves in “the creative context of discovery”, where the primary goal is to generate hypotheses and predictions based on exploration and data-dependent analyses. Subsequently, this initial stage of discovery is followed by “the statistical context of justification”. This is the stage of hypothesis-testing in which the statistical analysis must be independent of the outcome. Scientists may fool themselves whenever the results from the creative context of discovery with its data-dependent analyses are treated as if they came from the statistical context of justification. Since the selection of hypotheses now capitalizes on chance fluctuations, the corresponding findings are unlikely to replicate.

This suggests that the crisis of confidence is partly due to a blurred distinction between statistical analyses that are pre-planned and post-hoc, caused by the scientists degree of freedom in conducting the experiment, analyzing the data, and reporting the outcome. In a research environment with a high degree of freedom it is tempting to present the data exploration efforts as confirmatory (Carp, 2013). Kerr (1998, p. 204) attributed this biased reporting of favorable outcomes to an implicit effect of a hindsight bias: “After we have the results in hand and with the benefit of hindsight, it may be easy to misrecall that we had really ‘known it all along’, that what turned out to be the best post hoc explanation had also been our preferred a priori explanation.”

To overcome the crisis of confidence the research community must change the way scientists conduct their research. The alternatives to current research practices generally aim to increase transparency, openness, and reproducibility. Applied to the field of ecology, Ellison (2010, p. 2536) suggests that “repeatability and reproducibility of ecological synthesis requires full disclosure not only of hypotheses and predictions, but also of the raw data, methods used to produce derived data sets, choices made as to which data or data sets were included in, and which were excluded from, the derived data sets, and tools and techniques used to analyze the derived data sets.” To facilitate their uptake, however, it is essential that these open and reproducible research practices are concrete and practical.

Open and Reproducible Research Practices

In this section, we focus on open and reproducible research practices that researchers can implement directly into their workflow, such as data sharing, creating reproducible analyses, and the preregistration of studies.

Data Sharing

International collaboration is a cornerstone for the field of ecology and thus the documentation, and archiving of large volume of (multinational) data and metadata is becoming increasingly important. Even though many scientists are reluctant to make their data publicly available, data sharing can increase the impact of their research. For instance, in cancer research, studies for which data were publicly available received higher citation rates compared to studies for which data were not available (Piwowar, Day, & Fridsma, 2007). This is due to the fact that other researchers can build directly on existing data, analyze them using utilize novel statistical techniques and modelling tools, and mine them from new perspectives (Carpenter et al., 2009).

Reproducibility of Statistical Results

One of the core scientific values is reprodicibility. The reproducibility of experimental designs and methods allows the scientific community to determine the validity of alledged effects.

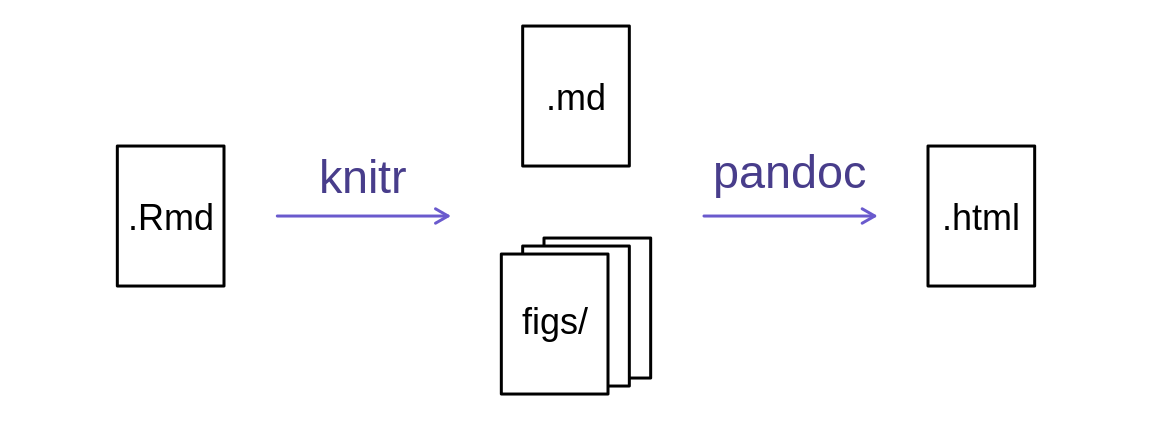

The benefit of publishing fully reporducible statistical results (including the reporting of all data preprocessing steps) is that collaborators, peer-reviewers, and independent researchers can repeat the analysis –from raw data and to the creation of relevant figures and tables– and verify the correctness of the results. Scientific articles are not free from typographical mistakes and it has been shown that the prevalence for statistical reporting errors is shockingly high. For instance, Nuijten et al. (2015) examined the prevalence of statistical reporting errors in the field of psychology and found that almost 50% or all psychological articles papers contain at least one error. These reporting errors can lead to erroneous substantive conclusions and influence, for instance, the results of meta-analyses. Most importantly, however, is that these errors are preventable. Through tools, such as git and RMarkdown, researchers can automate their statistical reporting and produce fully reproducible research papers.

Preregistration and Registered Reports

A blurred distinction between statistical analyses that are pre-planned and post-hoc causes many researchers to (unintentionally) use questionable research practices to produce significant findings (QRPs; John, Loewenstein, & Prelec, 2012). The most effective method to combat questionable research practices is preregistration, a procedure to curtail scientists’ degrees of freedom (e.g., Wagenmakers & Dutilh, 2016. When preregistering studies, scientists commit to an analysis plan in advance of data collection. By making a sharp distinction between hypothesis generating and analyzing the data, preregistration eliminates the confusion between exploratory and confirmatory research.

Over the last years, preregistration has quickly gained popularity and has established itself over several platforms and formats. Scientists can now choose to preregister their work either independently —for instance on platforms like https://asPredicted.org or the Open Science Framework (OSF)— or preregister their studies directly in a journal in the format of a Registered Report as promoted by Chris Chambers (2013). Currently about 200 journals —including Nature: Human Behaviour— accept Registered Reports either as a regular submission option or as part of a single special issue (see https://cos.io/rr/ for the full list).

Preregistration is encouraged in the transparency and openness promotion (TOP) guidelines (Nosek et al., 2015 and represents the standard for the analysis of clinical trials; for instance, in the New England Journal of Medicine —the world’s highest impact journal— the registration of Clinical Trials is a prerequisite for publication.

Challenges

- Data sharing: ethical concerns (share data that harm others, e.g., lowering property values or private data that are collected, for instance, through satellites); Solution = share anonimized data, policies need to be developed

- Preregistration: Loosing the flexibility to adapt analysis plans to unexpected peculiarities of the data; Solution = data blinding, which is standard practice in astrophysics)

- Reproducibility: Additional costs associated with the time it takes to adequatly annotate and archive the code so that independent researchers can understand and reproduce fugures and results; Solution = reproducible workflow, for instance by working in Git and Rmarkdown

Exercise: Reflect on your own reserach!

Have you ever had a problem reproducing your own research or someone else’s research? Why do think some research is irreproducible?

Solution

Read more about reproducibility crisis here.

Three messages

If there are 3 things to communicate to others after this workshop, I think they would be:

1. Data science is a discipline that can improve your analyses

- There are concepts, theory, and tools for thinking about and working with data.

- Your study system is not unique when it comes to data, and accepting this will speed up your analyses.

This helps your science:

- Think deliberately about data: when you distinguish data questions from research questions, you’ll learn how and who to ask for help

- Save heartache: you don’t have to reinvent the wheel

- Save time: when you expect there’s a better way to do what you are doing, you’ll find the solution faster. Focus on the science.

2. Open data science tools exist

- Data science tools that enable open science are game-changing for analysis, collaboration and communication.

- Open science is “the concept of transparency at all stages of the research process, coupled with free and open access to data, code, and papers” (Hampton et al. 2015)

- For empirical researchers: transparency checklist (https://eltedecisionlab.shinyapps.io/TransparencyChecklist/)

- Repositories such as the Open Science Framework (https://osf.io/preregistration) offer preregistration templates and the tools to archive your projects

This helps your science:

- Blogpost: Seven Reasons To Work Reproducibly (written by the Center of Open Science): https://cos.io/blog/seven-reasons-work-reproducibly/

- Have confidence in your analyses from this traceable, reusable record

- Save time through automation, thinking ahead of your immediate task, reduced bookkeeping, and collaboration

- Take advantage of convenient access: working openly online is like having an extended memory _ Making your data and code publicly available can increase the impact of research.

3. Learn these tools with collaborators and community (redefined):

- Your most important collaborator is Future You.

- Community should also be beyond the colleagues in your field.

- Learn from, with, and for others.

This helps your science:

- If you learn to talk about your data, you’ll find solutions faster.

- Build confidence: these skills are transferable beyond your science.

- Be empathetic and inclusive and build a network of allies

Build and/or join a local coding community

Join existing communities locally and online, and start local chapters with friends!

-

RLadies Dammam. Informal but efficient communities centered on R data analysis meant to be inclusive and supportive.

These meetups can be for skill-sharing, showcasing how people work, or building community so you can troubleshoot together. They can be an informal “hacky hour” at a cafe or pub!

Open Science Community Saudi Arabia

Open Science Community Saudi Arabia (OSCSA) was established in line with Saudi Arabia’s Vision 2030, which focuses on installing values, enhancing knowledge and improving equal access to education. It aims to provide a place where newcomers and experienced peers interact, inspire each other to embed open science practices and values in their workflows and provide feedback on policies, infrastructures, and support services. Our community is part of the International Network of Open Science & Scholarship Communities (INOSC).

Why Join the Community?

- Learn about Open Science practices through Workshops/Meetings.

- Support to lead your own project, and provide more visibility and discoverability for your work.

- OSCSA helps you to get connected with like-minded people from other communnities that adopt Open Science practices (e.g. Turing Way (UK), Carpentries, ArabR, …).

- Nominate yourself in the and vote for the international International Network of Open Science & Scholarship (INOSC) Board

Going further / Bibliography

- The Replication Crisis in Wikipedia

- A special issue in Science on Reproducibility

- Open Science And Reproducibility: presentation by Alexandra Sarafoglou (PhD student, UvA)

Key Points

Make your data and code available to others

Make your analyses reproducible

Make a sharp distincion between exploratory and confirmatory research

Introducing R and RStudio IDE

Overview

Teaching: 30 min

Exercises: 15 minQuestions

Why use R?

Why use RStudio and how does it differ from R?

Objectives

Know advantages of analyzing data in R

Know advantages of using RStudio

Create an RStudio project, and know the benefits of working within a project

Be able to customize the RStudio layout

Be able to locate and change the current working directory with

getwd()andsetwd()Compose an R script file containing comments and commands

Understand what an R function is

Locate help for an R function using

?,??, andargs()

Getting ready to use R for the first time

In this lesson we will take you through the very first things you need to get R working.

Tip: This lesson works best on the cloud

Remember, these lessons assume we are using the pre-configured virtual machine instances provided to you at a genomics workshop. Much of this work could be done on your laptop, but we use instances to simplify workshop setup requirements, and to get you familiar with using the cloud (a common requirement for working with big data). Visit the Genomics Workshop setup page for details on getting this instance running on your own, or for the info you need to do this on your own computer.

A Brief History of R

R has been around since 1995, and was created by Ross Ihaka and Robert Gentleman at the University of Auckland, New Zealand. R is based off the S programming language developed at Bell Labs and was developed to teach intro statistics. See this slide deck by Ross Ihaka for more info on the subject.

Advantages of using R

At more than 20 years old, R is fairly mature and growing in popularity. However, programming isn’t a popularity contest. Here are key advantages of analyzing data in R:

- R is open source. This means R is free - an advantage if you are at an institution where you have to pay for your own MATLAB or SAS license. Open source, is important to your colleagues in parts of the world where expensive software in inaccessible. It also means that R is actively developed by a community (see r-project.org), and there are regular updates.

- R is widely used. Ok, maybe programming is a popularity contest. Because, R is used in many areas (not just bioinformatics), you are more likely to find help online when you need it. Chances are, almost any error message you run into, someone else has already experienced.

- R is powerful. R runs on multiple platforms (Windows/MacOS/Linux). It can work with much larger datasets than popular spreadsheet programs like Microsoft Excel, and because of its scripting capabilities is far more reproducible. Also, there are thousands of available software packages for science, including genomics and other areas of life science.

Discussion: Your experience

What has motivated you to learn R? Have you had a research question for which spreadsheet programs such as Excel have proven difficult to use, or where the size of the data set created issues?

Introducing RStudio Server

In these lessons, we will be making use of a software called RStudio, an Integrated Development Environment (IDE). RStudio, like most IDEs, provides a graphical interface to R, making it more user-friendly, and providing dozens of useful features. We will introduce additional benefits of using RStudio as you cover the lessons. In this case, we are specifically using RStudio Server, a version of RStudio that can be accessed in your web browser. RStudio Server has the same features of the Desktop version of RStudio you could download as standalone software.

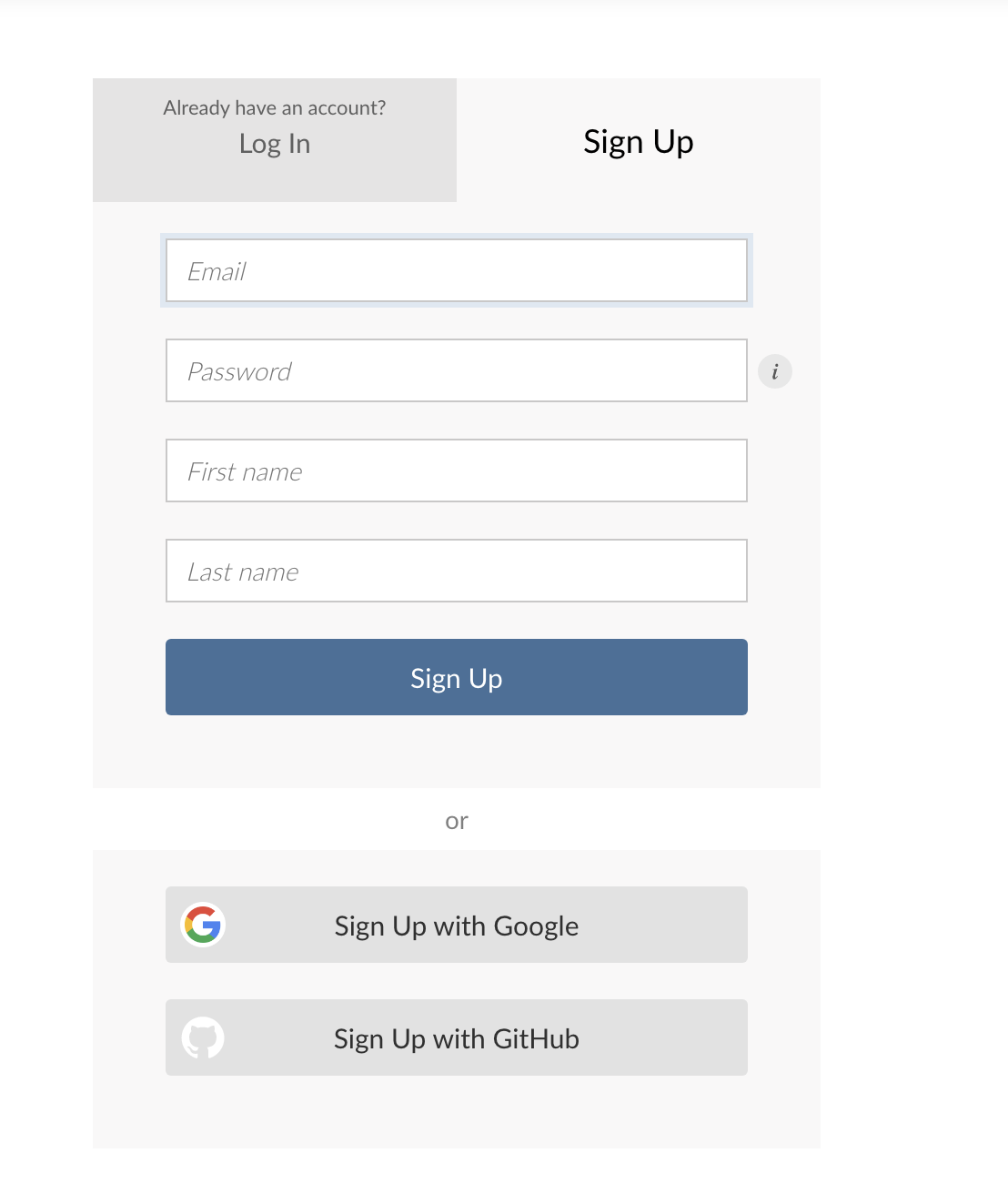

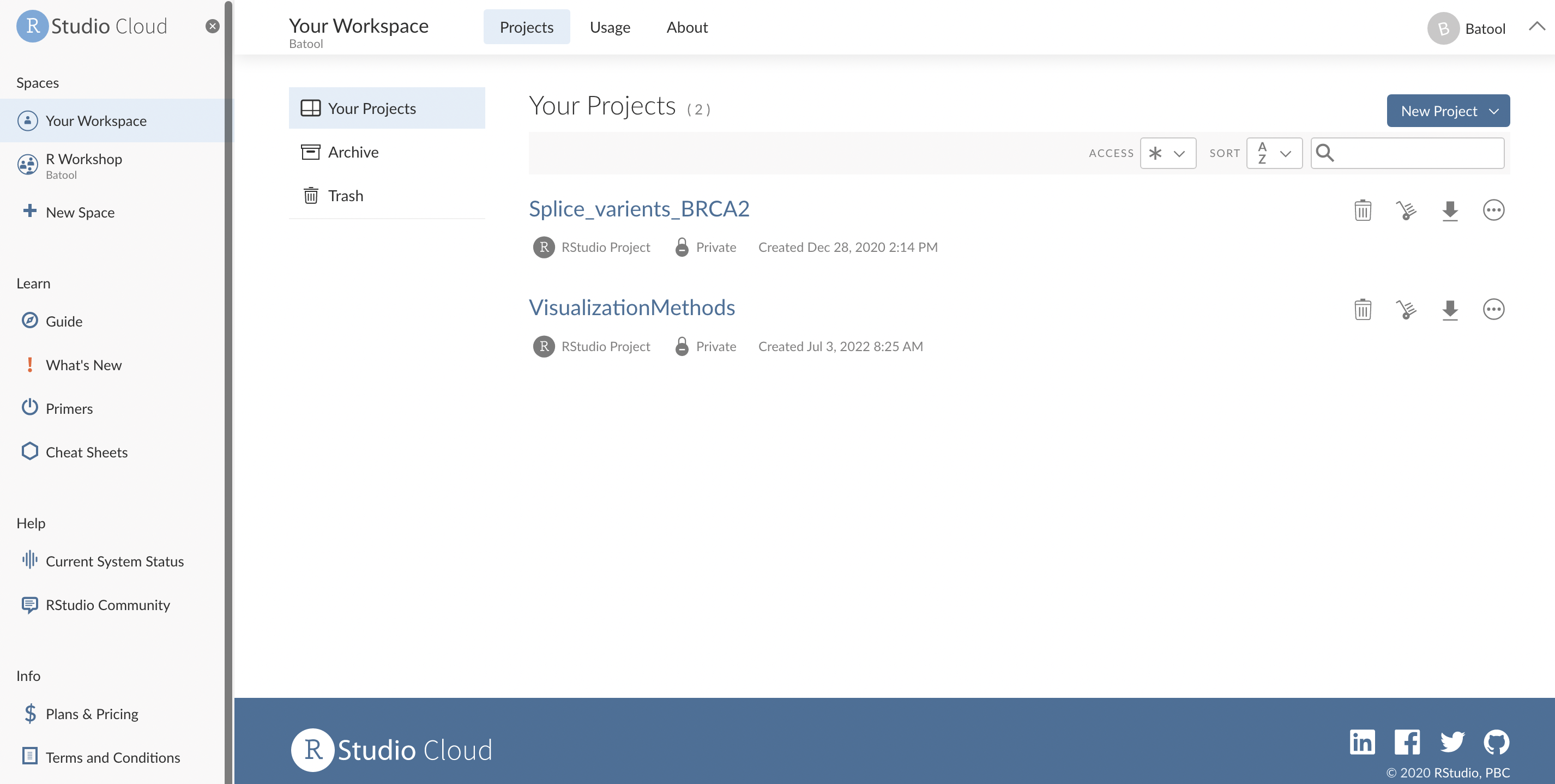

Log on to RStudio Cloud

Sign up on RStudio Cloud using either Google or GitHub.

Tip: If you have an account in GitHub, you are recommanded to use Github rather than Google.

You should now be looking at a page that will allow you to login to the RStudio cloud:

After signing up with GitHub, you should now see the RStudio Cloud:

Tip: Make sure there are no spaces before or after your URL or

your web browser may interpret it as a search query.

You should now be looking at a page that will allow you to login to the RStudio server:

Enter your user credentials and click Sign In. The credentials for the genomics Data Carpentry instances will be provided by your instructors.

You should now see the RStudio interface:

Create an RStudio project

One of the first benefits we will take advantage of in RStudio is something called an RStudio Project. An RStudio project allows you to more easily:

- Save data, files, variables, packages, etc. related to a specific analysis project

- Restart work where you left off

- Collaborate, especially if you are using version control such as git.

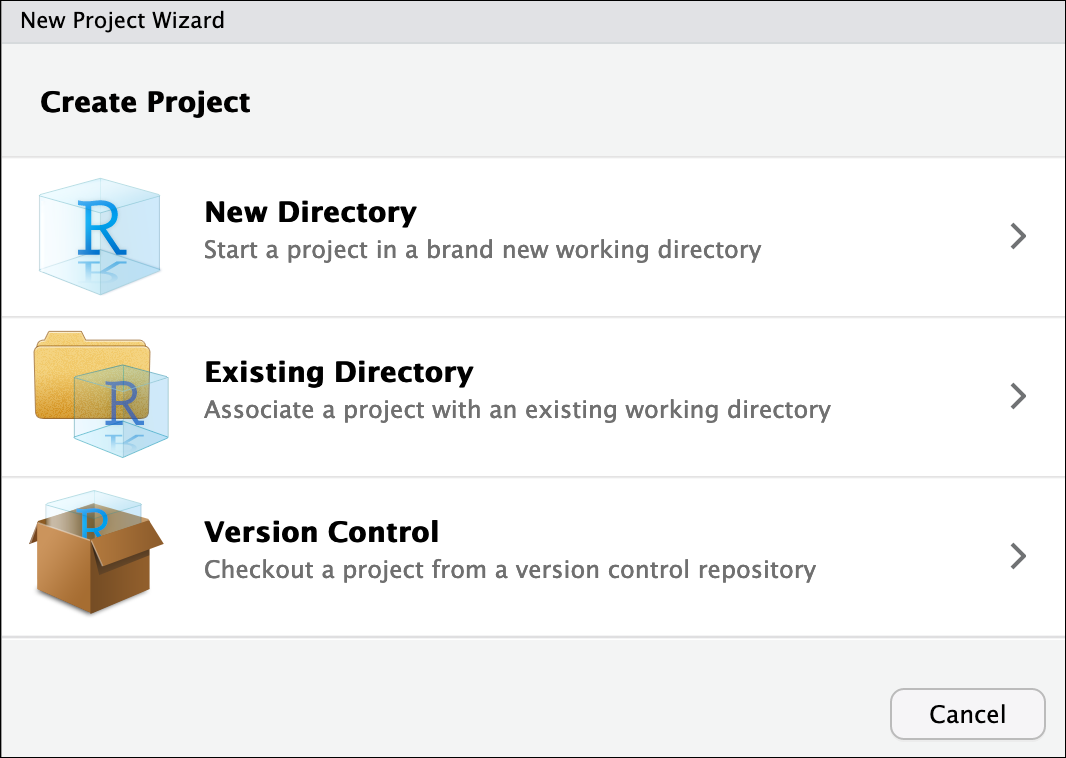

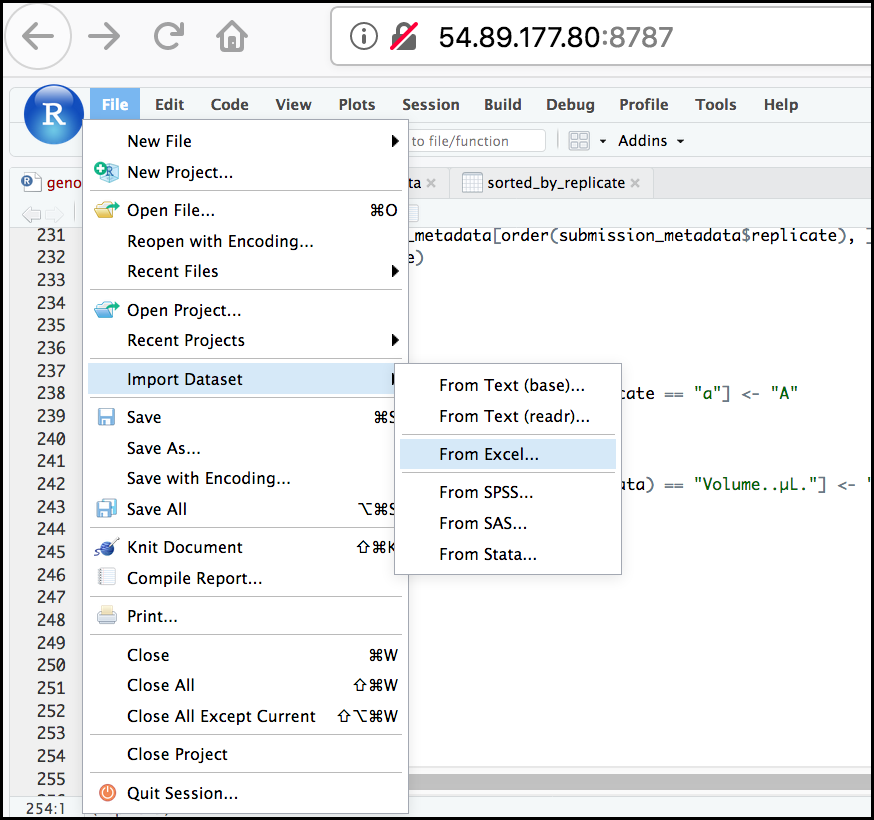

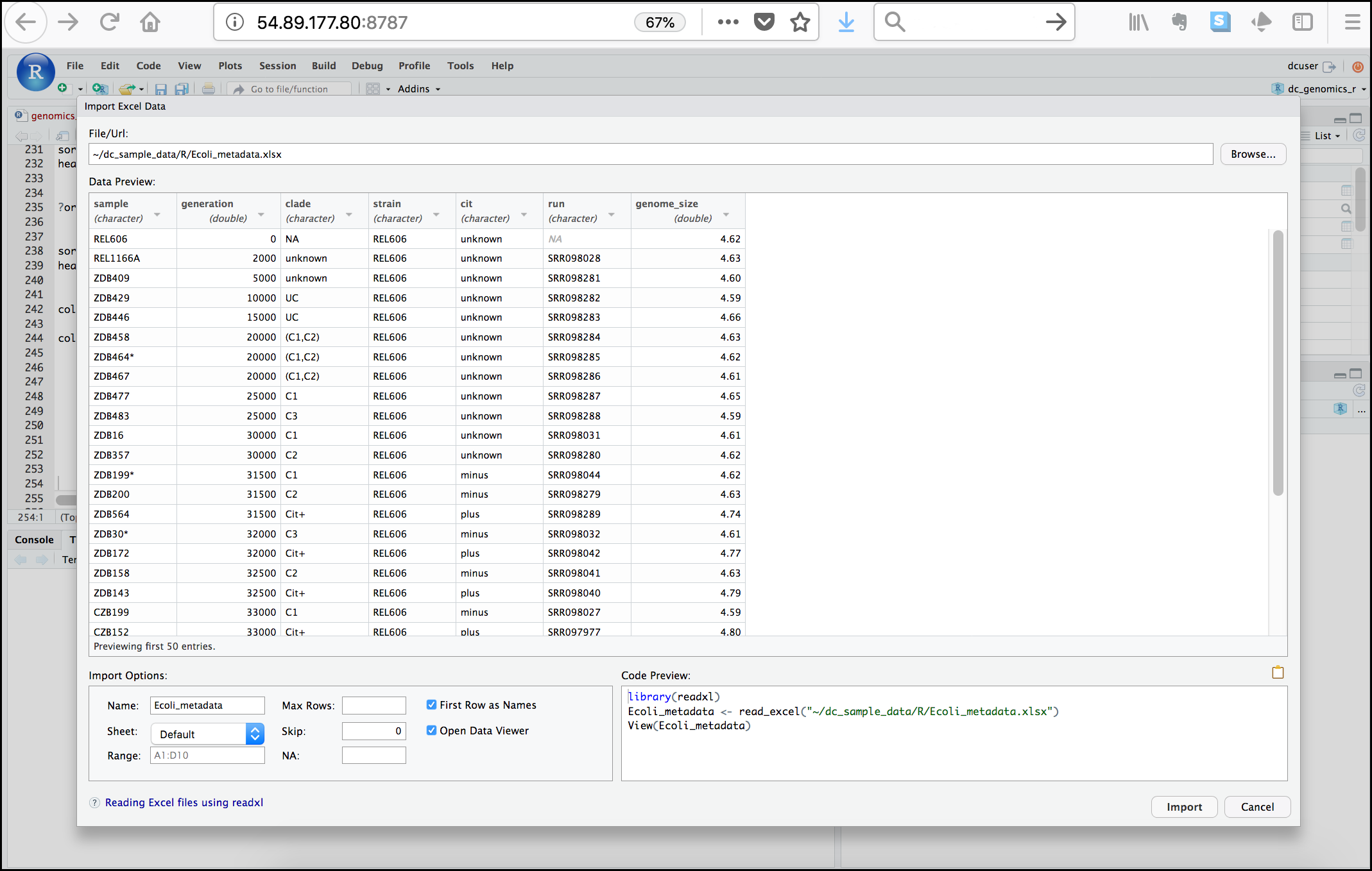

- To create a project, go to the File menu, and click New Project....

-

In the window that opens select New Directory, then New Project. For “Directory name:” enter dc_genomics_r. For “Create project as subdirectory of”, click Browse... and then click Choose which will select your home directory “~”.

-

Finally click Create Project. In the “Files” tab of your output pane (more about the RStudio layout in a moment), you should see an RStudio project file, dc_genomics_r.Rproj. All RStudio projects end with the “.Rproj” file extension.

Tip: Make your project more reproducible with renv

One of the most wonderful and also frustrating aspects of working with R is managing packages. We will talk more about them, but packages (e.g. ggplot2) are add-ons that extend what you can do with R. Unfortunately it is very common that you may run into versions of R and/or R packages that are not compatible. This may make it difficult for someone to run your R script using their version of R or a given R package, and/or make it more difficult to run their scripts on your machine. renv is an RStudio add-on that will associate your packages and project so that your work is more portable and reproducible. To turn on renv click on the Tools menu and select Project Options. Under Enviornments check off “Use renv with this project” and follow any installation instructions.

Creating your first R script

Now that we are ready to start exploring R, we will want to keep a record of the commands we are using. To do this we can create an R script:

Click the File menu and select New File and then R Script. Before we go any further, save your script by clicking the save/disk icon that is in the bar above the first line in the script editor, or click the File menu and select save. In the “Save File” window that opens, name your file “genomics_r_basics”. The new script genomics_r_basics.R should appear under “files” in the output pane. By convention, R scripts end with the file extension .R.

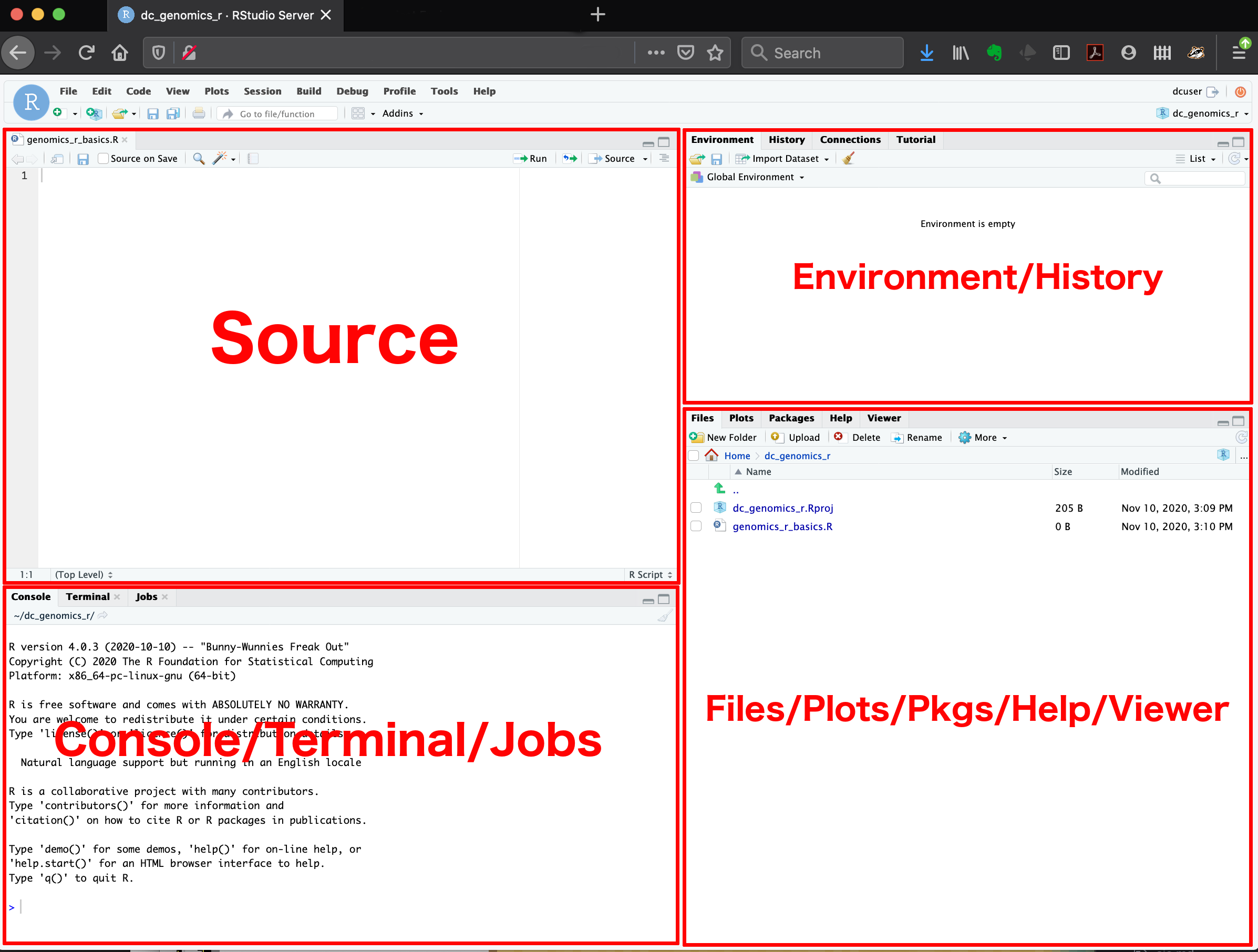

Overview and customization of the RStudio layout

Here are the major windows (or panes) of the RStudio environment:

- Source: This pane is where you will write/view R scripts. Some outputs

(such as if you view a dataset using

View()) will appear as a tab here. - Console/Terminal/Jobs: This is actually where you see the execution of commands. This is the same display you would see if you were using R at the command line without RStudio. You can work interactively (i.e. enter R commands here), but for the most part we will run a script (or lines in a script) in the source pane and watch their execution and output here. The “Terminal” tab give you access to the BASH terminal (the Linux operating system, unrelated to R). RStudio also allows you to run jobs (analyses) in the background. This is useful if some analysis will take a while to run. You can see the status of those jobs in the background.

- Environment/History: Here, RStudio will show you what datasets and objects (variables) you have created and which are defined in memory. You can also see some properties of objects/datasets such as their type and dimensions. The “History” tab contains a history of the R commands you’ve executed R.

- Files/Plots/Packages/Help/Viewer: This multipurpose pane will show you the contents of directories on your computer. You can also use the “Files” tab to navigate and set the working directory. The “Plots” tab will show the output of any plots generated. In “Packages” you will see what packages are actively loaded, or you can attach installed packages. “Help” will display help files for R functions and packages. “Viewer” will allow you to view local web content (e.g. HTML outputs).

Tip: Uploads and downloads in the cloud

In the “Files” tab you can select a file and download it from your cloud instance (click the “more” button) to your local computer. Uploads are also possible.

All of the panes in RStudio have configuration options. For example, you can minimize/maximize a pane, or by moving your mouse in the space between panes you can resize as needed. The most important customization options for pane layout are in the View menu. Other options such as font sizes, colors/themes, and more are in the Tools menu under Global Options.

You are working with R

Although we won’t be working with R at the terminal, there are lots of reasons to. For example, once you have written an RScript, you can run it at any Linux or Windows terminal without the need to start up RStudio. We don’t want you to get confused - RStudio runs R, but R is not RStudio. For more on running an R Script at the terminal see this Software Carpentry lesson.

Getting to work with R: navigating directories

Now that we have covered the more aesthetic aspects of RStudio, we can get to work using some commands. We will write, execute, and save the commands we learn in our genomics_r_basics.R script that is loaded in the Source pane. First, lets see what directory we are in. To do so, type the following command into the script:

getwd()

To execute this command, make sure your cursor is on the same line the command is written. Then click the Run button that is just above the first line of your script in the header of the Source pane.

In the console, we expect to see the following output*:

[1] "/home/dcuser/dc_genomics_r"

* Notice, at the Console, you will also see the instruction you executed above the output in blue.

Since we will be learning several commands, we may already want to keep some

short notes in our script to explain the purpose of the command. Entering a #

before any line in an R script turns that line into a comment, which R will

not try to interpret as code. Edit your script to include a comment on the

purpose of commands you are learning, e.g.:

# this command shows the current working directory

getwd()

Exercise: Work interactively in R

What happens when you try to enter the

getwd()command in the Console pane?Solution

You will get the same output you did as when you ran

getwd()from the source. You can run any command in the Console, however, executing it from the source script will make it easier for us to record what we have done, and ultimately run an entire script, instead of entering commands one-by-one.

For the purposes of this exercise we want you to be in the directory "/home/dcuser/R_data".

What if you weren’t? You can set your home directory using the setwd()

command. Enter this command in your script, but don’t run this yet.

# This sets the working directory

setwd()

You may have guessed, you need to tell the setwd() command

what directory you want to set as your working directory. To do so, inside of

the parentheses, open a set of quotes. Inside the quotes enter a / which is

the root directory for Linux. Next, use the Tab key, to take

advantage of RStudio’s Tab-autocompletion method, to select home, dcuser,

and dc_genomics_r directory. The path in your script should look like this:

# This sets the working directory

setwd("/home/dcuser/dc_genomics_r")

When you run this command, the console repeats the command, but gives you no

output. Instead, you see the blank R prompt: >. Congratulations! Although it

seems small, knowing what your working directory is and being able to set your

working directory is the first step to analyzing your data.

Tip: Never use

setwd()Wait, what was the last 2 minutes about? Well, setting your working directory is something you need to do, you need to be very careful about using this as a step in your script. For example, what if your script is being on a computer that has a different directory structure? The top-level path in a Unix file system is root

/, but on Windows it is likelyC:\. This is one of several ways you might cause a script to break because a file path is configured differently than your script anticipates. R packages like here and file.path allow you to specify file paths is a way that is more operating system independent. See Jenny Bryan’s blog post for this and other R tips.

Using functions in R, without needing to master them

A function in R (or any computing language) is a short

program that takes some input and returns some output. Functions may seem like an advanced topic (and they are), but you have already

used at least one function in R. getwd() is a function! The next sections will help you understand what is happening in

any R script.

Exercise: What do these functions do?

Try the following functions by writing them in your script. See if you can guess what they do, and make sure to add comments to your script about your assumed purpose.

dir()sessionInfo()date()Sys.time()Solution

dir()# Lists files in the working directorysessionInfo()# Gives the version of R and additional info including on attached packagesdate()# Gives the current dateSys.time()# Gives the current timeNotice: Commands are case sensitive!

You have hopefully noticed a pattern - an R function has three key properties:

- Functions have a name (e.g.

dir,getwd); note that functions are case sensitive! - Following the name, functions have a pair of

() - Inside the parentheses, a function may take 0 or more arguments

An argument may be a specific input for your function and/or may modify the

function’s behavior. For example the function round() will round a number

with a decimal:

# This will round a number to the nearest integer

round(3.14)

[1] 3

Getting help with function arguments

What if you wanted to round to one significant digit? round() can

do this, but you may first need to read the help to find out how. To see the help

(In R sometimes also called a “vignette”) enter a ? in front of the function

name:

?round()

The “Help” tab will show you information (often, too much information). You

will slowly learn how to read and make sense of help files. Checking the “Usage” or “Examples”

headings is often a good place to look first. If you look under “Arguments,” we

also see what arguments we can pass to this function to modify its behavior.

You can also see a function’s argument using the args() function:

args(round)

function (x, digits = 0)

NULL

round() takes two arguments, x, which is the number to be

rounded, and a

digits argument. The = sign indicates that a default (in this case 0) is

already set. Since x is not set, round() requires we provide it, in contrast

to digits where R will use the default value 0 unless you explicitly provide

a different value. We can explicitly set the digits parameter when we call the

function:

round(3.14159, digits = 2)

[1] 3.14

Or, R accepts what we call “positional arguments”, if you pass a function

arguments separated by commas, R assumes that they are in the order you saw

when we used args(). In the case below that means that x is 3.14159 and

digits is 2.

round(3.14159, 2)

[1] 3.14

Finally, what if you are using ? to get help for a function in a package not installed on your system, such as when you are running a script which has dependencies.

?geom_point()

will return an error:

Error in .helpForCall(topicExpr, parent.frame()) :

no methods for ‘geom_point’ and no documentation for it as a function

Use two question marks (i.e. ??geom_point()) and R will return

results from a search of the documentation for packages you have installed on your computer

in the “Help” tab. Finally, if you think there

should be a function, for example a statistical test, but you aren’t

sure what it is called in R, or what functions may be available, use

the help.search() function.

Exercise: Searching for R functions

Use

help.search()to find R functions for the following statistical functions. Remember to put your search query in quotes inside the function’s parentheses.

- Chi-Squared test

- Student t-test

- mixed linear model

Solution

While your search results may return several tests, we list a few you might find:

- Chi-Squared test:

stats::Chisquare- Student t-test:

stats::t.test- mixed linear model:

stats::lm.glm

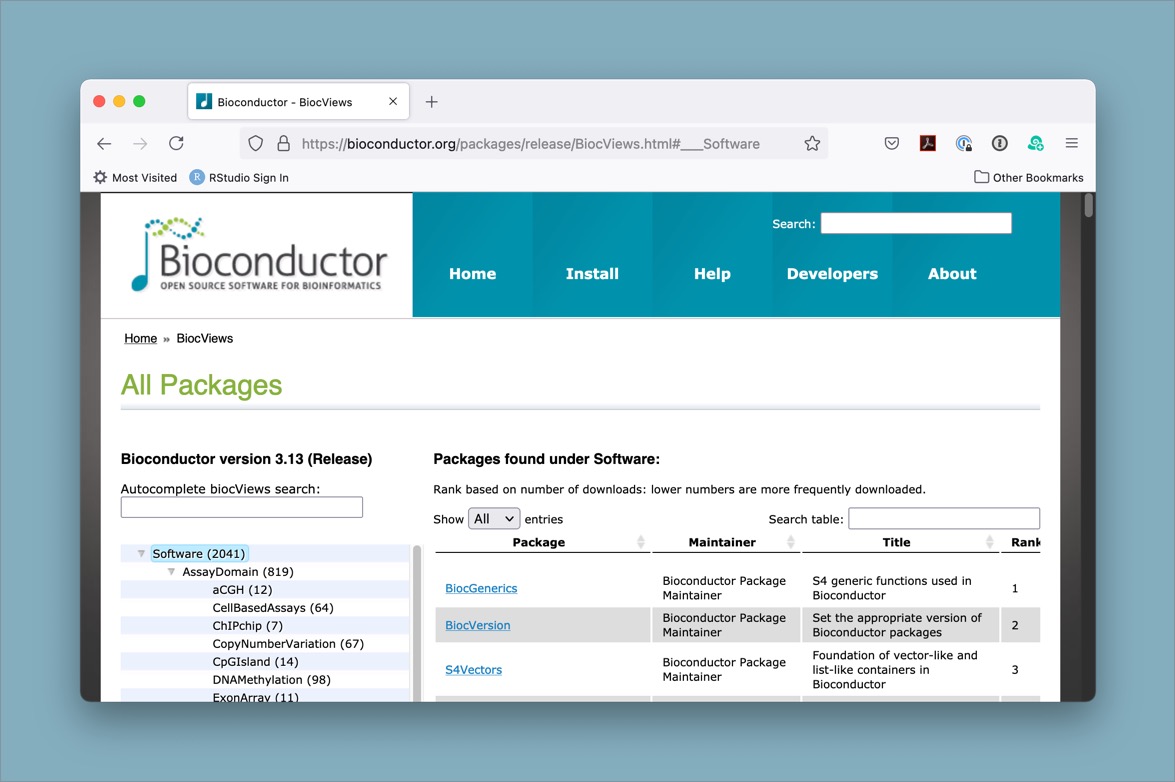

We will discuss more on where to look for the libraries and packages that contain functions you want to use. For now, be aware that two important ones are CRAN - the main repository for R, and Bioconductor - a popular repository for bioinformatics-related R packages.

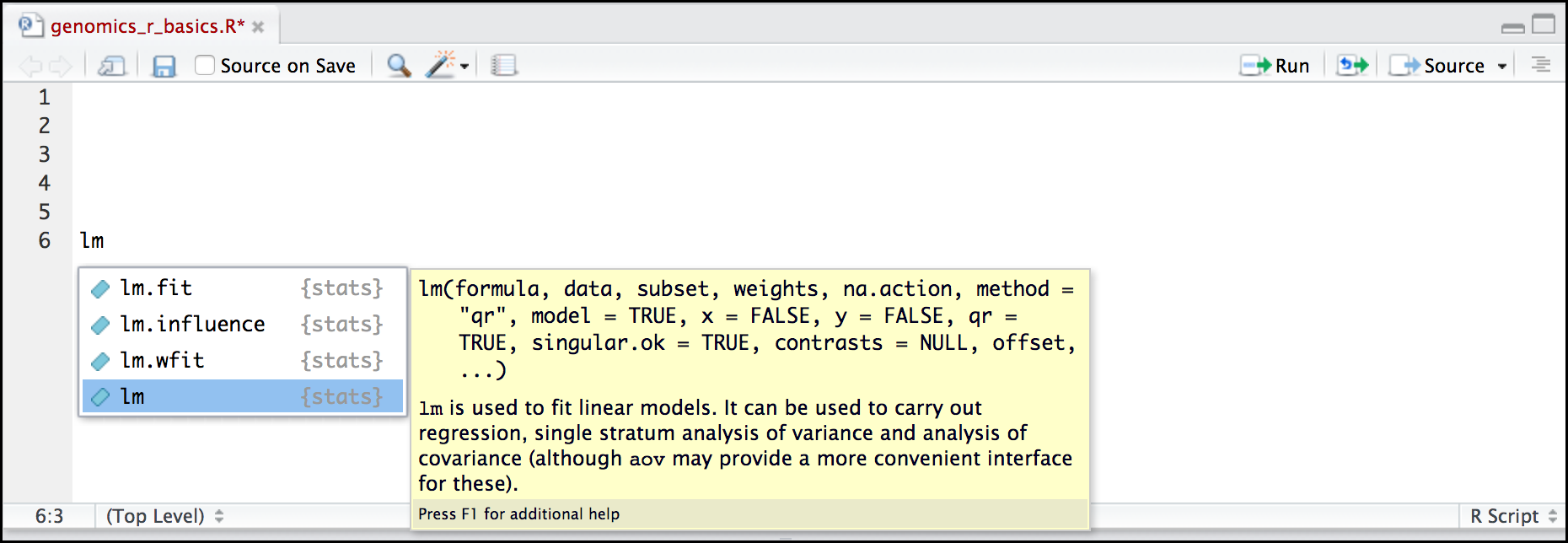

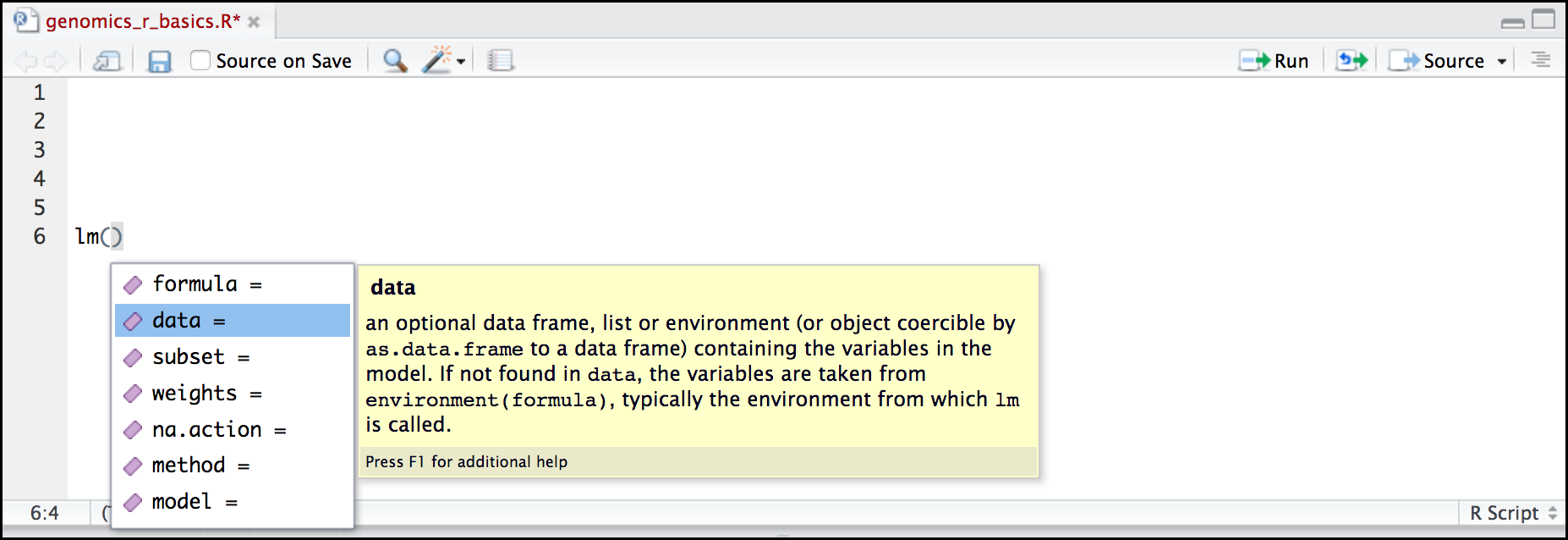

RStudio contextual help

Here is one last bonus we will mention about RStudio. It’s difficult to remember all of the arguments and definitions associated with a given function. When you start typing the name of a function and hit the Tab key, RStudio will display functions and associated help:

Once you type a function, hitting the Tab inside the parentheses will show you the function’s arguments and provide additional help for each of these arguments.

Key Points

R is a powerful, popular open-source scripting language

You can customize the layout of RStudio, and use the project feature to manage the files and packages used in your analysis

RStudio allows you to run R in an easy-to-use interface and makes it easy to find help

Collaborating with Github

Overview

Teaching: 30 min

Exercises: 60 minQuestions

How can I develop and collaborate on code with another scientist?

How can I give access to my code to another collaborator?

How can I keep code synchronised with another scientist?

How can I solve conflicts that arise from that collaboration?

What are Github

Objectives

Be able to create a new repository and share it with another scientist.

Be able to work together on a R script through RStudio and Github integration.

Understand how to make issues and explore the history of a repository.

Table of contents

Introduction

The collaborative power of GitHub and RStudio is really game changing. So far we’ve been collaborating with our most important collaborator: ourselves. But, we are lucky that in science we have so many other collaborators, so let’s learn how to accelerate our collaborations with them through GitHub!

We are going to teach you the simplest way to collaborate with someone, which is for both of you to have privileges to edit and add files to a repository. GitHub is built for software developer teams but we believe that it can also be beneficial to scientists.

We will do this all with a partner, and we’ll walk through some things all together, and then give you a chance to work with your collaborator on your own.

Pair up and work collaboratively

- Make groups of two scientists. They will collaborate through Github.

- Decide who will own the Github repository: this will be the “owner” also referred to as Partner 1.

- The other scientist will be called the “collaborator” also referred to as Partner 2.

- Please write your role on a sticky note and place it on your laptop to remember who you are!

Owner (Partner 1) setup

Create a Github repository

The repository “owner” will connect to Github and create a repository called first-collaboration. We will do this in the same way that we did in the “Version control with git and Github” episode.

Create a gh-pages branch

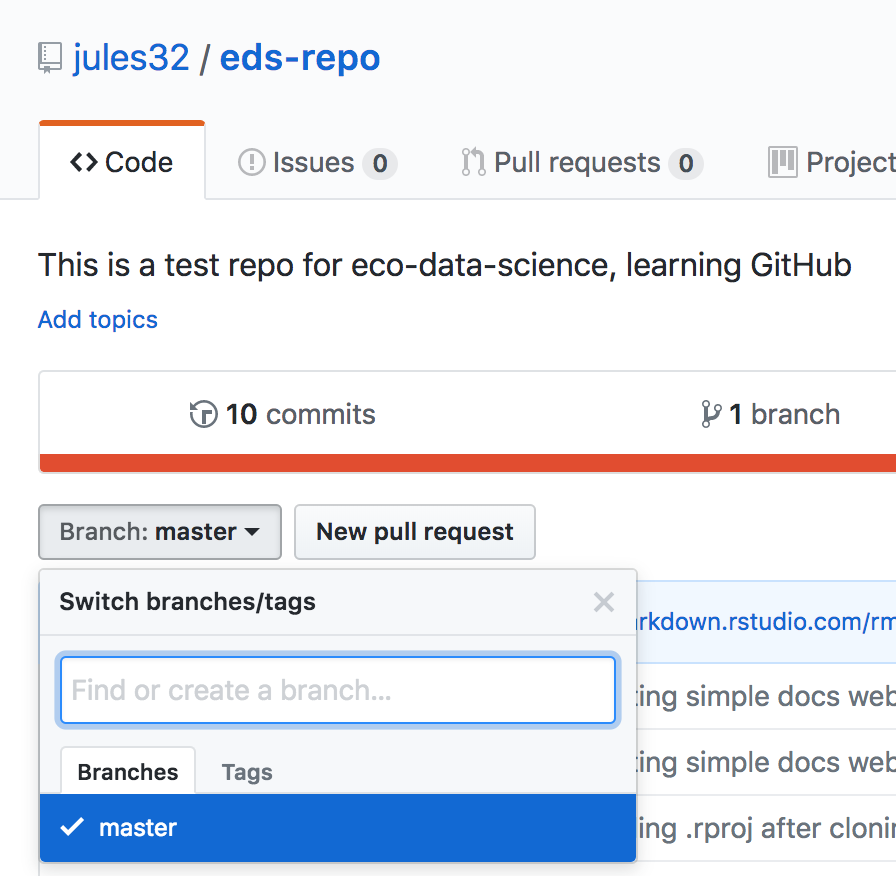

We aren’t going to talk about branches very much, but they are a powerful feature of git and GitHub. I think of it as creating a copy of your work that becomes a parallel universe that you can modify safely because it’s not affecting your original work. And then you can choose to merge the universes back together if and when you want.

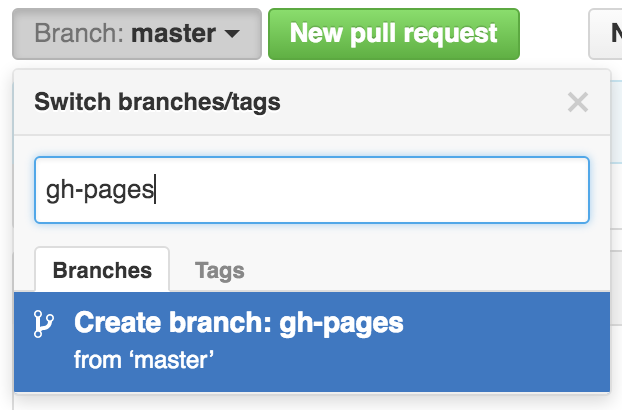

By default, when you create a new repo you begin with one branch, and it is named master. When you create new branches, you can name them whatever you want. However, if you name one gh-pages (all lowercase, with a - and no spaces), this will let you create a website. And that’s our plan. So, owner/partner 1, please do this to create a gh-pages branch:

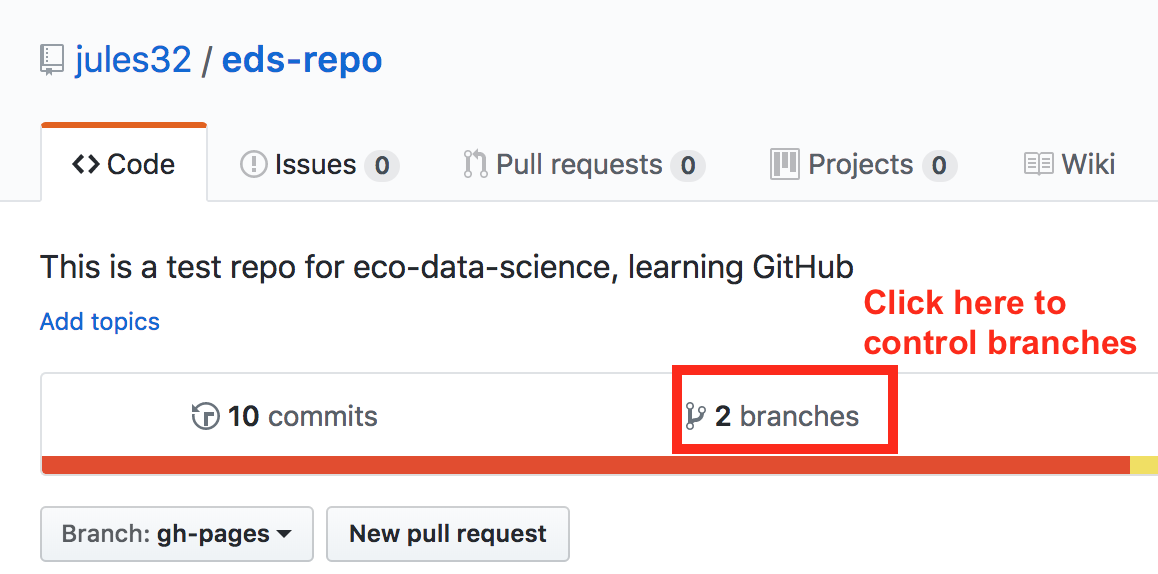

On the homepage for your repo on GitHub.com, click the button that says “Branch:master”. Here, you can switch to another branch (right now there aren’t any others besides master), or create one by typing a new name.

Let’s type gh-pages.

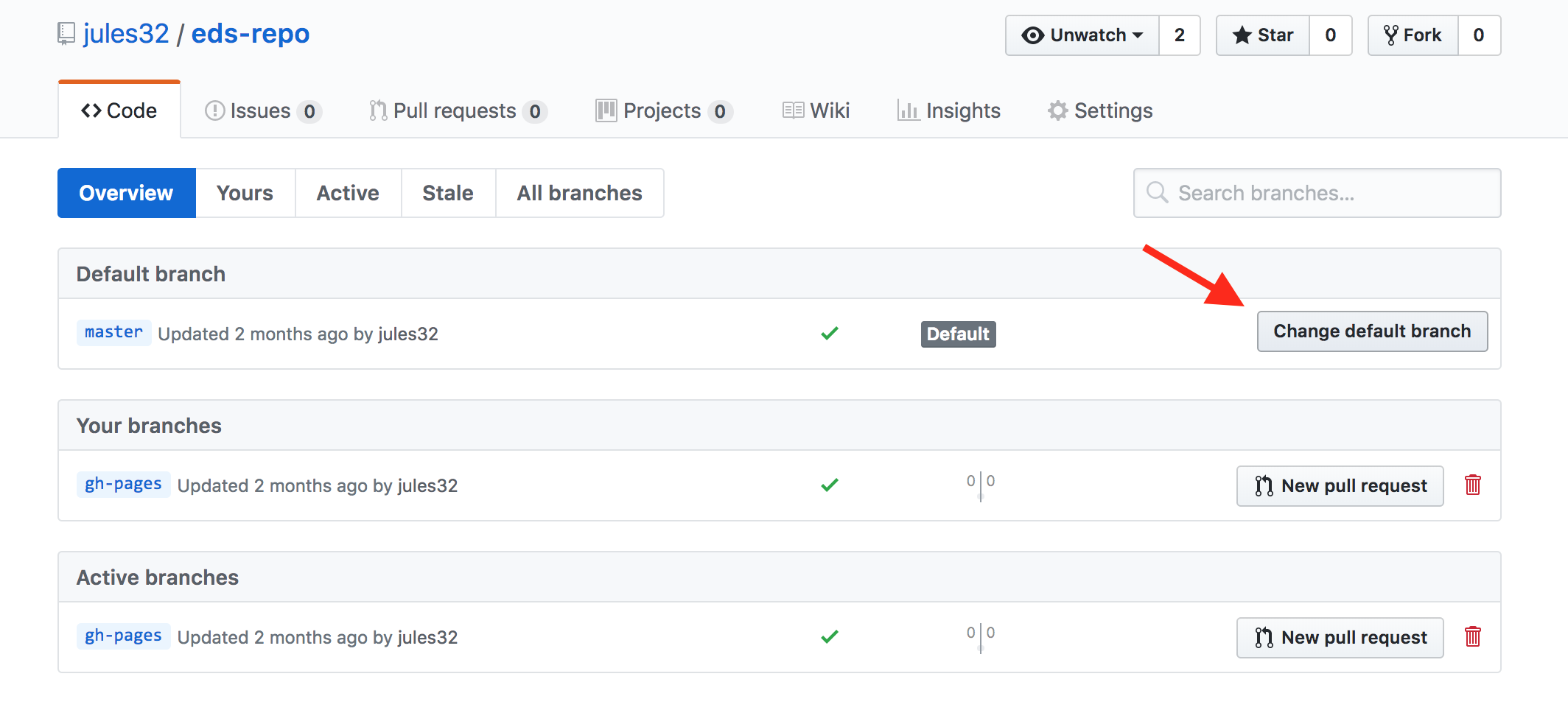

Let’s also change gh-pages to the default branch and delete the master branch: this will be a one-time-only thing that we do here:

First click to control branches:

And then click to change the default branch to gh-pages. I like to then delete the master branch when it has the little red trash can next to it. It will make you confirm that you really want to delete it, which I do!

Give your collaborator administration privileges (Partner 1 and 2)

Now, Partner 1, go into Settings > Collaborators > enter Partner 2’s (your collaborator’s) username.

Partner 2 then needs to check their email and accept as a collaborator. Notice that your collaborator has “Push access to the repository” (highlighted below):

Clone to a new Rproject (Owner Partner 1)

Now let’s have Partner 1 clone the repository to their local computer. We’ll do this through RStudio like we did before (see the “Version control with git and Github:Clone your repository using RStudio” episode section. But, we’ll do this with a final additional step before hitting the “Create Project”: we will select “Open in a new Session”.

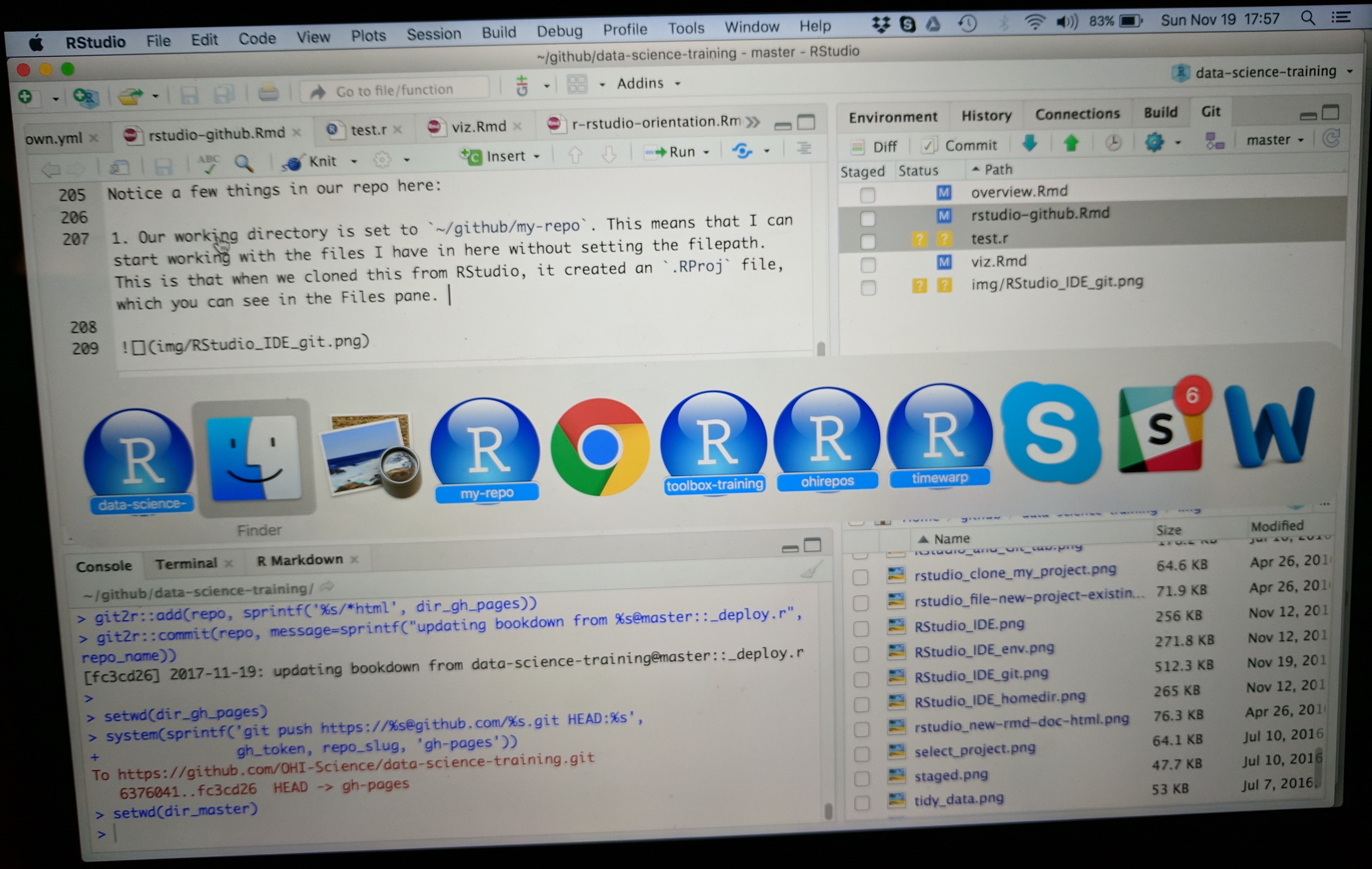

Opening this Project in a new Session opens up a new world of awesomeness from RStudio. Having different RStudio project sessions allows you to keep your work separate and organized. So you can collaborate with this collaborator on this repository while also working on your other repository from this morning. I tend to have a lot of projects going at one time:

Have a look in your git tab.

Like we saw earlier, when you first clone a repo through RStudio, RStudio will add an .Rproj file to your repo. And if you didn’t add a .gitignore file when you originally created the repo on GitHub.com, RStudio will also add this for you. So, Partner 1, let’s go ahead and sync this back to GitHub.com.

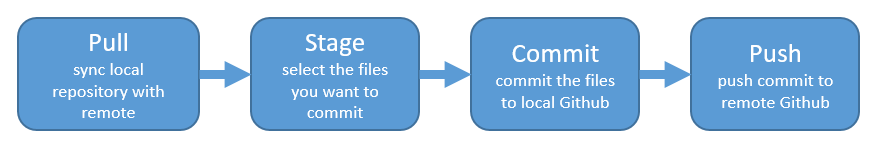

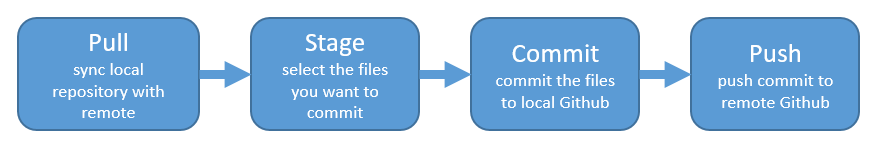

Remember:

Let’s confirm that this was synced by looking at GitHub.com again. You may have to refresh the page, but you should see this commit where you added the .Rproj file.

Collaborator (Partner 2) part

Clone to a new Rproject (Partner 2)

Now it’s Partner 2’s turn! Partner 2, clone this repository following the same steps that Partner 1 just did. When you clone it, RStudio should not create any new files — why? Partner 1 already created and pushed the .Rproj and .gitignore files so they already exist in the repo.

Discussion point

Question: When you clone it, RStudio should not create any new files — why?

Solution

Partner 1 already created and pushed the

.Rprojand.gitignorefiles so they already exist in the repo.

Edit a file and sync (Partner 2)

Let’s have Partner 2 add some information to the README.md. Let’s have them write:

Collaborators:

- Partner 2's name

When we save the README.md, And now let’s sync back to GitHub.

When we inspect on GitHub.com, click to view all the commits, you’ll see commits logged from both Partner 1 and 2!

Discussion point

Questions:

- Would you be able to clone a repository that you are not a collaborator on?

- What do you think would happen? Try it!

- Can you sync back?

Solution

- Yes, you can clone a repository that is publicly available.

- If you try to clone it on your local machine, it does work.

- Unfortunately, if you don’t have write permissions, you cannot contribute. You would have to ask for write/push writes.

State of the Repository

OK, so where do things stand right now? GitHub.com has the most recent versions of all the repository’s files. Partner 2 also has these most recent versions locally. How about Partner 1?

Partner 1 does not have the most recent versions of everything on their computer!.

Discussion point

Question: How can we change that? Or how could we even check?

Solution

PULL !

Let’s have Partner 1 go back to RStudio and Pull. If their files aren’t up-to-date, this will pull the most recent versions to their local computer. And if they already did have the most recent versions? Well, pulling doesn’t cost anything (other than an internet connection), so if everything is up-to-date, pulling is fine too.

I recommend pulling every time you come back to a collaborative repository. Whether you haven’t opened RStudio in a month or you’ve just been away for a lunch break, pull. It might not be necessary, but it can save a lot of heartache later.

Merge conflicts

What kind of heartache are we talking about? Let’s explore.

Stop and watch me create and solve a merge conflict with my Partner 2, and then you will have time to recreate this with your partner.

Within a file, GitHub tracks changes line-by-line. So you can also have collaborators working on different lines within the same file and GitHub will be able to weave those changes into each other – that’s it’s job! It’s when you have collaborators working on the same lines within the same file that you can have merge conflicts. Merge conflicts can be frustrating, but they are actually trying to help you (kind of like R’s error messages). They occur when GitHub can’t make a decision about what should be on a particular line and needs a human (you) to decide. And this is good – you don’t want GitHub to decide for you, it’s important that you make that decision.

Me = partner 1. My co-instructor = partner 2.

Here’s what me and my collaborator are going to do:

- My collaborator and me are first going to pull.

- Then, my collaborator and me navigate to the README file within RStudio.

- My collaborator and me are going to write something in the same file on the same line. We are going to write something in the README file on line 7: for instance, “I prefer R” and “I prefer Python”.

- Save the README file.

- My collaborator is going to pull, stage, commit and push.

- When my collaborator is done, I am going to pull.

- Error! Merge conflict!

I am not allowed to to pull since GitHub is protecting me because if I did successfully pull, my work would be overwritten by whatever my collaborator had written.

GitHub is going to make a human (me in this case) decide. GitHub says, “either commit this work first, or stash it”. Stashing means “ (“save a copy of the README in another folder somewhere outside of this GitHub repository”).

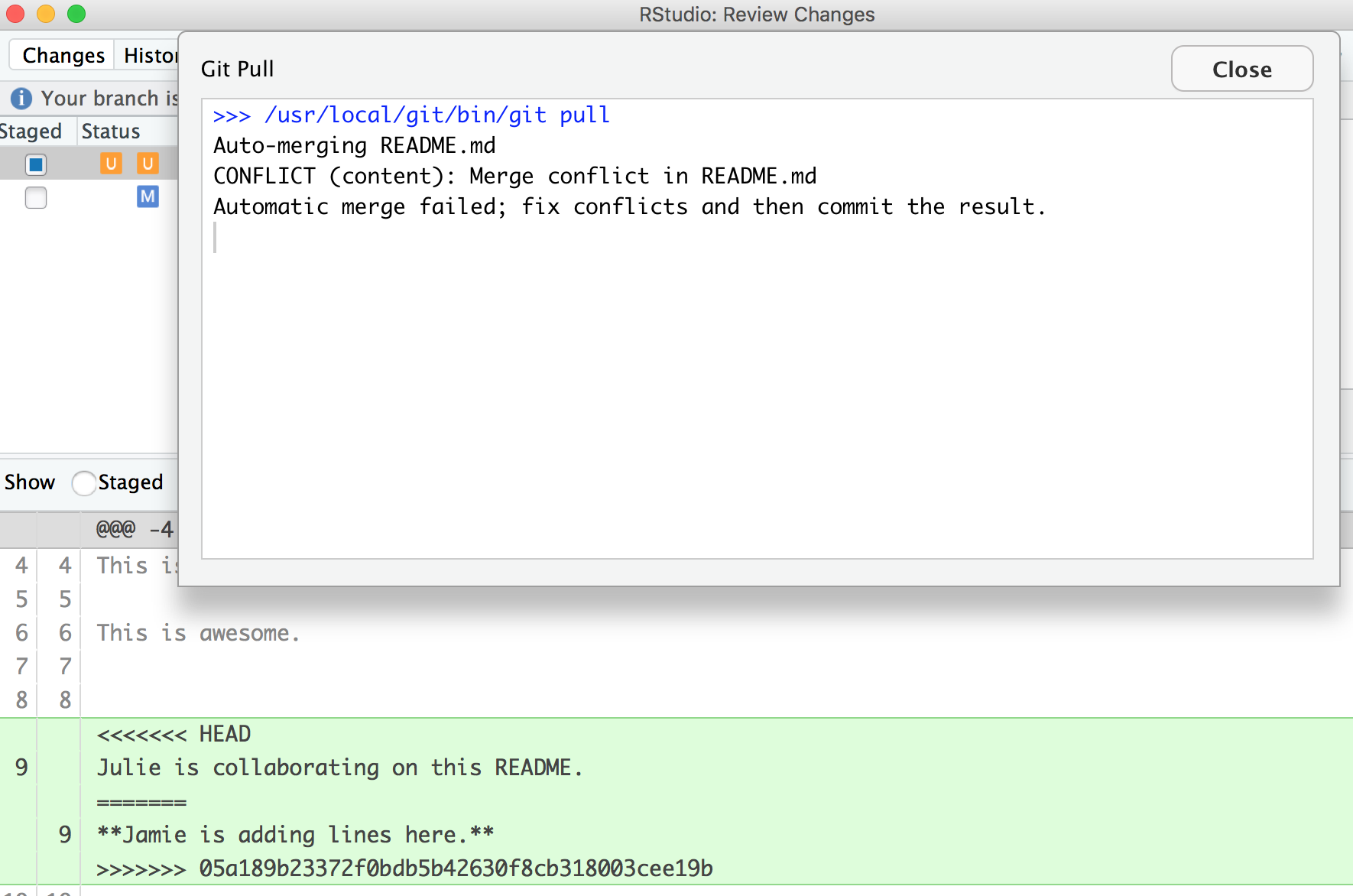

Let’s follow their advice and have me to commit first. Great. Now let’s pull again.

Still not happy!

OK, actually, we’re just moving along this same problem that we know that we’ve created: Both me and my collaborator have both added new information to the same line. You can see that the pop-up box is saying that there is a CONFLICT and the merge has not happened. OK. We can close that window and inspect.

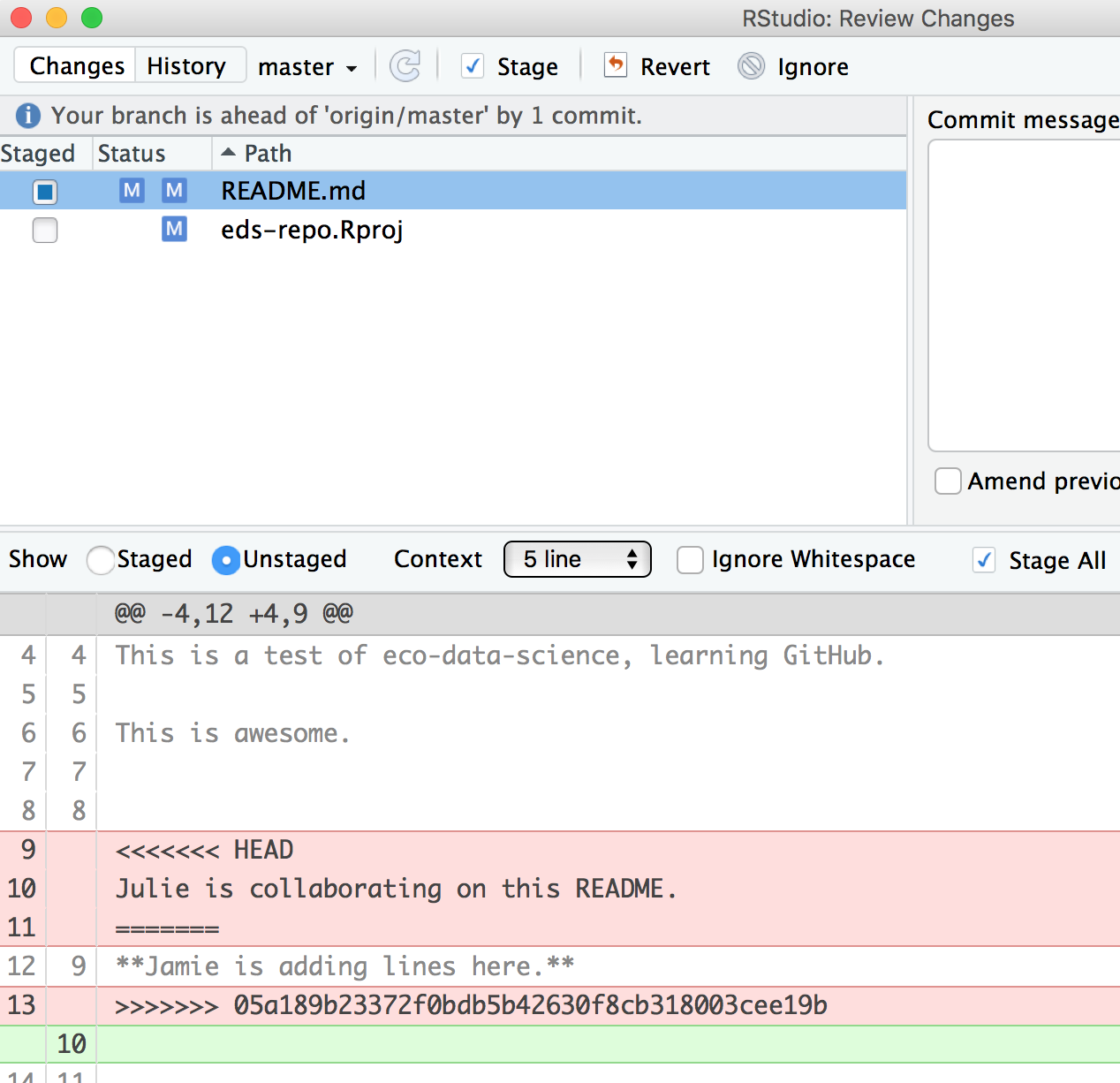

Notice that in the git tab, there are orange Us; this means that there is an unresolved conflict, and it is not staged with a check anymore because modifications have occurred to the file since it has been staged.

Let’s look at the README file itself. We got a preview in the diff pane that there is some new text going on in our README file:

<<<<<<< HEAD

Julie is collaborating on this README.

=======

**Jamie is adding lines here.**

>>>>>>> 05a189b23372f0bdb5b42630f8cb318003cee19b

In this example, I am Jamie and my collaborator is Julie. GitHub is displaying the line that Julie wrote and the line Jamie wrote separated by =======. So these are the two choices that Partner 2 has to decide between, which one do you want to keep? Where where does this decision start and end? The lines are bounded by <<<<<<<HEAD and >>>>>>>long commit identifier.

So, to resolve this merge conflict, my collaborator has to chose, and delete everything except the line they want. So, they will delete the <<<<<<HEAD, =====, >>>>long commit identifier and one of the lines that they don’t want to keep.

Do that, and let’s try again. In this example, we’ve kept my (Jamie’s) line:

Then be I need to stage and write a commit message. I often write “resolving merge conflict” or something so I know what I was up to. When I stage the file, notice how now my edits look like a simple line replacement (compare with the image above before it was re-staged):

Your turn

Exercise

- Create a merge conflict with your partner, like we did in the example above.

- Try to fix it.

- Try other ways to get and solve merge conflicts. For example, when you get the following error message, try both ways (commit or stash. Stash means copy/move it somewhere else, for example, on your Desktop temporarily).

How do you avoid merge conflicts?

I’d say pull often, commit and sync often.

Also, talk with your collaborators. Even on a very collaborative project (e.g. a scientific publication), you are actually rarely working on the exact same file at any given time. And if you are, make sure you talk in-person or through chat applications (Slack, Gitter, Whatsapp, etc.).

But merge conflicts will occur and some of them will be heartbreaking and demoralizing. They happen to me when I collaborate with myself between my work computer and laptop. So protect yourself by pulling and syncing often!

Create your collaborative website

OK. Let’s have Partner 2 create a new RMarkdown file. Here’s what they will do:

- Pull!

- Create a new RMarkdown file and name it

index.Rmd. Make sure it’s all lowercase, and namedindex.Rmd. This will be the homepage for our website! - Maybe change the title inside the Rmd, call it “Our website”

- Knit!

- Save and sync your .Rmd and your .html files: pull, stage, commit, pull, push.

- Go to GitHub.com and go to your rendered website! Where is it? Figure out your website’s url from your github repo’s url. For example:

- my github repo: https://github.com/jules32/collab-research

- my website url: https://jules32.github.io/collab-research/

- note that the url starts with my username.github.io

So cool! On websites, if something is called index.html, that defaults to the home page. So https://jules32.github.io/collab-research/ is the same as https://jules32.github.io/collab-research/index.html. If you name your RMarkdown file my_research.Rmd, the url will become https://jules32.github.io/collab-research/my_research.html.

Your turn

Exercise

Here is some collaborative analysis you can do on your own. We’ll be playing around > with airline flights data, so let’s get setup a bit.

- Person 1: clean up the README to say something about you two, the authors.

- Person 2: edit the

index.Rmdor create a new RMarkdown file: maybe add something about the authors, and knit it.- Both of you: sync to GitHub.com (pull, stage, commit, push).

- Both of you: once you’ve both synced (talk to each other about it!), pull again. You should see each others’ work on your computer.

- Person 1: in the RMarkdown file, add a bit of the plan. We’ll be exploring the

nycflights13dataset. This is data on flights departing New York City in 2013.- Person 2: in the README, add a bit of the plan.

- Both of you: sync

Explore on GitHub.com

Now, let’s look at the repo again on GitHub.com. You’ll see those new files appear, and the commit history has increased.

Commit History

You’ll see that the number of commits for the repo has increased, let’s have a look. You can see the history of both of you.

Blame

Now let’s look at a single file, starting with the README file. We’ve explored the “Raw” and “History” options in the top-right of the file, but we haven’t really explored the “Blame” option. Let’s look now. Blame shows you line-by-line who authored the most recent version of the file you see. This is super useful if you’re trying to understand logic; you know who to ask for questions or attribute credit.

Issues

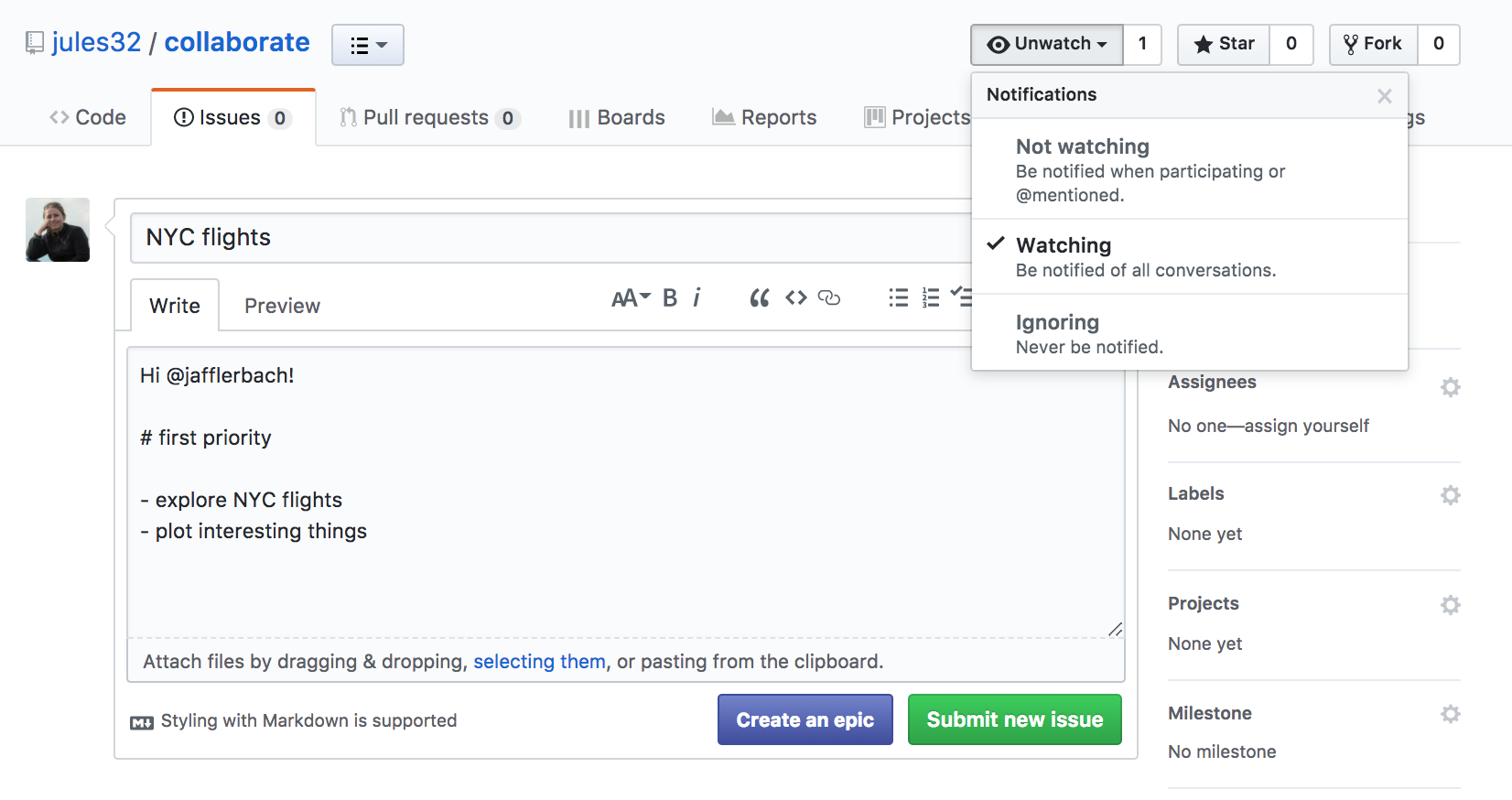

Now let’s have a look at issues. This is a way you can communicate to others about plans for the repo, questions, etc. Note that issues are public if the repository is public.

Let’s create a new issue with the title “NYC flights”.

In the text box, let’s write a note to our collaborator. You can use the Markdown syntax in this text box, which means all of your header and bullet formatting will come through. You can also select these options by clicking them just above the text box.

Let’s have one of you write something here. I’m going to write:

Hi @jafflerbach!

# first priority

- explore NYC flights

- plot interesting things

Note that I have my collaborator’s GitHub name with a @ symbol. This is going to email her directly so that she sees this issue. I can click the “Preview” button at the top left of the text box to see how this will look rendered in Markdown. It looks good!

Now let’s click submit new issue.

On the right side, there are a bunch of options for categorizing and organizing your issues. You and your collaborator may want to make some labels and timelines, depending on the project.

Another feature about issues is whether you want any notifications to this repository. Click where it says “Unwatch” up at the top. You’ll see three options: “Not watching”, “Watching”, and “Ignoring”. By default, you are watching these issues because you are a collaborator to the repository. But if you stop being a big contributor to this project, you may want to switch to “Not watching”. Or, you may want to ask an outside person to watch the issues. Or you may want to watch another repo yourself!

Let’s have Person 2 respond to the issue affirming the plan.

NYC flights exploration

Let’s continue this workflow with your collaborator, syncing to GitHub often and practicing what we’ve learned so far. We will get started together and then you and your collaborator will work on your own.

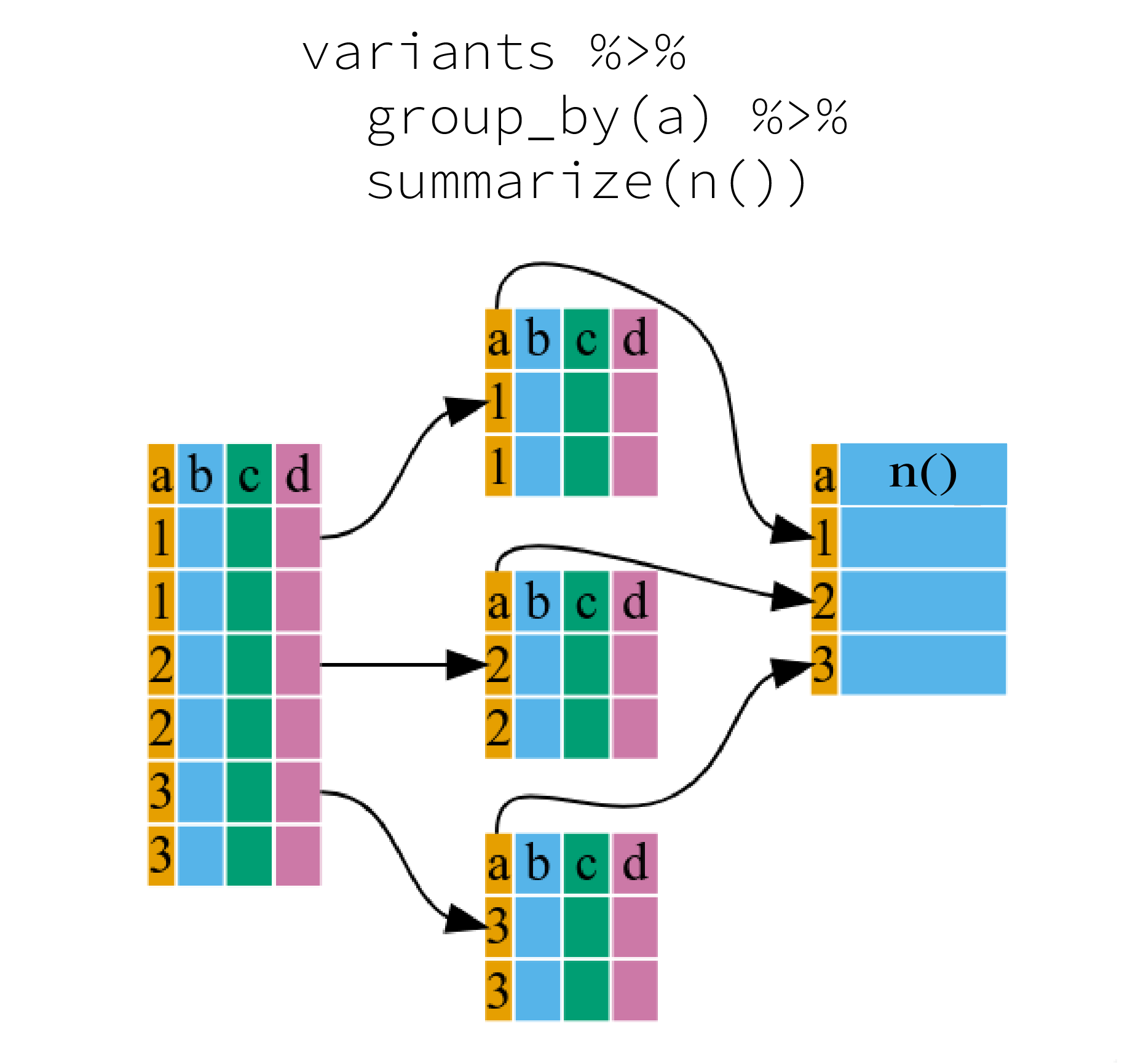

Here’s what we’ll be doing (from R for Data Science’s Transform Chapter):

Data: You will be exploring a dataset on flights departing New York City in 2013. These data are actually in a package called nycflights13, so we can load them the way we would any other package.

Let’s have Person 1 write this in the RMarkdown document (Partner 2 just listen for a moment; we will sync this to you in a moment).

library(nycflights13) # install.packages('nycflights13')

library(tidyverse)

This data frame contains all flights that departed from New York City in 2013. The data comes from the US Bureau of Transportation Statistics, and is documented in ?flights.

flights

Let’s select all flights on January 1st with:

filter(flights, month == 1, day == 1)

To use filtering effectively, you have to know how to select the observations that you want using the comparison operators. R provides the standard suite: >, >=, <, <=, != (not equal), and == (equal). We learned these operations yesterday. But there are a few others to learn as well.

Sync

Sync this RMarkdown back to GitHub so that your collaborator has access to all these notes.

Partner 2 pull

Now is the time to pull!

Partner 2 will continue with the following notes and instructions:

Logical operators

Multiple arguments to filter() are combined with “and”: every expression must be true in order for a row to be included in the output. For other types of combinations, you’ll need to use Boolean operators yourself:

&is “and”|is “or”!is “not”

Let’s have a look:

The following code finds all flights that departed in November or December:

filter(flights, month == 11 | month == 12)

The order of operations doesn’t work like English. You can’t write filter(flights, month == 11 | 12), which you might literally translate into “finds all flights that departed in November or December”. Instead it finds all months that equal 11 | 12, an expression that evaluates to TRUE. In a numeric context (like here), TRUE becomes one, so this finds all flights in January, not November or December. This is quite confusing!

A useful short-hand for this problem is x %in% y. This will select every row where x is one of the values in y. We could use it to rewrite the code above:

nov_dec <- filter(flights, month %in% c(11, 12))

Sometimes you can simplify complicated subsetting by remembering De Morgan’s law: !(x & y) is the same as !x | !y, and !(x | y) is the same as !x & !y. For example, if you wanted to find flights that weren’t delayed (on arrival or departure) by more than two hours, you could use either of the following two filters:

filter(flights, !(arr_delay > 120 | dep_delay > 120))

filter(flights, arr_delay <= 120, dep_delay <= 120)

Whenever you start using complicated, multipart expressions in filter(), consider making them explicit variables instead. That makes it much easier to check your work.

Partner 2 sync

Once you have filtered the flights dataframe for flights, sync it to Github (add, commit and push).

Your turn

Based on what you’ve learned previously about data transformation, you’ll make a series of data transformation on the flights dataset. Some ideas:

- calculate the average flight delay.

- determine the longest flight distance.

- Your own question!

Exercise

Partner 1 will pull so that we all have the most current information. With your partner, transform and compute several metrics about the data. Partner 1 and 2, make sure you talk to each other and decide on who does what. Remember to make your commit messages useful! As you work, you may get merge conflicts. This is part of collaborating in GitHub; we will walk through and help you with these and also teach the whole group.

Key Points

Github allows you to synchronise work efforts and collaborate with other scientists on (R) code.

Github can be used to make custom website visible on the internet.

Merge conflicts can arise between you and yourself (different machines).

Merge conflicts arise when you collaborate and are a safe way to handle discordance.

Efficient collaboration on data analysis can be made using Github.

R Basics

Overview

Teaching: 60 min

Exercises: 20 minQuestions

What will these lessons not cover?

What are the basic features of the R language?

What are the most common objects in R?

Objectives

Be able to create the most common R objects including vectors

Understand that vectors have modes, which correspond to the type of data they contain

Be able to use arithmetic operators on R objects

Be able to retrieve (subset), name, or replace, values from a vector

Be able to use logical operators in a subsetting operation

Understand that lists can hold data of more than one mode and can be indexed

“The fantastic world of R awaits you” OR “Nobody wants to learn how to use R”

Before we begin this lesson, we want you to be clear on the goal of the workshop and these lessons. We believe that every learner can achieve competency with R. You have reached competency when you find that you are able to use R to handle common analysis challenges in a reasonable amount of time (which includes time needed to look at learning materials, search for answers online, and ask colleagues for help). As you spend more time using R (there is no substitute for regular use and practice) you will find yourself gaining competency and even expertise. The more familiar you get, the more complex the analyses you will be able to carry out, with less frustration, and in less time - the fantastic world of R awaits you!

What these lessons will not teach you

Nobody wants to learn how to use R. People want to learn how to use R to analyze their own research questions! Ok, maybe some folks learn R for R’s sake, but these lessons assume that you want to start analyzing genomic data as soon as possible. Given this, there are many valuable pieces of information about R that we simply won’t have time to cover. Hopefully, we will clear the hurdle of giving you just enough knowledge to be dangerous, which can be a high bar in R! We suggest you look into the additional learning materials in the tip box below.

Here are some R skills we will not cover in these lessons

- How to create and work with R matrices and R lists

- How to create and work with loops and conditional statements, and the “apply” family of functions (which are super useful, read more here)

- How to do basic string manipulations (e.g. finding patterns in text using grep, replacing text)

- How to plot using the default R graphic tools (we will cover plot creation, but will do so using the popular plotting package

ggplot2) - How to use advanced R statistical functions

Tip: Where to learn more

The following are good resources for learning more about R. Some of them can be quite technical, but if you are a regular R user you may ultimately need this technical knowledge.

- R for Beginners: By Emmanuel Paradis and a great starting point

- The R Manuals: Maintained by the R project

- R contributed documentation: Also linked to the R project; importantly there are materials available in several languages

- R for Data Science: A wonderful collection by noted R educators and developers Garrett Grolemund and Hadley Wickham

- Practical Data Science for Stats: Not exclusively about R usage, but a nice collection of pre-prints on data science and applications for R

- Programming in R Software Carpentry lesson: There are several Software Carpentry lessons in R to choose from

Creating objects in R

Reminder

At this point you should be coding along in the “genomics_r_basics.R” script we created in the last episode. Writing your commands in the script (and commenting it) will make it easier to record what you did and why.

What might be called a variable in many languages is called an object in R.

To create an object you need:

- a name (e.g. ‘a’)

- a value (e.g. ‘1’)

- the assignment operator (‘<-‘)

In your script, “genomics_r_basics.R”, using the R assignment operator ‘<-‘, assign ‘1’ to the object ‘a’ as shown. Remember to leave a comment in the line above (using the ‘#’) to explain what you are doing:

# this line creates the object 'a' and assigns it the value '1'

a <- 1

Next, run this line of code in your script. You can run a line of code by hitting the Run button that is just above the first line of your script in the header of the Source pane or you can use the appropriate shortcut:

- Windows execution shortcut: Ctrl+Enter

- Mac execution shortcut: Cmd(⌘)+Enter

To run multiple lines of code, you can highlight all the line you wish to run and then hit Run or use the shortcut key combo listed above.

In the RStudio ‘Console’ you should see:

a <- 1

>

The ‘Console’ will display lines of code run from a script and any outputs or status/warning/error messages (usually in red).

In the ‘Environment’ window you will also get a table:

| Values | |

|---|---|

| a | 1 |

The ‘Environment’ window allows you to keep track of the objects you have created in R.

Exercise: Create some objects in R

Create the following objects; give each object an appropriate name (your best guess at what name to use is fine):

- Create an object that has the value of number of pairs of human chromosomes

- Create an object that has a value of your favorite gene name

- Create an object that has this URL as its value: “ftp://ftp.ensemblgenomes.org/pub/bacteria/release-39/fasta/bacteria_5_collection/escherichia_coli_b_str_rel606/”

- Create an object that has the value of the number of chromosomes in a diploid human cell

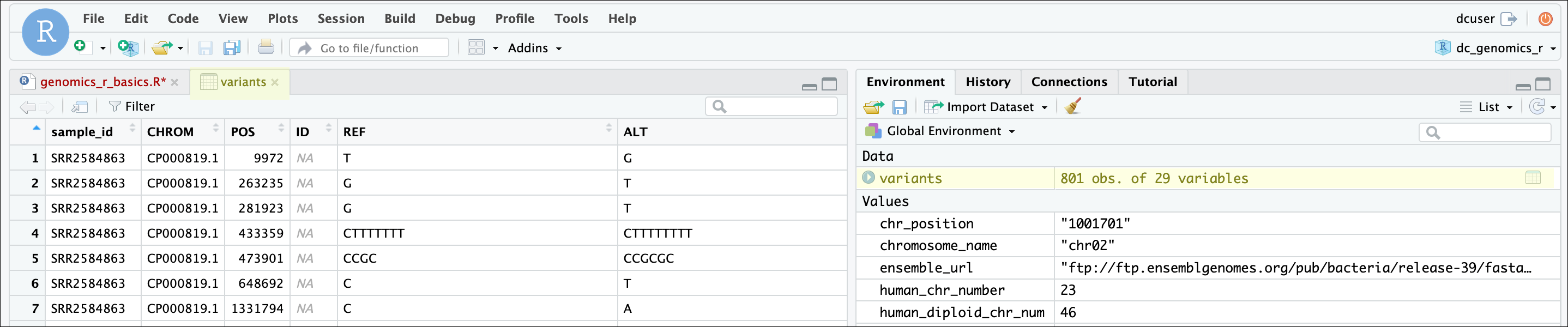

Solution

Here as some possible answers to the challenge:

human_chr_number <- 23 gene_name <- 'pten' ensemble_url <- 'ftp://ftp.ensemblgenomes.org/pub/bacteria/release-39/fasta/bacteria_5_collection/escherichia_coli_b_str_rel606/' human_diploid_chr_num <- 2 * human_chr_number

Naming objects in R

Here are some important details about naming objects in R.

- Avoid spaces and special characters: Object names cannot contain spaces or the minus sign (

-). You can use ‘_’ to make names more readable. You should avoid using special characters in your object name (e.g. ! @ # . , etc.). Also, object names cannot begin with a number. - Use short, easy-to-understand names: You should avoid naming your objects using single letters (e.g. ‘n’, ‘p’, etc.). This is mostly to encourage you to use names that would make sense to anyone reading your code (a colleague, or even yourself a year from now). Also, avoiding excessively long names will make your code more readable.

- Avoid commonly used names: There are several names that may already have a definition in the R language (e.g. ‘mean’, ‘min’, ‘max’). One clue that a name already has meaning is that if you start typing a name in RStudio and it gets a colored highlight or RStudio gives you a suggested autocompletion you have chosen a name that has a reserved meaning.

- Use the recommended assignment operator: In R, we use ‘<- ‘ as the

preferred assignment operator. ‘=’ works too, but is most commonly used in

passing arguments to functions (more on functions later). There is a shortcut

for the R assignment operator:

- Windows execution shortcut: Alt+-

- Mac execution shortcut: Option+-

There are a few more suggestions about naming and style you may want to learn more about as you write more R code. There are several “style guides” that have advice, and one to start with is the tidyverse R style guide.

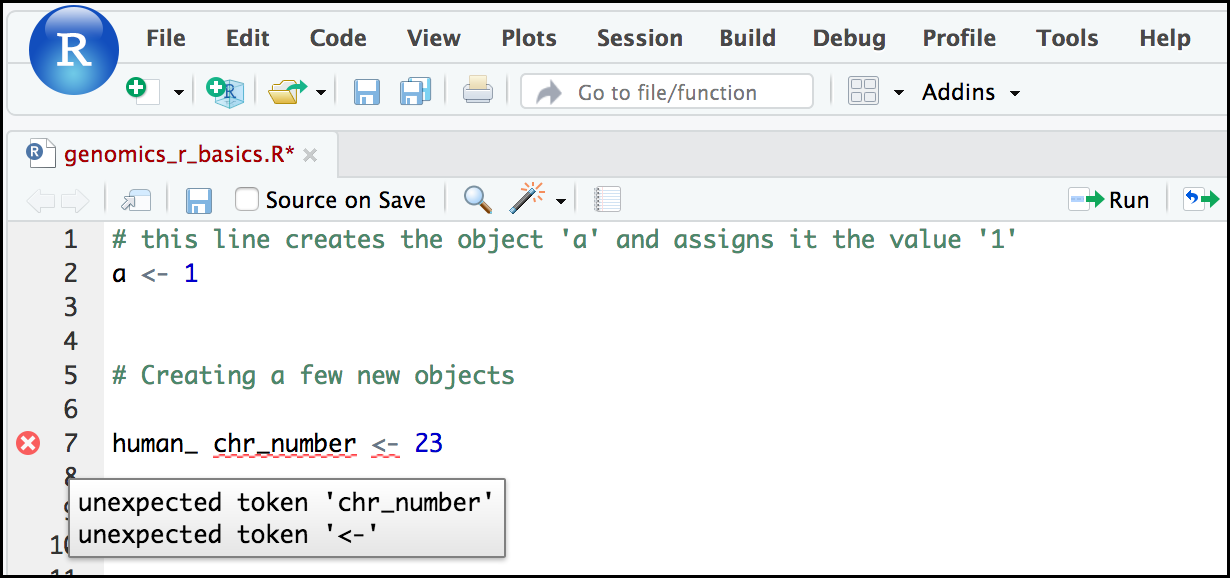

Tip: Pay attention to warnings in the script console

If you enter a line of code in your script that contains an error, RStudio may give you an error message and underline this mistake. Sometimes these messages are easy to understand, but often the messages may need some figuring out. Paying attention to these warnings will help you avoid mistakes. In the example below, our object name has a space, which is not allowed in R. The error message does not say this directly, but R is “not sure” about how to assign the name to “human_ chr_number” when the object name we want is “human_chr_number”.

Reassigning object names or deleting objects

Once an object has a value, you can change that value by overwriting it. R will not give you a warning or error if you overwriting an object, which may or may not be a good thing depending on how you look at it.

# gene_name has the value 'pten' or whatever value you used in the challenge.

# We will now assign the new value 'tp53'

gene_name <- 'tp53'

You can also remove an object from R’s memory entirely. The rm() function

will delete the object.

# delete the object 'gene_name'

rm(gene_name)

If you run a line of code that has only an object name, R will normally display the contents of that object. In this case, we are told the object no longer exists.

Error: object 'gene_name' not found

Understanding object data types (modes)

In R, every object has two properties:

- Length: How many distinct values are held in that object

- Mode: What is the classification (type) of that object.

We will get to the “length” property later in the lesson. The “mode” property corresponds to the type of data an object represents. The most common modes you will encounter in R are:

| Mode (abbreviation) | Type of data |

|---|---|

| Numeric (num) | Numbers such floating point/decimals (1.0, 0.5, 3.14), there are also more specific numeric types (dbl - Double, int - Integer). These differences are not relevant for most beginners and pertain to how these values are stored in memory |

| Character (chr) | A sequence of letters/numbers in single ‘’ or double “ “ quotes |

| Logical | Boolean values - TRUE or FALSE |

There are a few other modes (i.e. “complex”, “raw” etc.) but these are the three we will work with in this lesson.

Data types are familiar in many programming languages, but also in natural language where we refer to them as the parts of speech, e.g. nouns, verbs, adverbs, etc. Once you know if a word - perhaps an unfamiliar one - is a noun, you can probably guess you can count it and make it plural if there is more than one (e.g. 1 Tuatara, or 2 Tuataras). If something is a adjective, you can usually change it into an adverb by adding “-ly” (e.g. jejune vs. jejunely). Depending on the context, you may need to decide if a word is in one category or another (e.g “cut” may be a noun when it’s on your finger, or a verb when you are preparing vegetables). These concepts have important analogies when working with R objects.

Exercise: Create objects and check their modes

Create the following objects in R, then use the

mode()function to verify their modes. Try to guess what the mode will be before you look at the solution

chromosome_name <- 'chr02'od_600_value <- 0.47chr_position <- '1001701'spock <- TRUEpilot <- EarhartSolution

Error in eval(expr, envir, enclos): object 'Earhart' not foundmode(chromosome_name)[1] "character"mode(od_600_value)[1] "numeric"mode(chr_position)[1] "character"mode(spock)[1] "logical"mode(pilot)Error in mode(pilot): object 'pilot' not found

Notice from the solution that even if a series of numbers is given as a value

R will consider them to be in the “character” mode if they are enclosed as

single or double quotes. Also, notice that you cannot take a string of alphanumeric

characters (e.g. Earhart) and assign as a value for an object. In this case,

R looks for an object named Earhart but since there is no object, no assignment can

be made. If Earhart did exist, then the mode of pilot would be whatever

the mode of Earhart was originally. If we want to create an object

called pilot that was the name “Earhart”, we need to enclose

Earhart in quotation marks.

pilot <- "Earhart"

mode(pilot)

[1] "character"

Mathematical and functional operations on objects

Once an object exists (which by definition also means it has a mode), R can appropriately manipulate that object. For example, objects of the numeric modes can be added, multiplied, divided, etc. R provides several mathematical (arithmetic) operators including:

| Operator | Description |

|---|---|

| + | addition |

| - | subtraction |

| * | multiplication |

| / | division |

| ^ or ** | exponentiation |

| a%/%b | integer division (division where the remainder is discarded) |

| a%%b | modulus (returns the remainder after division) |

These can be used with literal numbers:

(1 + (5 ** 0.5))/2

[1] 1.618034

and importantly, can be used on any object that evaluates to (i.e. interpreted by R) a numeric object:

# multiply the object 'human_chr_number' by 2

human_chr_number * 2

[1] 46

Exercise: Compute the golden ratio

One approximation of the golden ratio (φ) can be found by taking the sum of 1 and the square root of 5, and dividing by 2 as in the example above. Compute the golden ratio to 3 digits of precision using the

sqrt()andround()functions. Hint: remember theround()function can take 2 arguments.Solution

round((1 + sqrt(5))/2, digits = 3)[1] 1.618Notice that you can place one function inside of another.

Vectors

Vectors are probably the

most used commonly used object type in R.

A vector is a collection of values that are all of the same type (numbers, characters, etc.).

One of the most common

ways to create a vector is to use the c() function - the “concatenate” or

“combine” function. Inside the function you may enter one or more values; for

multiple values, separate each value with a comma:

# Create the SNP gene name vector

snp_genes <- c("OXTR", "ACTN3", "AR", "OPRM1")

Vectors always have a mode and a length.

You can check these with the mode() and length() functions respectively.

Another useful function that gives both of these pieces of information is the

str() (structure) function.

# Check the mode, length, and structure of 'snp_genes'

mode(snp_genes)

[1] "character"

length(snp_genes)

[1] 4

str(snp_genes)

chr [1:4] "OXTR" "ACTN3" "AR" "OPRM1"

Vectors are quite important in R. Another data type that we will work with later in this lesson, data frames, are collections of vectors. What we learn here about vectors will pay off even more when we start working with data frames.

Creating and subsetting vectors

Let’s create a few more vectors to play around with:

# Some interesting human SNPs

# while accuracy is important, typos in the data won't hurt you here

snps <- c('rs53576', 'rs1815739', 'rs6152', 'rs1799971')

snp_chromosomes <- c('3', '11', 'X', '6')

snp_positions <- c(8762685, 66560624, 67545785, 154039662)

Once we have vectors, one thing we may want to do is specifically retrieve one or more values from our vector. To do so, we use bracket notation. We type the name of the vector followed by square brackets. In those square brackets we place the index (e.g. a number) in that bracket as follows:

# get the 3rd value in the snp_genes vector

snp_genes[3]

[1] "AR"

In R, every item your vector is indexed, starting from the first item (1) through to the final number of items in your vector. You can also retrieve a range of numbers:

# get the 1st through 3rd value in the snp_genes vector

snp_genes[1:3]

[1] "OXTR" "ACTN3" "AR"

If you want to retrieve several (but not necessarily sequential) items from a vector, you pass a vector of indices; a vector that has the numbered positions you wish to retrieve.

# get the 1st, 3rd, and 4th value in the snp_genes vector

snp_genes[c(1, 3, 4)]

[1] "OXTR" "AR" "OPRM1"

There are additional (and perhaps less commonly used) ways of subsetting a vector (see these examples). Also, several of these subsetting expressions can be combined:

# get the 1st through the 3rd value, and 4th value in the snp_genes vector

# yes, this is a little silly in a vector of only 4 values.

snp_genes[c(1:3,4)]

[1] "OXTR" "ACTN3" "AR" "OPRM1"

Adding to, removing, or replacing values in existing vectors

Once you have an existing vector, you may want to add a new item to it. To do

so, you can use the c() function again to add your new value:

# add the gene 'CYP1A1' and 'APOA5' to our list of snp genes

# this overwrites our existing vector

snp_genes <- c(snp_genes, "CYP1A1", "APOA5")

We can verify that “snp_genes” contains the new gene entry

snp_genes

[1] "OXTR" "ACTN3" "AR" "OPRM1" "CYP1A1" "APOA5"

Using a negative index will return a version of a vector with that index’s value removed:

snp_genes[-6]

[1] "OXTR" "ACTN3" "AR" "OPRM1" "CYP1A1"

We can remove that value from our vector by overwriting it with this expression:

snp_genes <- snp_genes[-6]

snp_genes

[1] "OXTR" "ACTN3" "AR" "OPRM1" "CYP1A1"

We can also explicitly rename or add a value to our index using double bracket notation:

snp_genes[7]<- "APOA5"

snp_genes

[1] "OXTR" "ACTN3" "AR" "OPRM1" "CYP1A1" NA "APOA5"

Notice in the operation above that R inserts an NA value to extend our vector so that the gene “APOA5” is an index 7. This may be a good or not-so-good thing depending on how you use this.

Exercise: Examining and subsetting vectors

Answer the following questions to test your knowledge of vectors

Which of the following are true of vectors in R? A) All vectors have a mode or a length

B) All vectors have a mode and a length

C) Vectors may have different lengths

D) Items within a vector may be of different modes

E) You can use thec()to add one or more items to an existing vector

F) You can use thec()to add a vector to an exiting vectorSolution

A) False - Vectors have both of these properties

B) True

C) True

D) False - Vectors have only one mode (e.g. numeric, character); all items in

a vector must be of this mode. E) True

F) True

Logical Subsetting

There is one last set of cool subsetting capabilities we want to introduce. It is possible within R to retrieve items in a vector based on a logical evaluation or numerical comparison. For example, let’s say we wanted get all of the SNPs in our vector of SNP positions that were greater than 100,000,000. We could index using the ‘>’ (greater than) logical operator:

snp_positions[snp_positions > 100000000]

[1] 154039662

In the square brackets you place the name of the vector followed by the comparison operator and (in this case) a numeric value. Some of the most common logical operators you will use in R are:

| Operator | Description |

|---|---|

| < | less than |

| <= | less than or equal to |

| > | greater than |

| >= | greater than or equal to |

| == | exactly equal to |

| != | not equal to |

| !x | not x |

| a | b | a or b |

| a & b | a and b |

The magic of programming

The reason why the expression

snp_positions[snp_positions > 100000000]works can be better understood if you examine what the expression “snp_positions > 100000000” evaluates to:snp_positions > 100000000[1] FALSE FALSE FALSE TRUEThe output above is a logical vector, the 4th element of which is TRUE. When you pass a logical vector as an index, R will return the true values:

snp_positions[c(FALSE, FALSE, FALSE, TRUE)][1] 154039662If you have never coded before, this type of situation starts to expose the “magic” of programming. We mentioned before that in the bracket notation you take your named vector followed by brackets which contain an index: named_vector[index]. The “magic” is that the index needs to evaluate to a number. So, even if it does not appear to be an integer (e.g. 1, 2, 3), as long as R can evaluate it, we will get a result. That our expression

snp_positions[snp_positions > 100000000]evaluates to a number can be seen in the following situation. If you wanted to know which index (1, 2, 3, or 4) in our vector of SNP positions was the one that was greater than 100,000,000?We can use the

which()function to return the indices of any item that evaluates as TRUE in our comparison:which(snp_positions > 100000000)[1] 4Why this is important

Often in programming we will not know what inputs and values will be used when our code is executed. Rather than put in a pre-determined value (e.g 100000000) we can use an object that can take on whatever value we need. So for example:

snp_marker_cutoff <- 100000000 snp_positions[snp_positions > snp_marker_cutoff][1] 154039662Ultimately, it’s putting together flexible, reusable code like this that gets at the “magic” of programming!

A few final vector tricks

Finally, there are a few other common retrieve or replace operations you may

want to know about. First, you can check to see if any of the values of your

vector are missing (i.e. are NA). Missing data will get a more detailed treatment later,

but the is.NA() function will return a logical vector, with TRUE for any NA

value:

# current value of 'snp_genes':

# chr [1:7] "OXTR" "ACTN3" "AR" "OPRM1" "CYP1A1" NA "APOA5"

is.na(snp_genes)

[1] FALSE FALSE FALSE FALSE FALSE TRUE FALSE

Sometimes, you may wish to find out if a specific value (or several values) is

present a vector. You can do this using the comparison operator %in%, which

will return TRUE for any value in your collection that is in

the vector you are searching:

# current value of 'snp_genes':

# chr [1:7] "OXTR" "ACTN3" "AR" "OPRM1" "CYP1A1" NA "APOA5"

# test to see if "ACTN3" or "APO5A" is in the snp_genes vector

# if you are looking for more than one value, you must pass this as a vector

c("ACTN3","APOA5") %in% snp_genes

[1] TRUE TRUE

Review Exercise 1

What data types/modes are the following vectors? a.

snps

b.snp_chromosomes

c.snp_positionsSolution

typeof(snps)[1] "character"typeof(snp_chromosomes)[1] "character"typeof(snp_positions)[1] "double"

Review Exercise 2

Add the following values to the specified vectors: a. To the

snpsvector add: ‘rs662799’

b. To thesnp_chromosomesvector add: 11

c. To thesnp_positionsvector add: 116792991Solution

snps <- c(snps, 'rs662799') snps[1] "rs53576" "rs1815739" "rs6152" "rs1799971" "rs662799"snp_chromosomes <- c(snp_chromosomes, "11") # did you use quotes? snp_chromosomes[1] "3" "11" "X" "6" "11"snp_positions <- c(snp_positions, 116792991) snp_positions[1] 8762685 66560624 67545785 154039662 116792991

Review Exercise 3

Make the following change to the

snp_genesvector:Hint: Your vector should look like this in ‘Environment’:

chr [1:7] "OXTR" "ACTN3" "AR" "OPRM1" "CYP1A1" NA "APOA5". If not recreate the vector by running this expression:snp_genes <- c("OXTR", "ACTN3", "AR", "OPRM1", "CYP1A1", NA, "APOA5")a. Create a new version of

snp_genesthat does not contain CYP1A1 and then

b. Add 2 NA values to the end ofsnp_genesSolution

snp_genes <- snp_genes[-5] snp_genes <- c(snp_genes, NA, NA) snp_genes[1] "OXTR" "ACTN3" "AR" "OPRM1" NA "APOA5" NA NA

Review Exercise 4

Using indexing, create a new vector named

combinedthat contains:

- The the 1st value in

snp_genes- The 1st value in

snps- The 1st value in

snp_chromosomes- The 1st value in

snp_positionsSolution

combined <- c(snp_genes[1], snps[1], snp_chromosomes[1], snp_positions[1]) combined[1] "OXTR" "rs53576" "3" "8762685"

Review Exercise 5

What type of data is

combined?Solution

typeof(combined)[1] "character"

Bonus material: Lists

Lists are quite useful in R, but we won’t be using them in the genomics lessons. That said, you may come across lists in the way that some bioinformatics programs may store and/or return data to you. One of the key attributes of a list is that, unlike a vector, a list may contain data of more than one mode. Learn more about creating and using lists using this nice tutorial. In this one example, we will create a named list and show you how to retrieve items from the list.

# Create a named list using the 'list' function and our SNP examples

# Note, for easy reading we have placed each item in the list on a separate line

# Nothing special about this, you can do this for any multiline commands

# To run this command, make sure the entire command (all 4 lines) are highlighted

# before running

# Note also, as we are doing all this inside the list() function use of the

# '=' sign is good style

snp_data <- list(genes = snp_genes,

refference_snp = snps,

chromosome = snp_chromosomes,

position = snp_positions)

# Examine the structure of the list

str(snp_data)

List of 4

$ genes : chr [1:8] "OXTR" "ACTN3" "AR" "OPRM1" ...

$ refference_snp: chr [1:5] "rs53576" "rs1815739" "rs6152" "rs1799971" ...

$ chromosome : chr [1:5] "3" "11" "X" "6" ...

$ position : num [1:5] 8.76e+06 6.66e+07 6.75e+07 1.54e+08 1.17e+08

To get all the values for the position object in the list, we use the $ notation:

# return all the values of position object

snp_data$position

[1] 8762685 66560624 67545785 154039662 116792991

To get the first value in the position object, use the [] notation to index:

# return first value of the position object

snp_data$position[1]

[1] 8762685

Key Points

Effectively using R is a journey of months or years. Still you don’t have to be an expert to use R and you can start using and analyzing your data with with about a day’s worth of training

It is important to understand how data are organized by R in a given object type and how the mode of that type (e.g. numeric, character, logical, etc.) will determine how R will operate on that data.

Working with vectors effectively prepares you for understanding how data are organized in R.

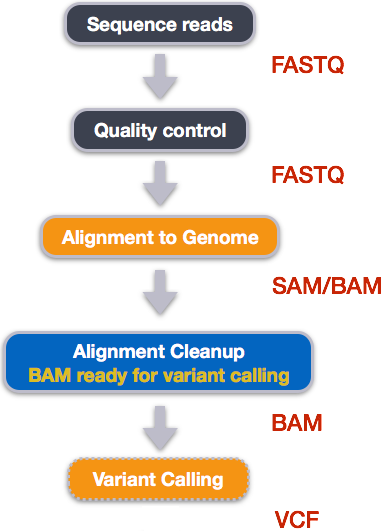

Introduction to the example dataset and file type

Overview

Teaching: 15 min

Exercises: 0 minQuestions